Released under the Official Information Act

Annual Assessment of Results (AAR)

Report of the AAR for the New Zealand Aid Programme in the Ministry of Foreign Affairs and Trade for AMAs and ACAs completed in 2017/18

Final Report

Prepared for // Insights, Monitoring & Evaluation

Pacific and Development Group

New Zealand Aid Programme

Ministry of Foreign Affairs and Trade

Date // 3 July 2019

IOD PARC is the trading name of International Organisation Development Ltd //

Omega Court

362 Cemetery Road

Sheffield

S11 8FT

United Kingdom

Tel: +44 (0) 114 267 3620

www.iodparc.com

Contents

Acronyms

Executive Summary

1. Introduction

2. Methodology

Limitations of the AAR

3. Key findings

3.1 Robustness of effectiveness ratings

3.2 Robustness of other DAC criteria ratings (ACAs only)

3.3 Assessment of qualitative analyses in AMAs and ACAs

4. Summary of Findings

5. Conclusions

6. Recommendations

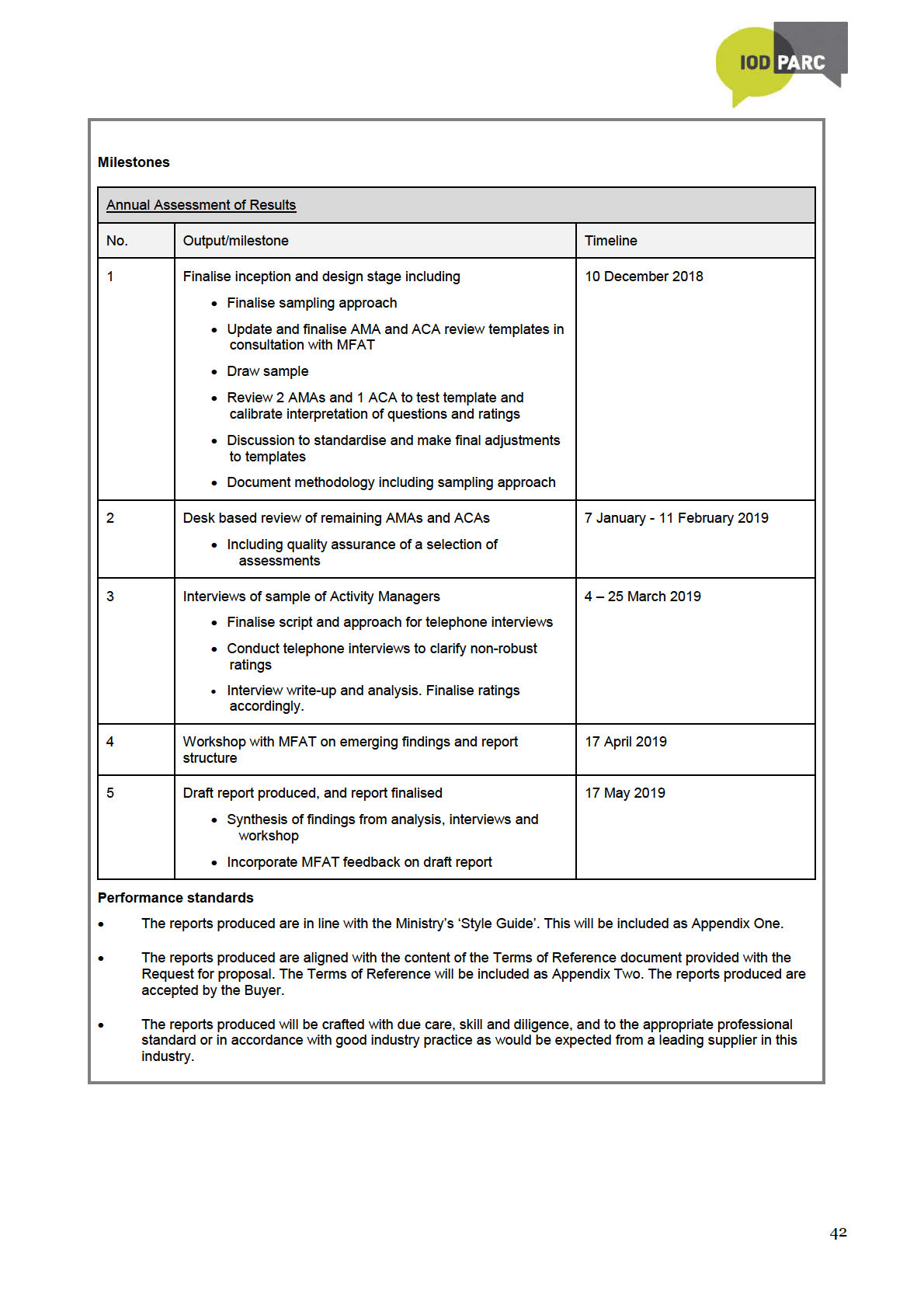

Appendix 1: Methodology

Appendix 2: Terms of Reference

Appendix 3: Distribution of sample

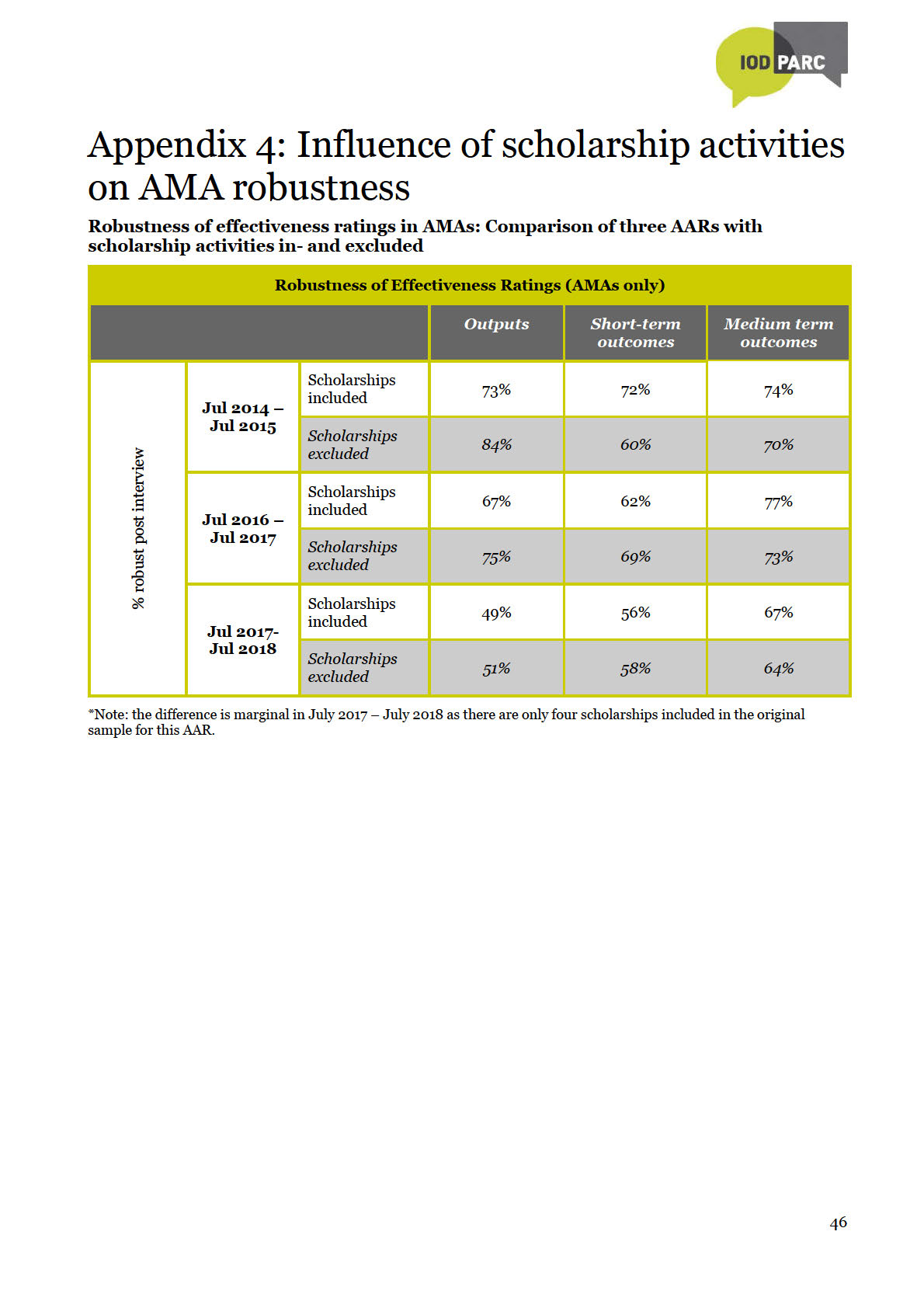

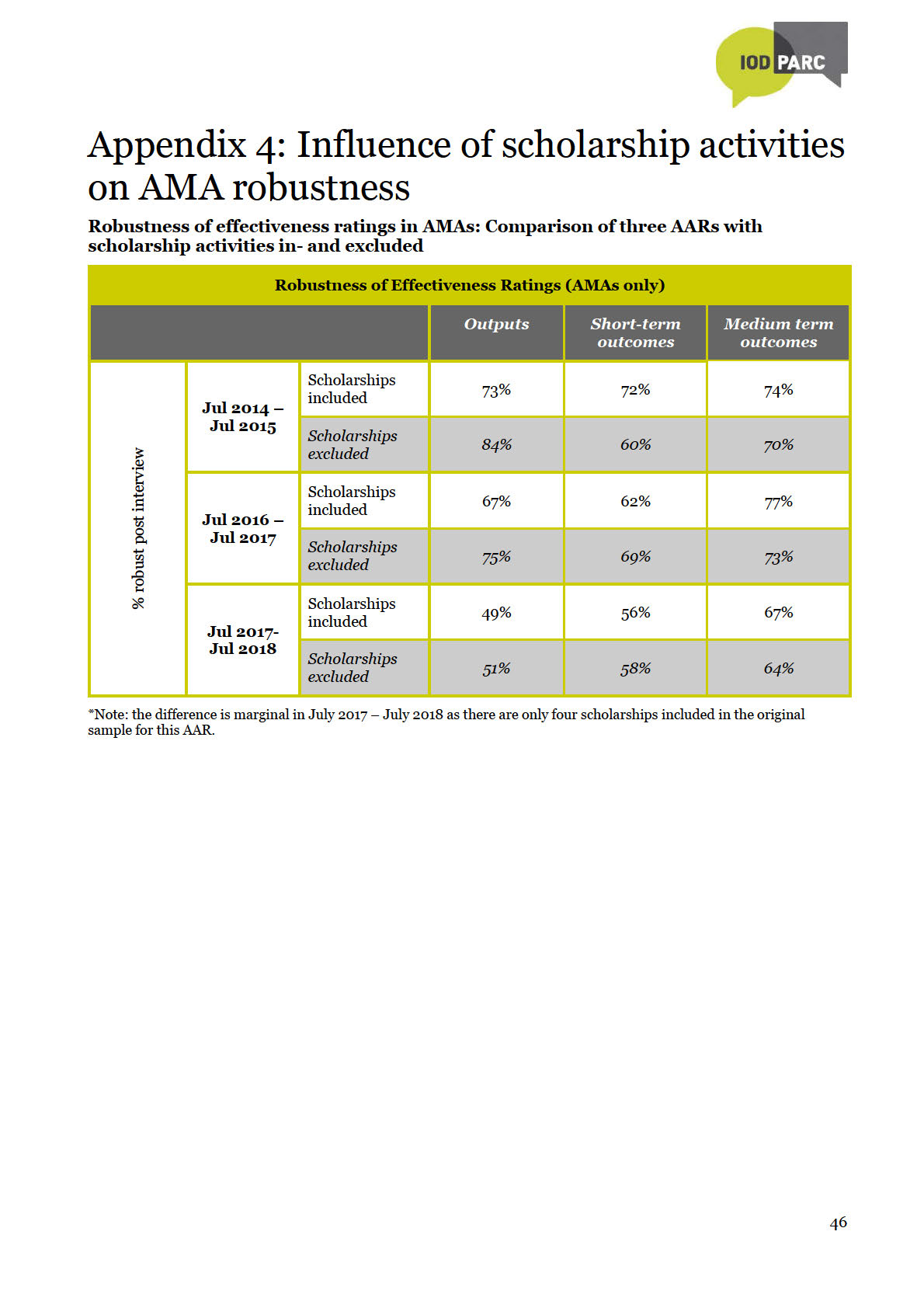

Appendix 4: Influence of scholarship activities on AMA robustness

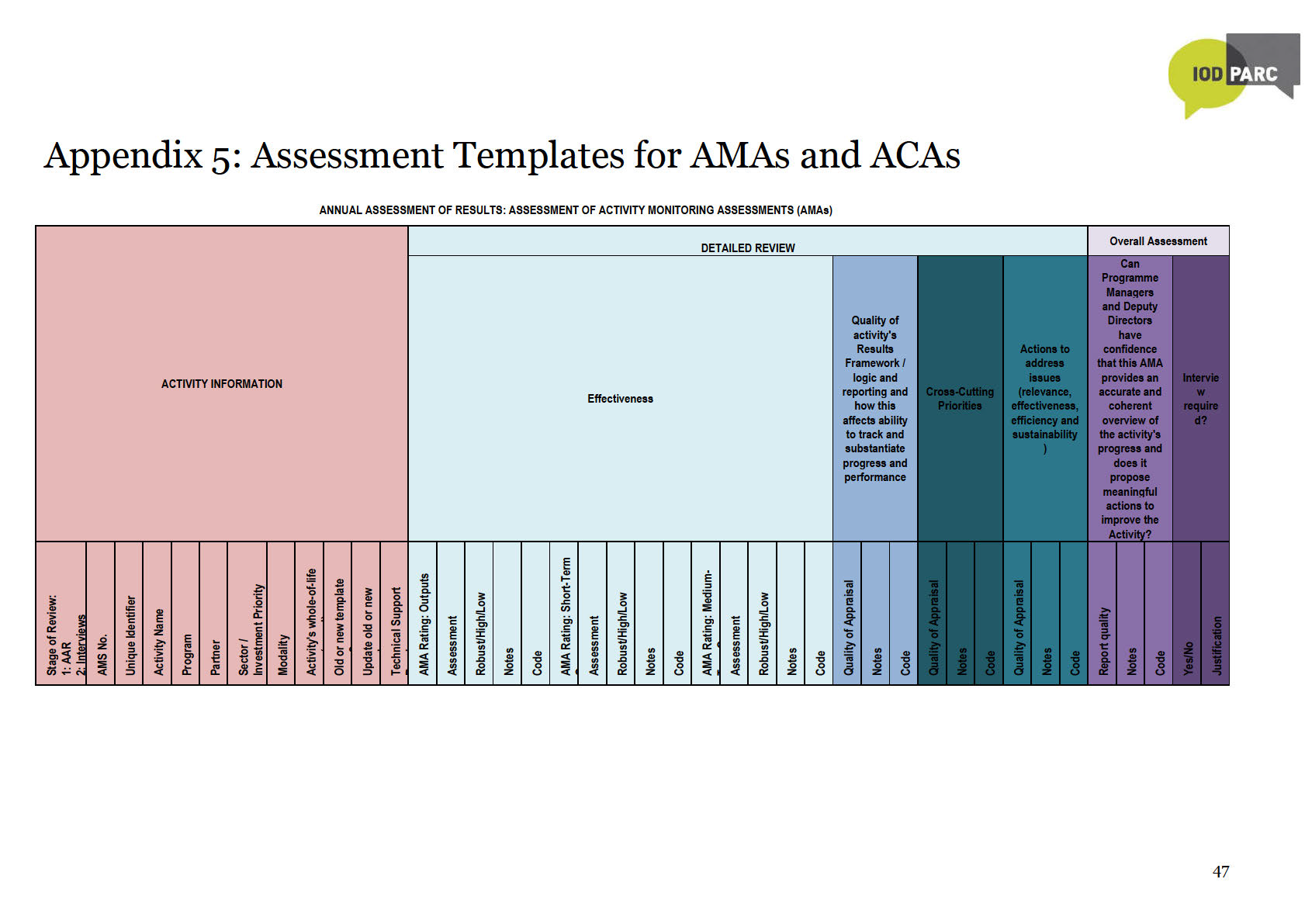

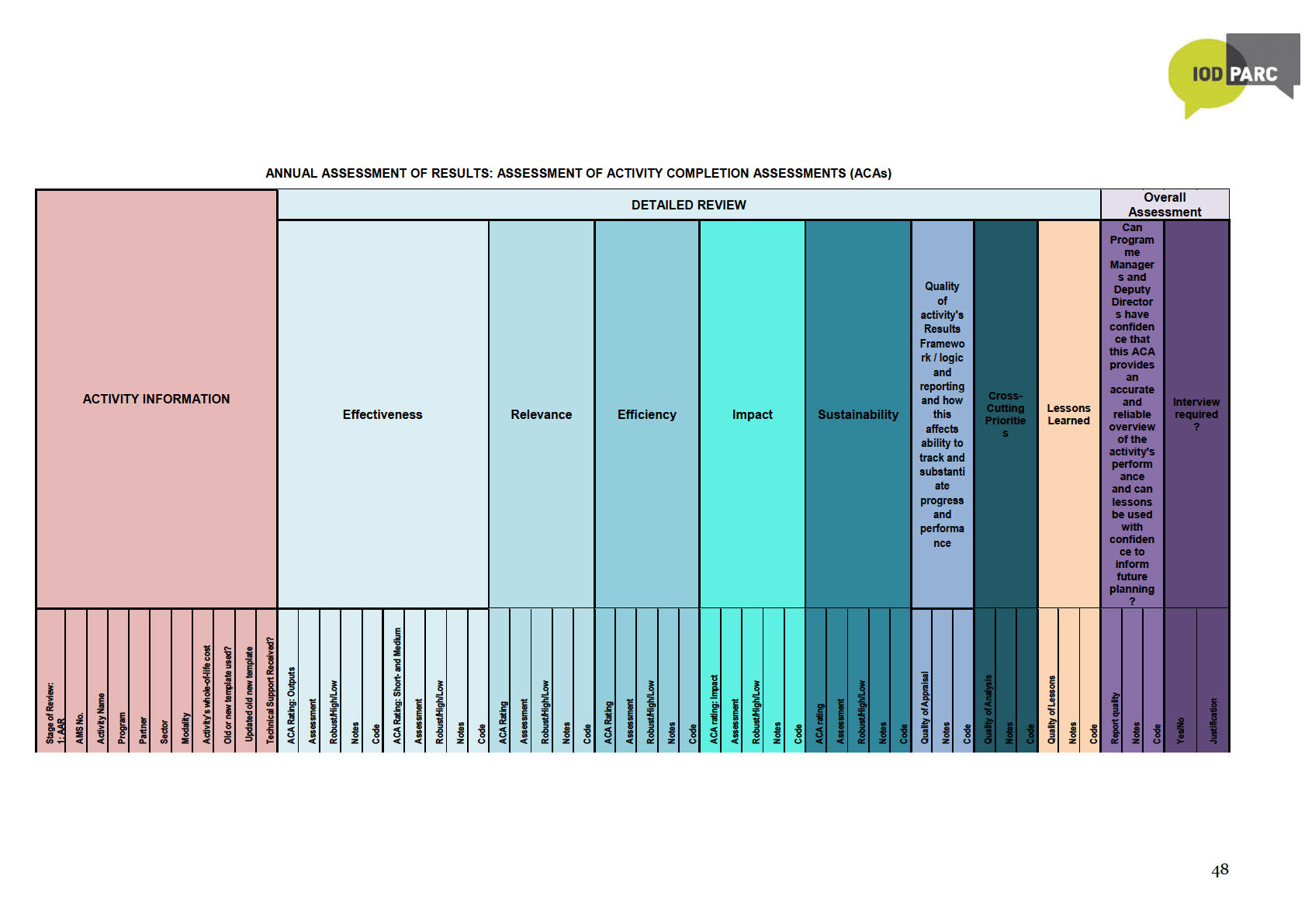

Appendix 5: Assessment Templates for AMAs and ACAs

Appendix 6: Interviewing script

Acronyms

| Acronym | Definition |
|---|---|
| ACA | Activity Completion Assessment |
| AMA | Activity Monitoring Assessment |
| AQP | Activity Quality Policy |
| ARF | Activity Results Framework |
| CCIs | Cross Cutting Issues |
| DAC | Development Assistance Committee |
| DP&R | Development Planning and Results (team) |
| M&E | Monitoring and Evaluation |
| MFAT | Ministry of Foreign Affairs and Trade |
| MO | Multilateral Organisation |
| N/A | Not Assessable |
| ODA | Official Development Assistance |
| RMT | Results Measurement Table |
| SAM | Scholarships and Alumni Management System |
| UN | United Nations |
| VfM | Value for Money |

Executive Summary

Background

New Zealand’s Aid Programme is delivered through investments (known as Activities) administered

by the Ministry of Foreign Affairs and Trade (MFAT). The performance of individual Activities is

reported through Activity Monitoring Assessments (AMAs) and Activity Completion Assessments

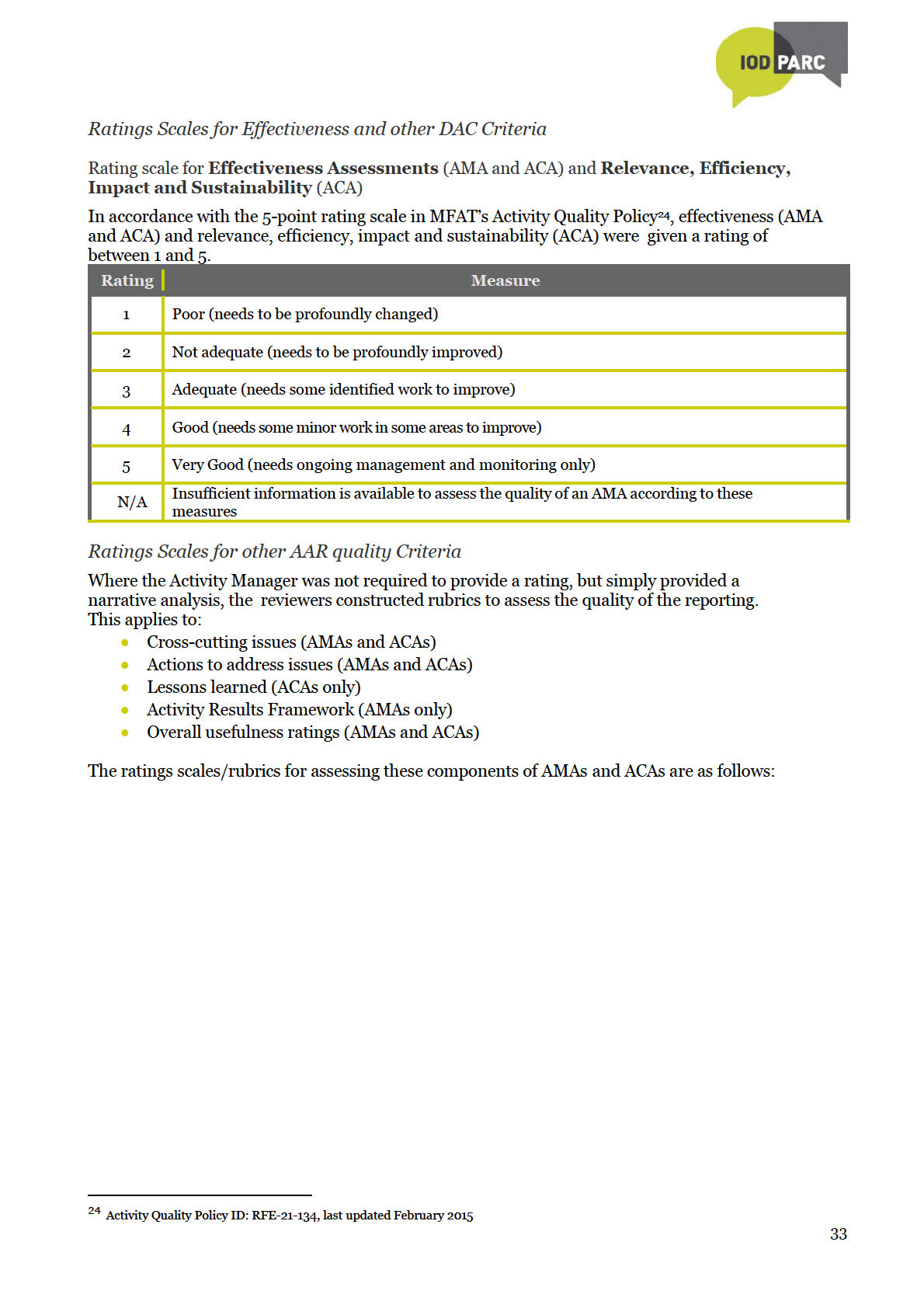

(ACAs). Within AMAs and ACAs, Activity Managers rate performance on a five-point scale against set

criteria. Upon completion of an Activity, ACAs record ratings of effectiveness, relevance, impact,

efficiency, and sustainability. AMAs record ratings of effectiveness of on-going Activities. An

important element of AMAs and ACAs is that they identify issues that may affect the implementation

and results of activities, as well as recommendations and lessons to improve activities.

For many Activities, AMAs and ACAs are the only formal MFAT assessment of their progress and

performance. It is therefore important that MFAT has confidence in their robustness. The Annual

Assessment of Results (AAR) assesses the robustness of ratings in AMAs and ACAs. The AAR also

assesses whether AMAs and ACAs are helpful to improve Activities.

According to Activity Managers who were interviewed for this AAR, AMA and ACA templates have

become more user-friendly over the last five years through revisions based on outcomes of AARs,

among other things. AMAs and ACAs are becoming increasingly embedded within MFAT’s performance

management system. Completion rates for AMAs and ACAs have increased substantially, from 59% in

the 2013/14 AAR to 88% in 2017/18, the highest completion rate to date.

This is the fourth AAR,1 providing an independent quality assurance of a randomly selected

representative sample of 66 AMAs and 36 ACAs, drawn from a total of 141 AMAs and 55 ACAs that

were completed between 1 July 2017 and 30 June 2018. Of those selected, 63 AMAs and 28 ACAs

were eventually reviewed.2 AMAs and ACAs with non-robust effectiveness ratings were progressed to

an interview with the relevant Activity Manager, following which reviewers finalised their assessment.

A proportionally low number of interviews conducted with Activity Managers to review non-robust

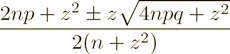

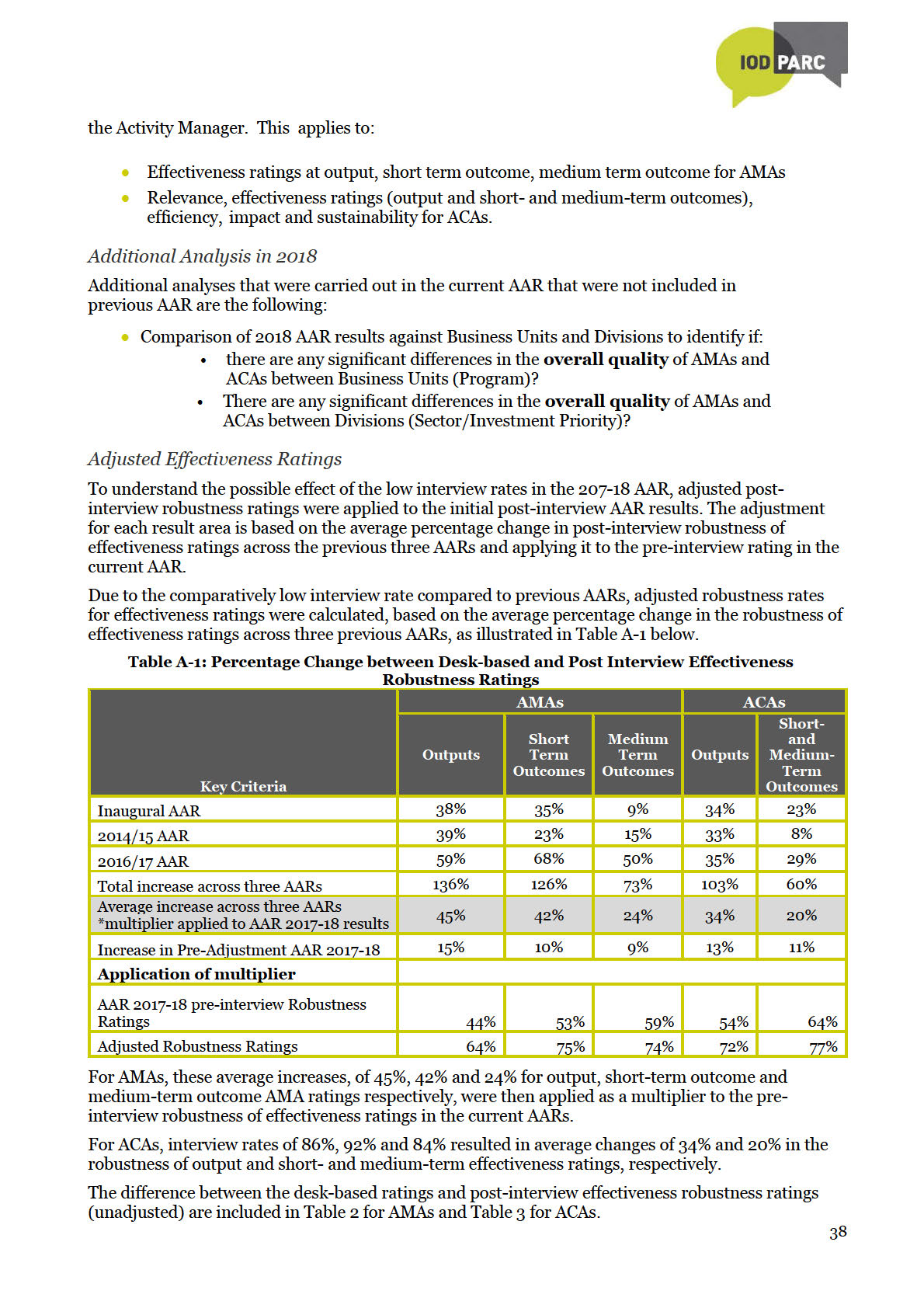

effectiveness ratings is a major limitation of this AAR.3 To enhance comparability with previous

AARs, final effectiveness ratings were adjusted to allow for the low interviewing rate in the current

AAR.4

Summary of Findings

Robustness of Effectiveness Ratings

To have general confidence in the robustness of Activity Managers’ effectiveness ratings across all

AMAs and ACAs, MFAT would expect at least 75% of the assessed ratings to be robust.

MFAT can be reasonably confident in the robustness of AMA effectiveness ratings, especially for

short- and medium-term outcome ratings. Based on the adjusted post-interview ratings, it is

encouraging that the robustness of short-term outcome ratings in

AMAs meets the 75% confidence

threshold, while that of medium-term outcome ratings is just under, at 74%. The robustness of output

ratings still trails at 64%.

1 Three previous AARs were conducted in 2015, 2016 and 2018 for AMAs and ACAs completed between 1 July 2013 and 30 June 2014;

between 1 July 2014 and 30 June 2015; and between 1 July 2016 and 30 June 2017, respectively.

2 The main reason for the differences between selected and reviewed totals is that Activity-related documents could not be provided in

time to be included in the review.

3 The interview rate for the current AAR (33%) is much lower than in previous AARs (86% in the inaugural AAR; then 92% and 84% in

the two subsequent AARs).

4 The adjustment for each result area is based on the average percentage change in post-interview robustness of effectiveness ratings

across the previous three AARs and applying it to the pre-interview rating in the current AAR. Both the initial post-interview robustness

ratings and the adjusted post-interview ratings have limitations. The initial post-interview ratings are not informed by a proportional

number of interviews compared to previous years and therefore are likely to be understated. The adjusted post-interview ratings are

indicative rather than definitive, but are likely to be closer to what the actual results may have been.
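The adjustment described in footnote 4 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `adjusted_post_interview` is hypothetical, "percentage change" is read here as a relative change rather than a percentage-point change, and the input figures are invented for the example and are not taken from this or any previous AAR.

```python
from statistics import mean


def adjusted_post_interview(current_pre, previous_aars):
    """Estimate a post-interview robustness share for the current AAR.

    current_pre   -- pre-interview share of robust ratings (0..1), current AAR
    previous_aars -- list of (pre, post) robustness shares from earlier AARs
    """
    # Relative uplift observed after interviewing in each earlier AAR.
    # Assumption: "percentage change" means relative change, not points.
    uplifts = [(post - pre) / pre for pre, post in previous_aars]
    # Apply the average uplift to the current pre-interview share,
    # capping at 1.0 because a share cannot exceed 100%.
    return min(1.0, current_pre * (1 + mean(uplifts)))


# Hypothetical figures for one result area, NOT taken from the report.
previous = [(0.50, 0.60), (0.40, 0.50), (0.60, 0.75)]
estimate = adjusted_post_interview(0.60, previous)
print(f"Adjusted post-interview robustness: {estimate:.0%}")
```

Because the uplift is averaged over only three earlier AARs, an estimate produced this way is indicative at best, which is consistent with the caution expressed in the footnote.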

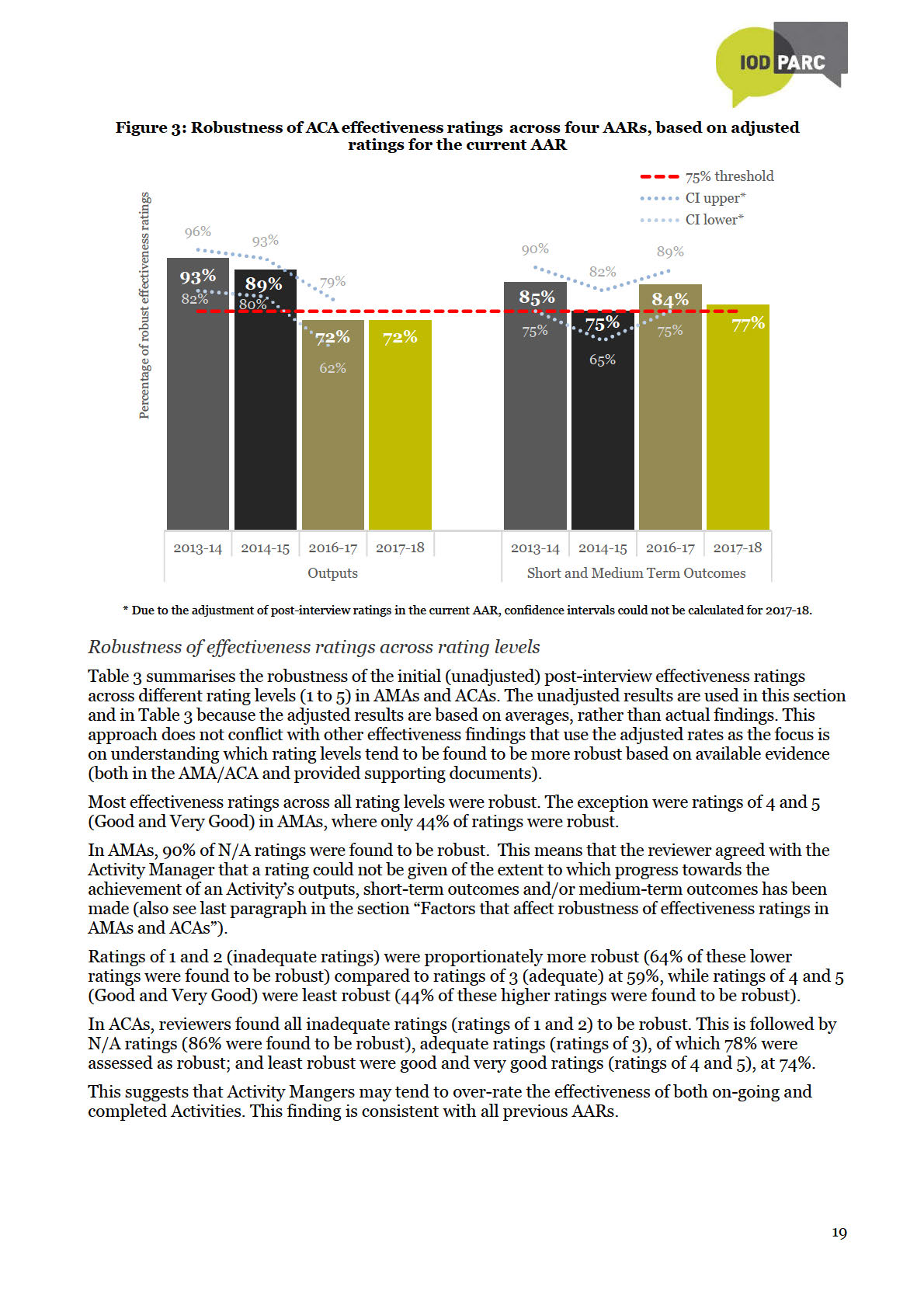

The robustness of output and medium-term outcome ratings for AMAs in the current AAR is lower

than in previous AARs, but the robustness of short-term outcome ratings is higher. However,

there have been no statistically significant changes in the robustness of AMA effectiveness ratings in

the current AAR compared to both the inaugural AAR and the 2016-17 AAR.5

Based on adjusted post-interview ratings, the robustness of short- and medium-term outcome ratings

in ACAs, at 77%, exceeds MFAT’s confidence threshold, but the robustness of output ratings trails at

72%. Compared to the inaugural AAR, the decrease in robustness of ACA output ratings is likely to be

statistically significant.6 When an Activity ends, it is especially important to know whether it has

achieved its results or not. It is therefore encouraging that MFAT can be reasonably confident about

the robustness of short- and medium-term outcome ratings in ACAs.

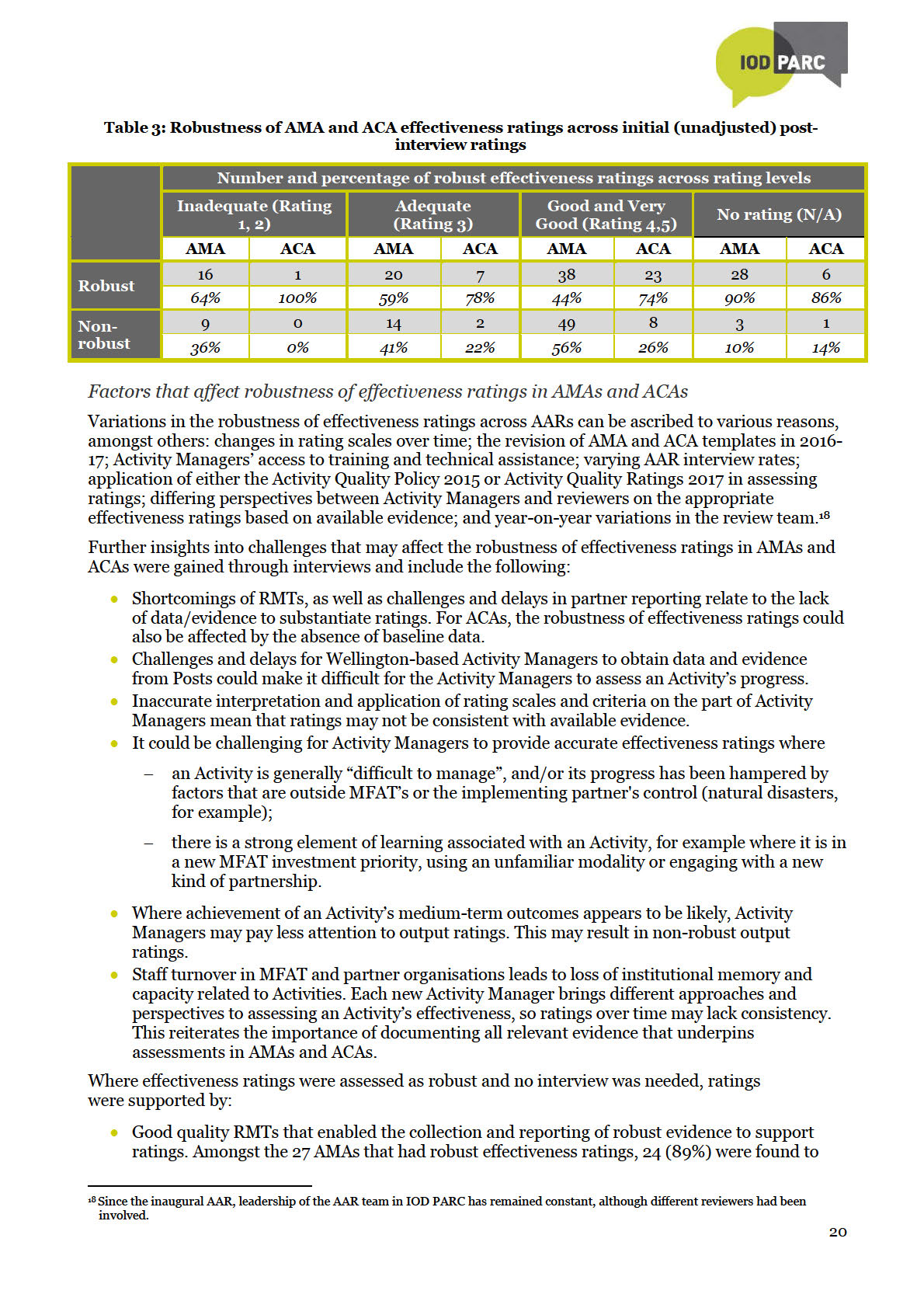

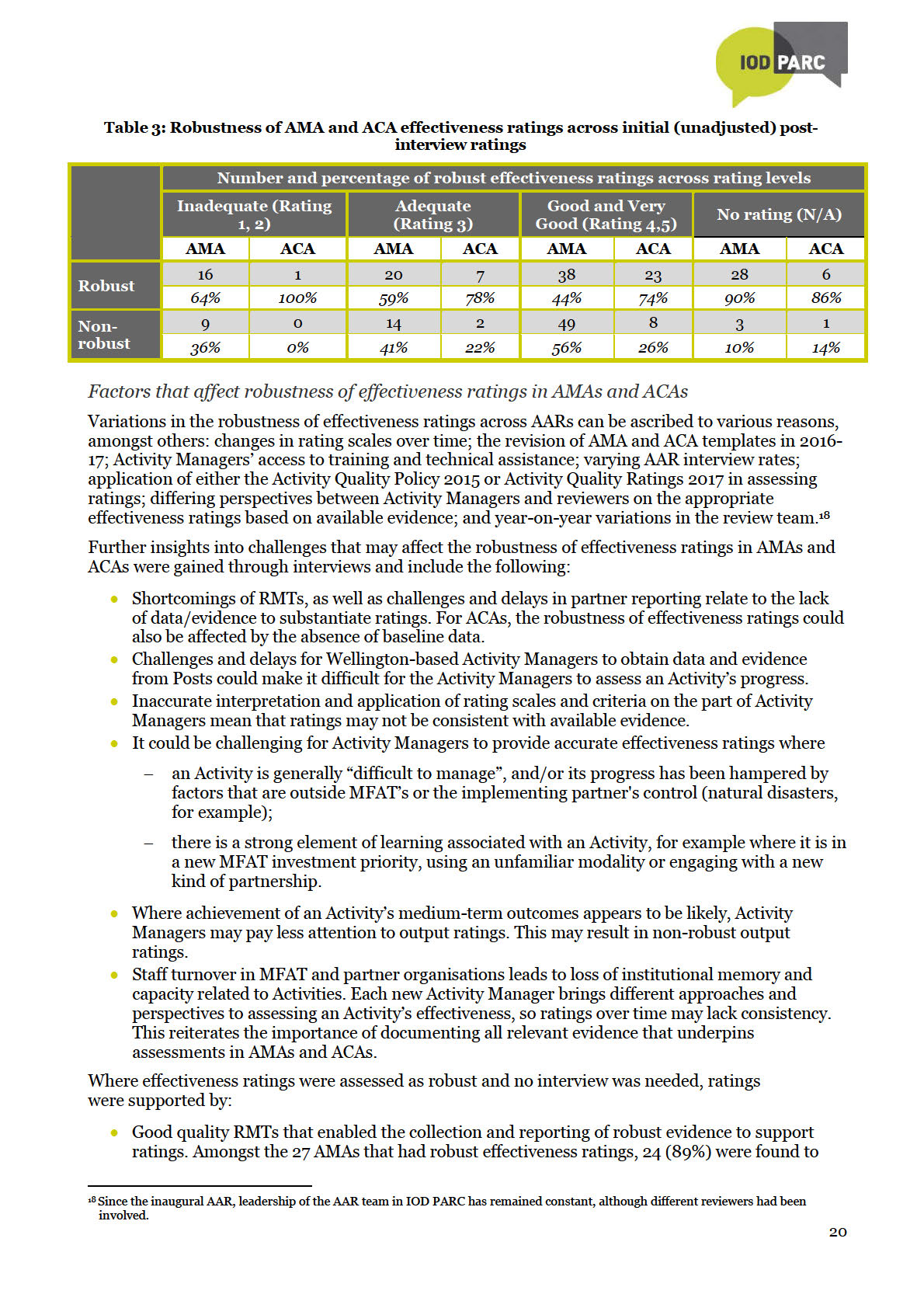

In both AMAs and ACAs, effectiveness ratings of 1 and 2 (inadequate ratings) are more robust

than ratings of 4 and 5 (good and very good ratings). This suggests that Activity Managers

may be inclined to over-rate effectiveness of Activities, which is consistent with all previous AARs.

As in previous AARs, robustness of effectiveness ratings for both AMAs and ACAs increased

substantially after interviewing. The main reason is that Activity Managers may not document all the

evidence that informs ratings in the AMA or ACA. Contextual information and understanding are

major factors that influence Activity Managers’ ratings, but these are often not explained in AMAs and

ACAs. Activity Managers may assess an Activity’s progress and performance with due consideration

of challenges in the implementing context, but do not always document this in AMAs and ACAs.

Therefore, many AMAs and ACAs still do not capture the evidence that supports effectiveness ratings

in sufficient detail to provide stand-alone records of an Activity’s effectiveness.

Quality of Results Measurement Tables and influence on Effectiveness Ratings

Based on references to Results Measurement Tables (RMTs) as sources of evidence for completing

AMAs and ACAs, Activity Managers are increasingly relying on RMTs to monitor and report

Activities’ progress. Ratings were often substantiated by references to movements in RMT indicators,

or progress in relation to baselines and targets. An increasing number of Activity Managers are also

proposing appropriate actions to address identified shortcomings of RMTs.

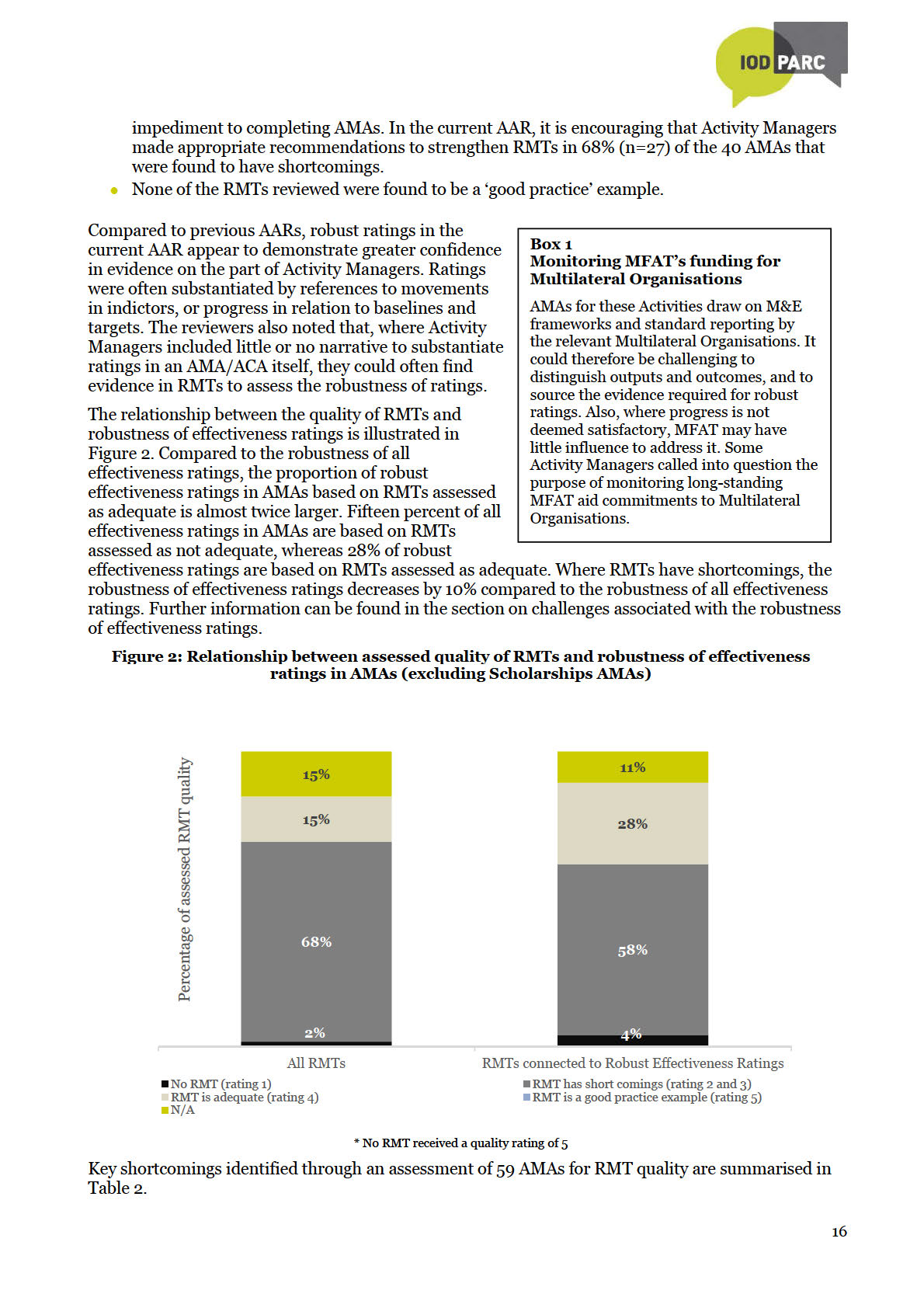

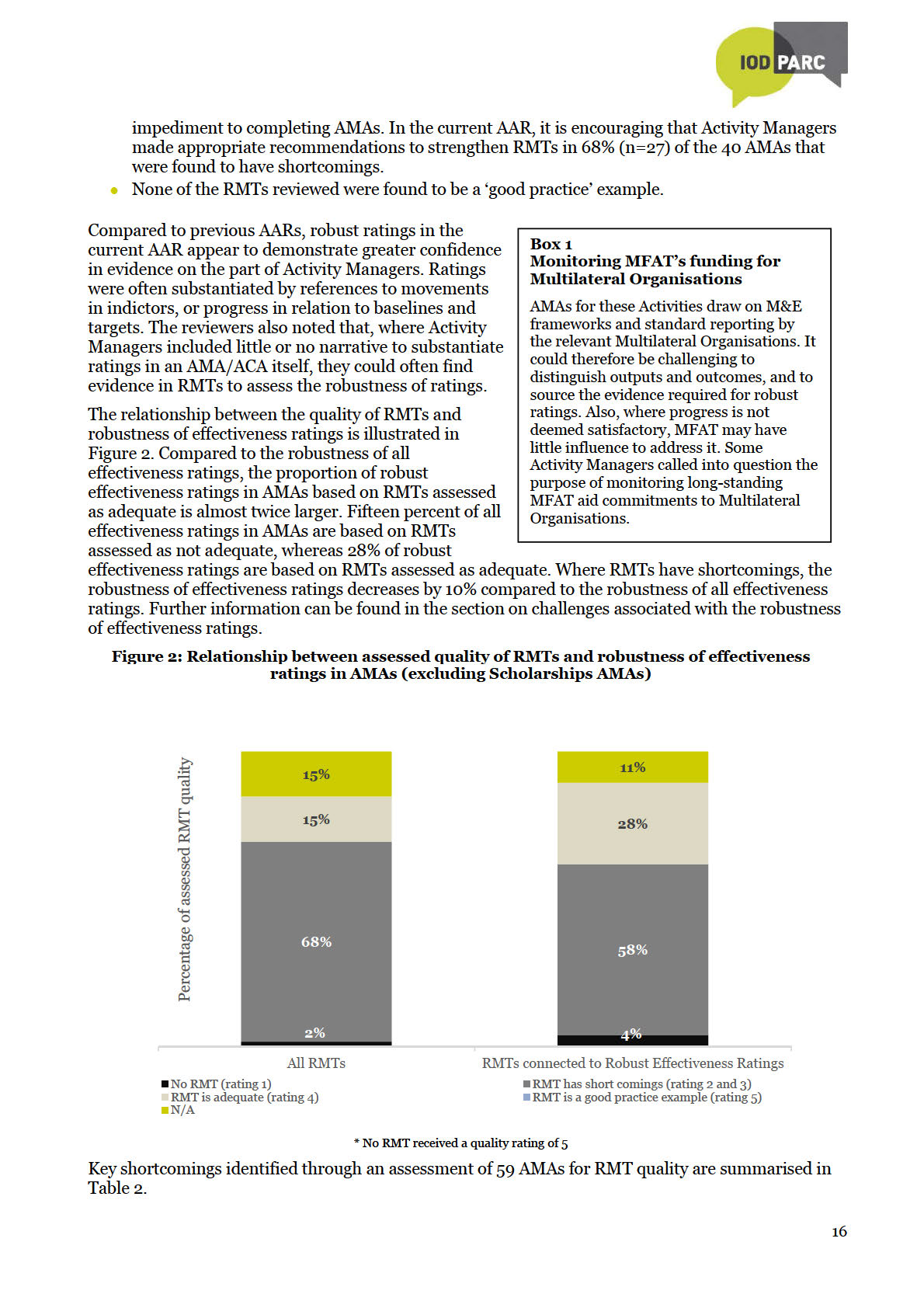

However, RMT shortcomings continue to impede the quality of AMAs and ACAs. In the current AAR,

15% of AMAs (n=9) were based on RMTs assessed as adequate, compared to 68% (n=40) where

RMTs were found to have shortcomings. Many RMTs are not updated regularly and may not reflect

the evolving reality of dynamic Activities. Other major shortcomings of RMTs include the absence of

baselines, targets and data to monitor and report progress. It appears that completing AMAs and

ACAs for complex Activities, for example multi-country and multi-donor Activities, as well as

Activities funded through budget support, could be challenging because RMTs for these Activities

are not straightforward. Per MFAT guidelines, AMAs for funding to Multilateral Organisations draw

on the Strategic Plans and annual reports of the organisations themselves. Their annual reporting is

therefore not based on an MFAT RMT and does not provide a clear-cut fit with AMA assessment

criteria.

Robustness of other DAC Criteria ratings in ACAs

MFAT guidelines for the assessment of the DAC criteria of relevance, efficiency, impact and

sustainability were revised in 2017 and incorporated in AMA and ACA templates. However, the

reviewers based their assessment of the robustness of ratings on outdated guidelines in Annex 1 of

the 2015 Activity Quality Policy (AQP). With this limitation in mind, the robustness of DAC criteria

ratings in ACAs has predominantly improved compared to previous AARs but remains below the

75% confidence threshold. More specifically:

5 Assessment of statistical significance is limited by availability of detailed data from the inaugural AAR and the adjustment approach

applied in 2017/18.

6 Assessment of statistical significance is limited by availability of detailed data from the inaugural AAR and the adjustment approach

applied in 2017/18.

the robustness of relevance, efficiency and sustainability ratings has improved, following

almost consistent decreases over the previous three AARs.

the robustness of impact ratings is higher compared to the first two AARs but has decreased

slightly compared to the previous AAR. A key challenge in assessing an Activity’s impact at

completion appears to be the absence of baseline data.

Bearing in mind that ratings of these criteria were not discussed during interviews, and

despite encouraging increases in robustness of sustainability and impact ratings, confidence in

these ratings would still be somewhat lacking.

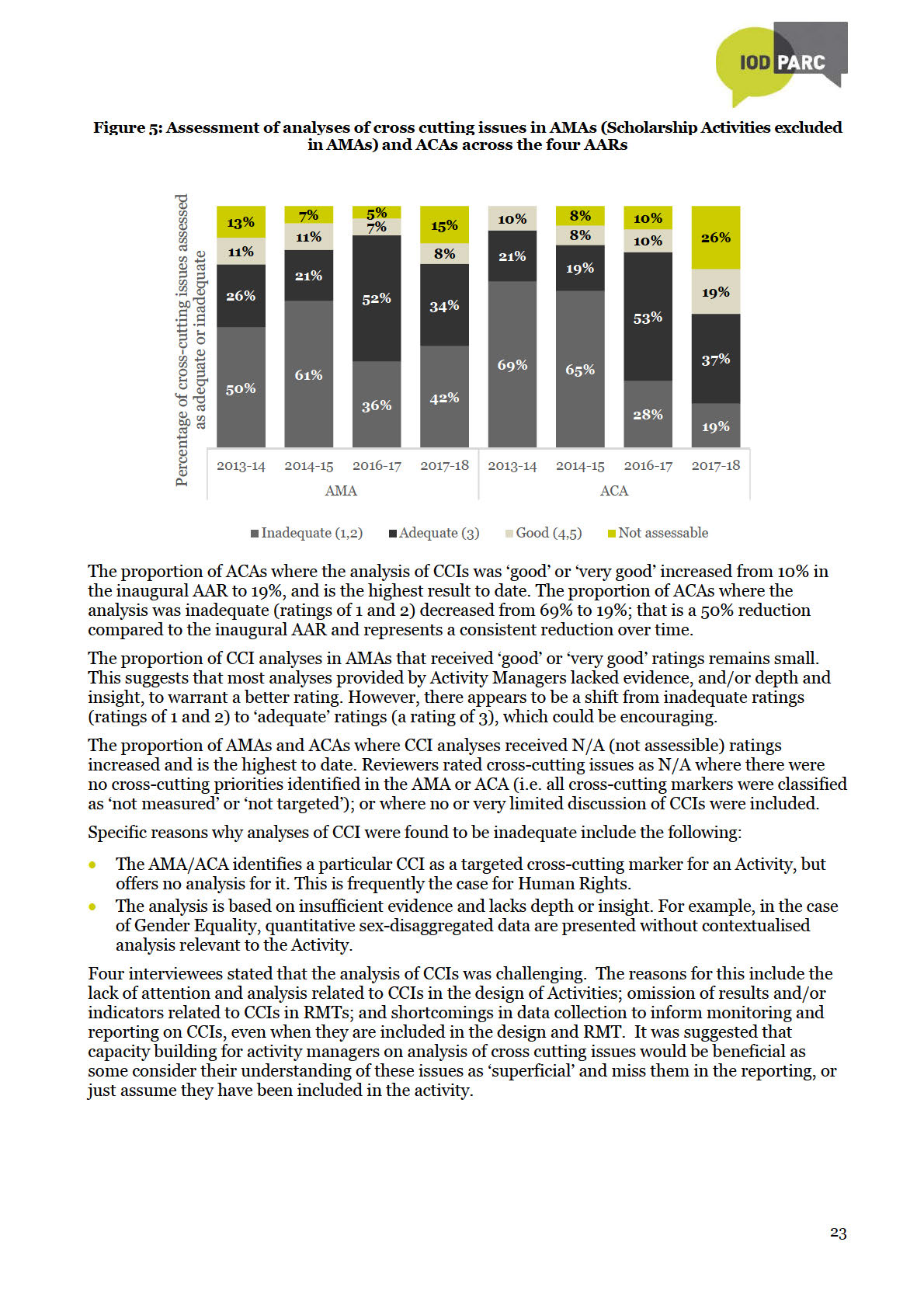

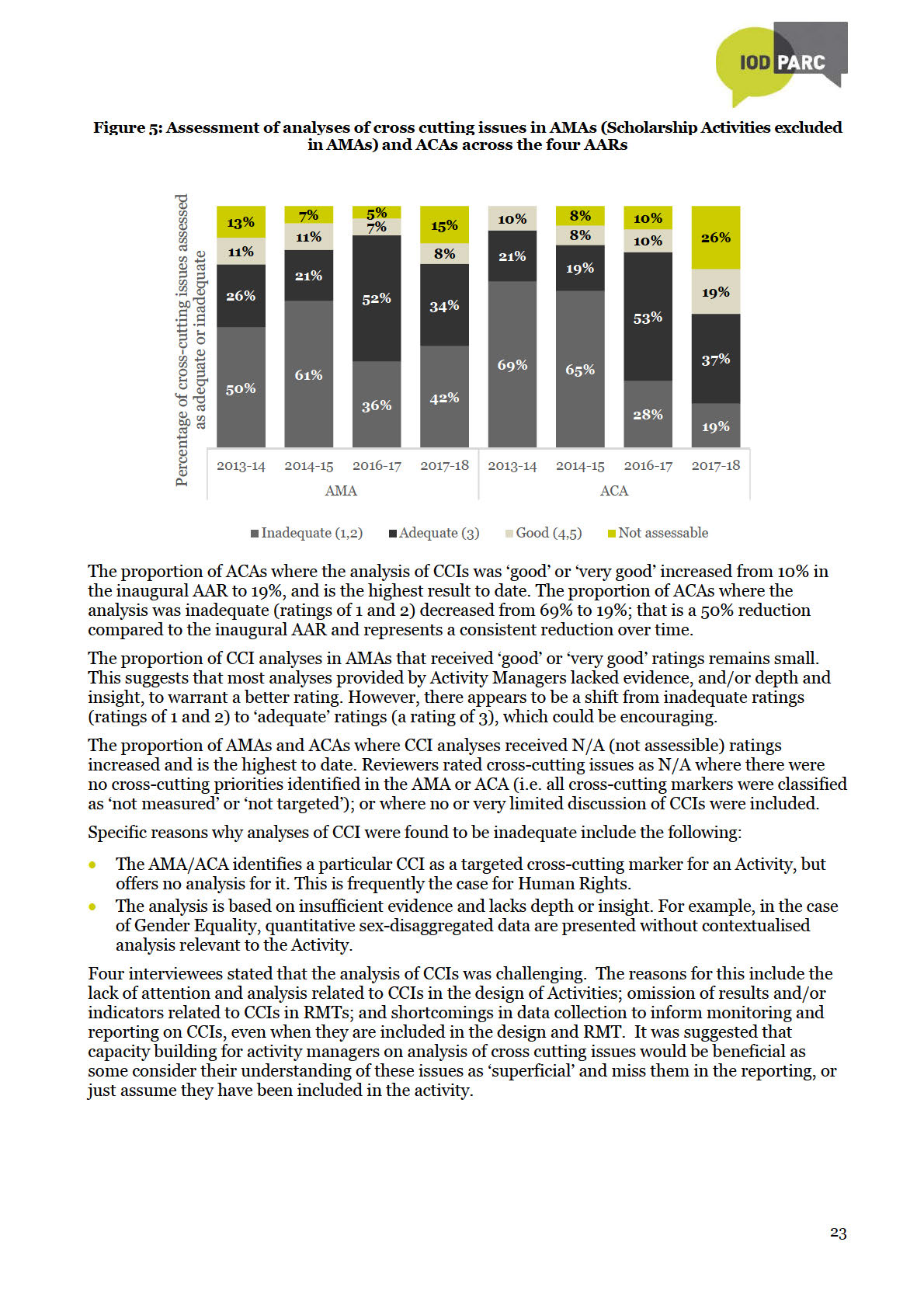

Cross-Cutting Issues

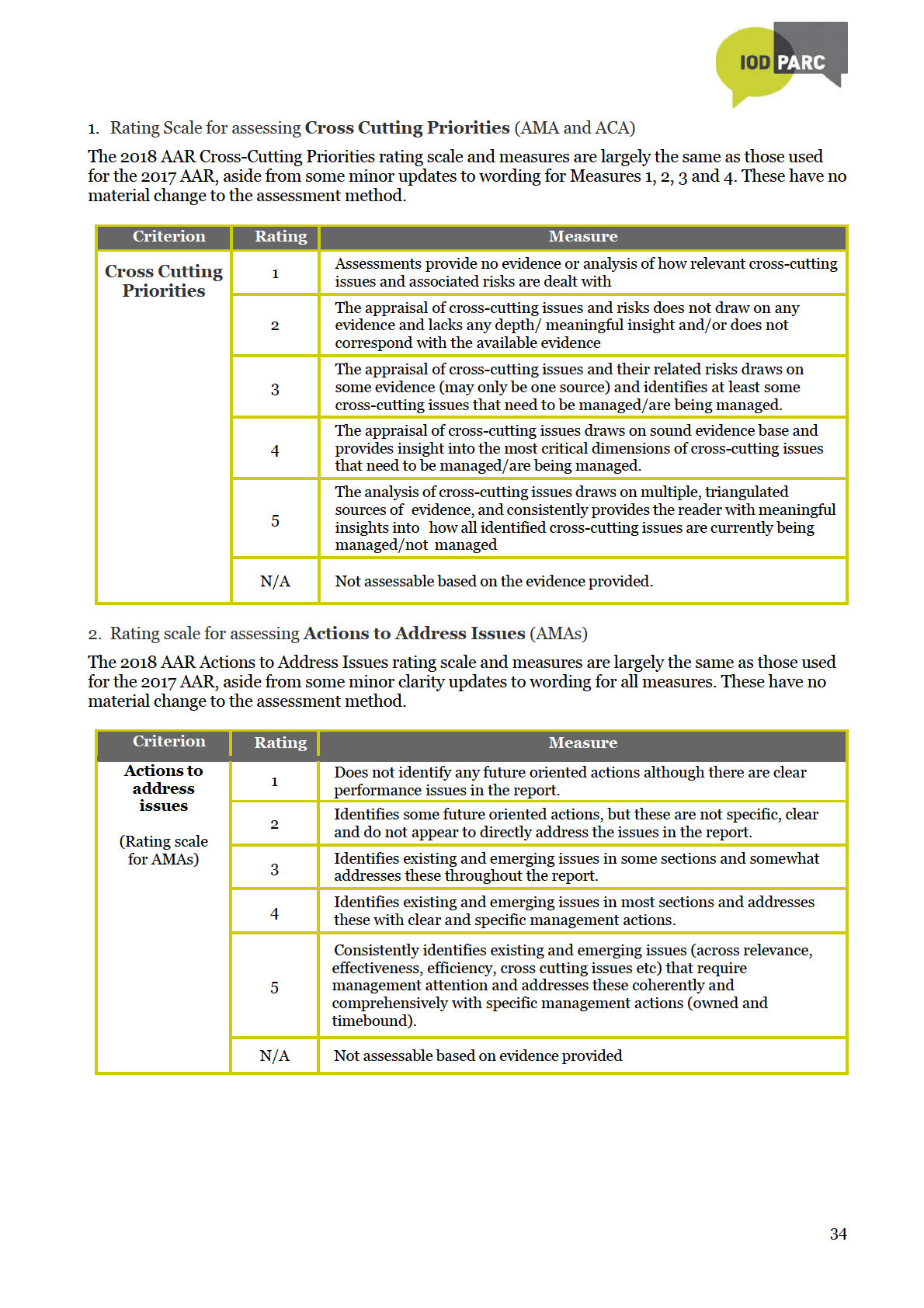

While the assessment of Cross-Cutting Issues (CCIs) remains a challenging aspect of AMAs and

ACAs, it is encouraging that the proportion of ACAs containing ‘adequate’ or better analyses of CCIs

has increased substantially over time. However, the proportion of CCI analyses in AMAs that

received ‘good’ or ‘very good’ ratings remains small and has decreased since the previous AAR. This

suggests that analyses provided by Activity Managers may still lack evidence, and/or depth and

insight, to warrant high ratings.

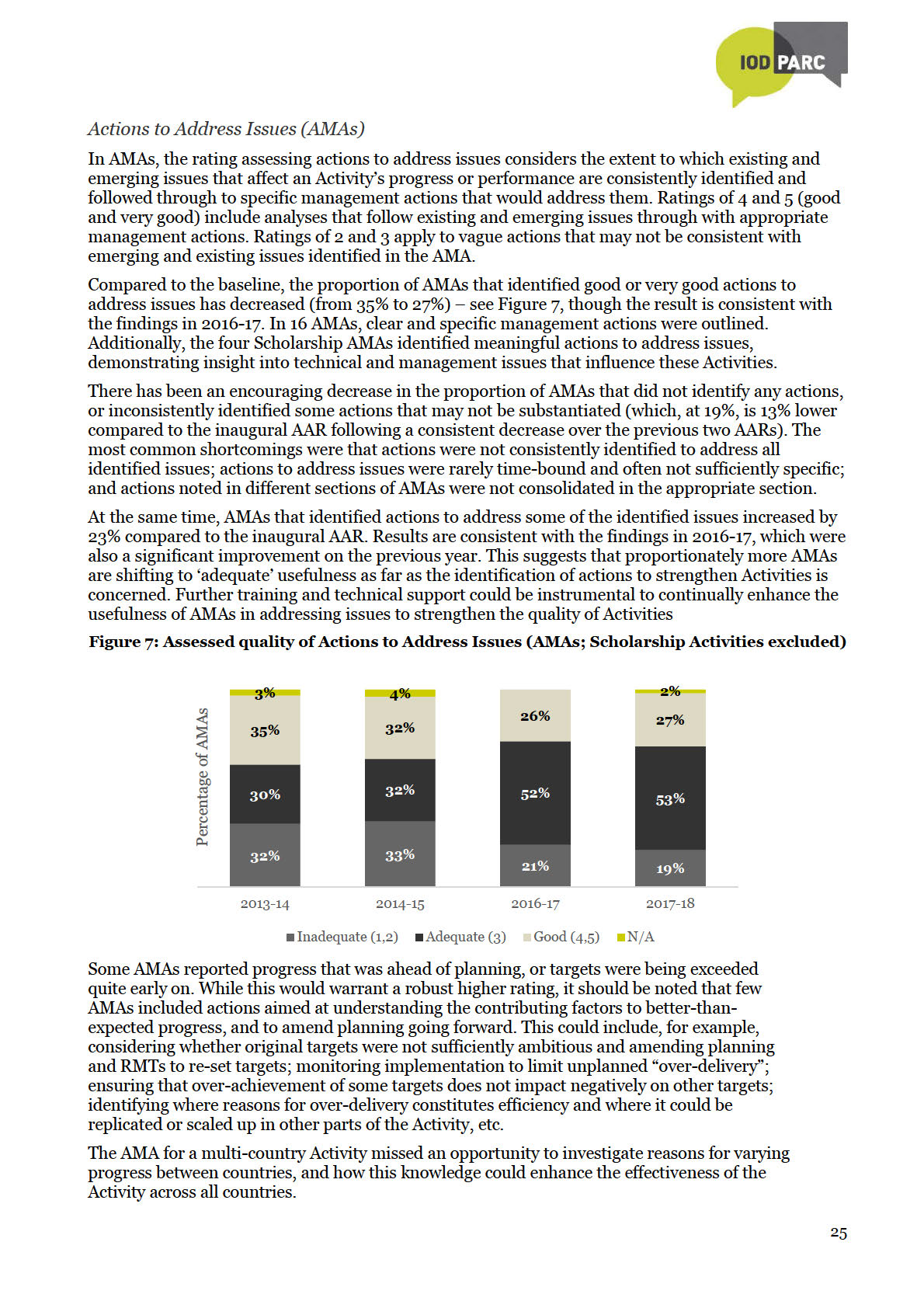

Actions to Address Issues (AMAs) and Lessons Learned (ACAs)

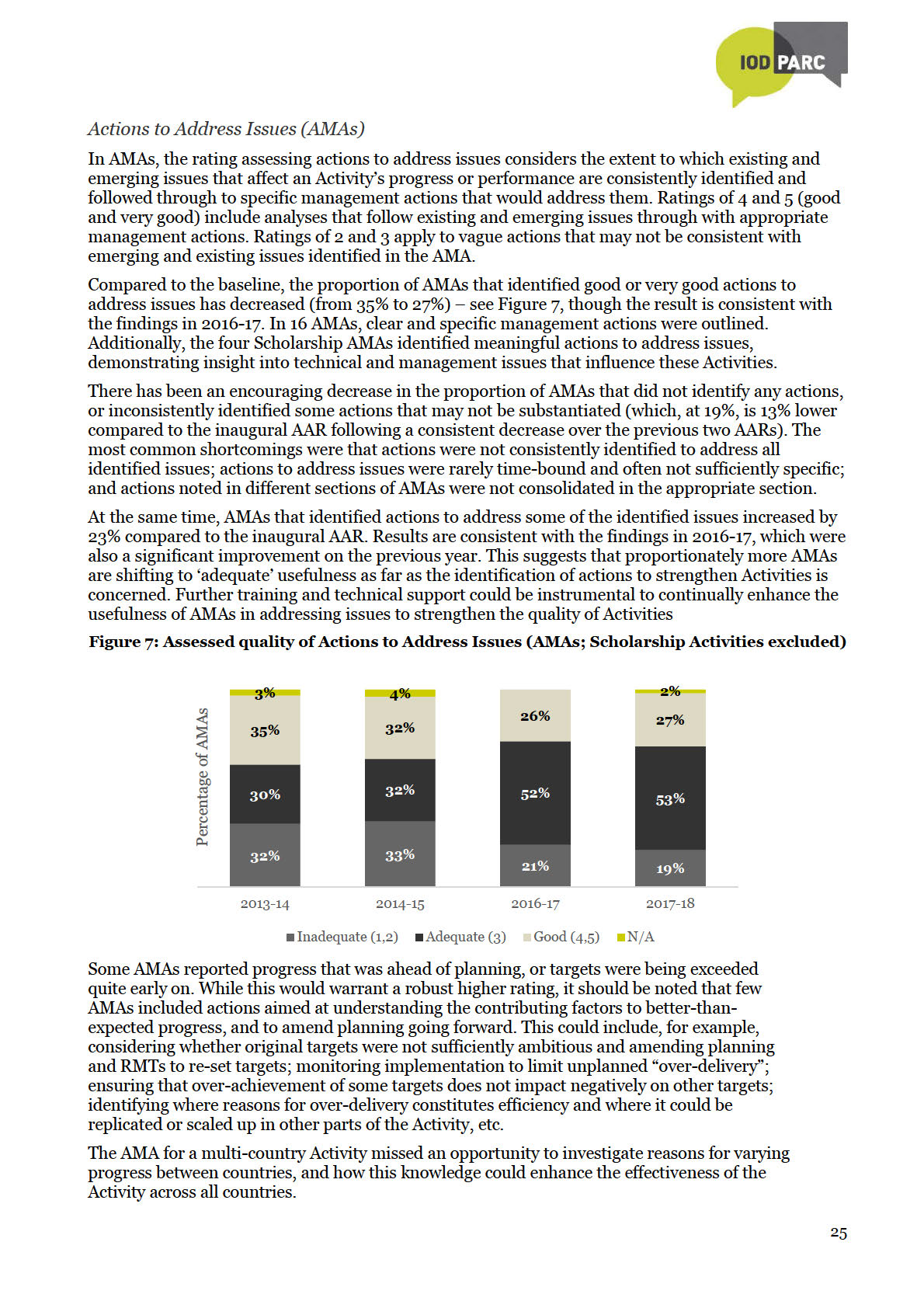

In AMAs, the overall quality of proposed actions to enhance Activities’ performance has increased

over successive review periods. Compared to the inaugural AAR, the proportion of AMAs that

identified lessons assessed as inadequate decreased by 13%. At the same time, the proportion of

lessons assessed as ‘good’ or ‘very good’ has also decreased slightly (8% from baseline), suggesting

that actions to strengthen on-going Activities are pooling in the ‘adequate’ category.

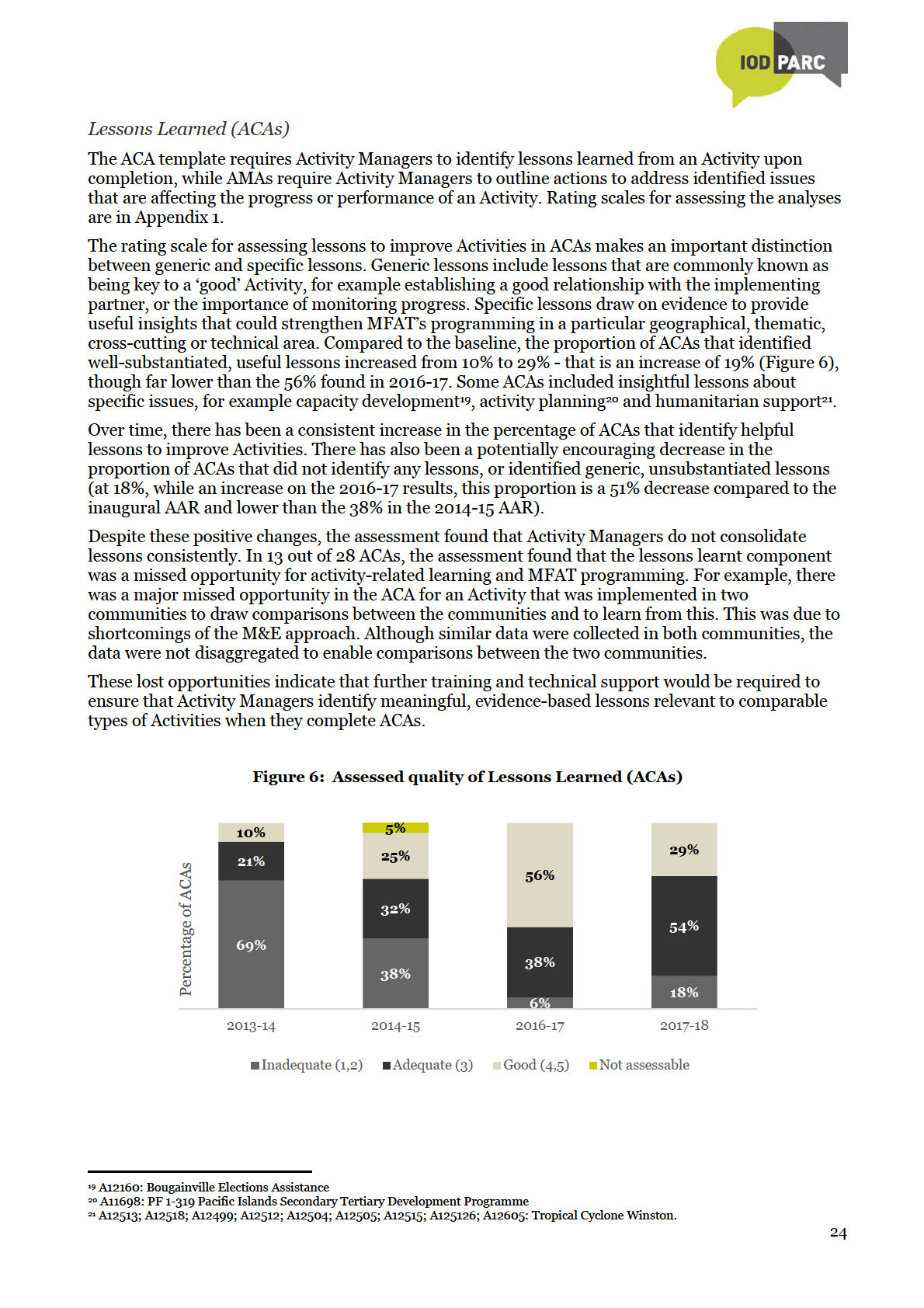

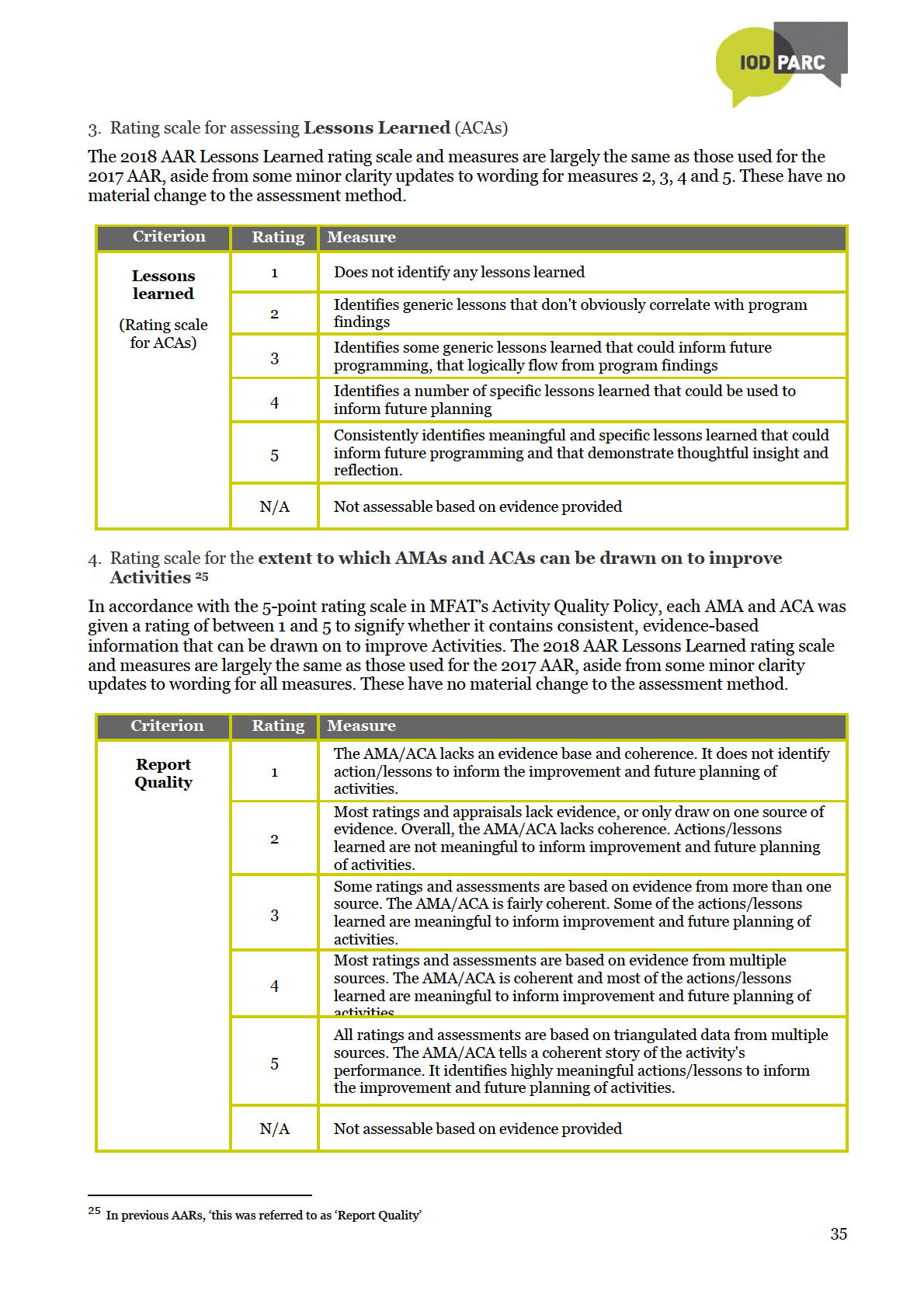

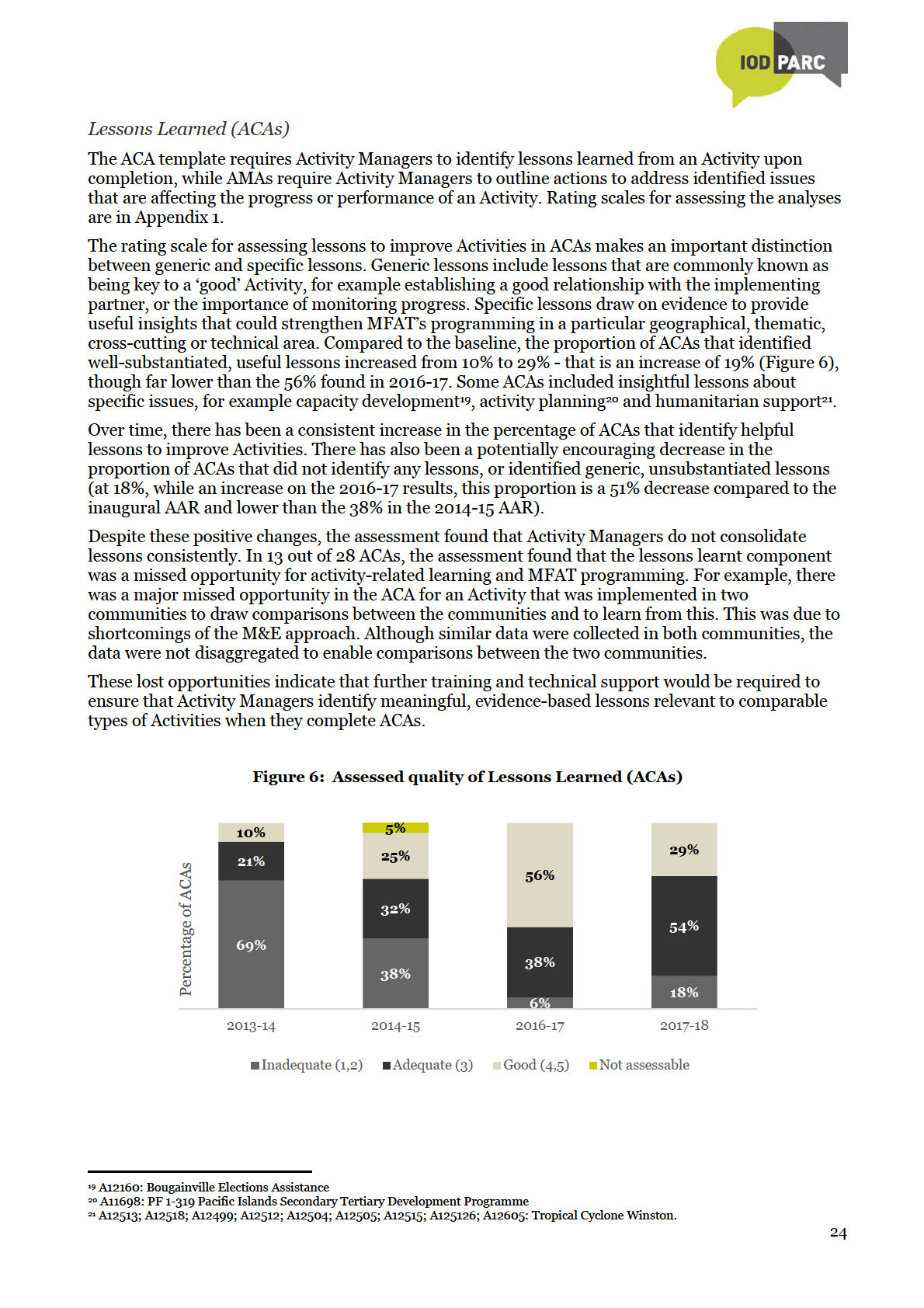

In ACAs, lessons to inform future Activities remain of a relatively high quality – 82% of ACAs

identified meaningful, useful lessons to strengthen future Activities. This is 19% more than in the

inaugural AAR, but 14% less than in the 2016-17 AAR. It is very encouraging that the proportion of ACAs

that did not identify any lessons, or identified generic, unsubstantiated lessons, has decreased by

51% compared to the inaugural AAR.

Drawing on AMAs and ACAs to improve Activities

The extent to which AMAs and ACAs can be used to improve Activities considers whether they

articulate a plausible, evidence-based story of an Activity’s progress and performance, and then

identify key issues and helpful recommendations/lessons to strengthen the Activity (or Activities in

general) going forward. The assessment is based on different criteria to the effectiveness ratings and

therefore results may diverge.

Despite some missed opportunities to identify actions or lessons that could improve Activities, MFAT

can be cautiously confident that both AMAs and ACAs can be drawn on to improve Activities.

Qualitative elements in the majority of AMAs and ACAs provide insightful assessments across a range

of issues, and they identify meaningful actions and lessons to inform activity improvement.

The proportion of AMAs and ACAs that cannot be drawn on to improve activities has

decreased steadily, but so has the proportion that are considered highly informative. This

has resulted in a net increase in the proportion that are considered ‘adequate’ in documenting

information that can be drawn on to improve Activities. Therefore, most AMAs and ACAs

contain helpful information that can be drawn on to improve Activities.

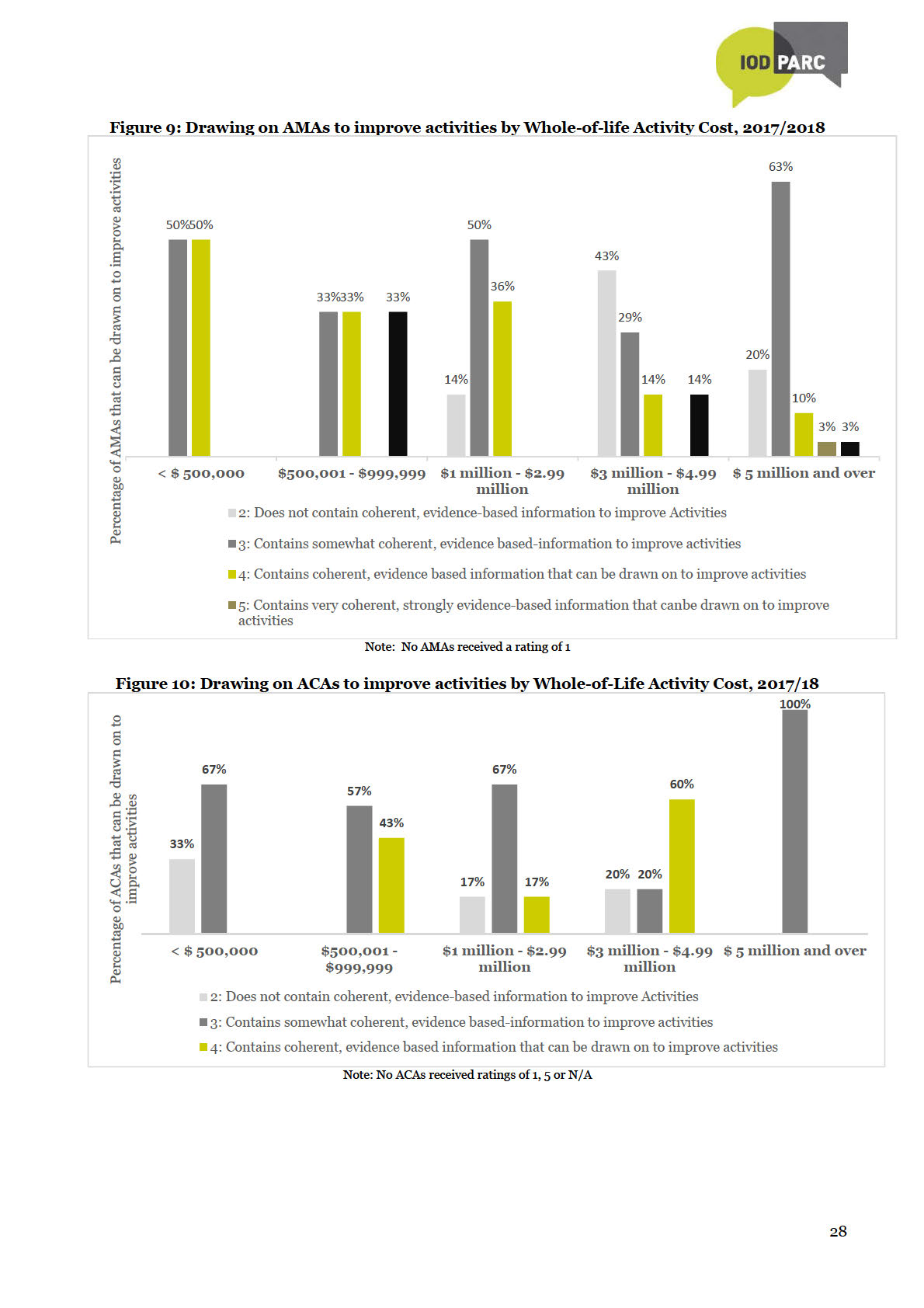

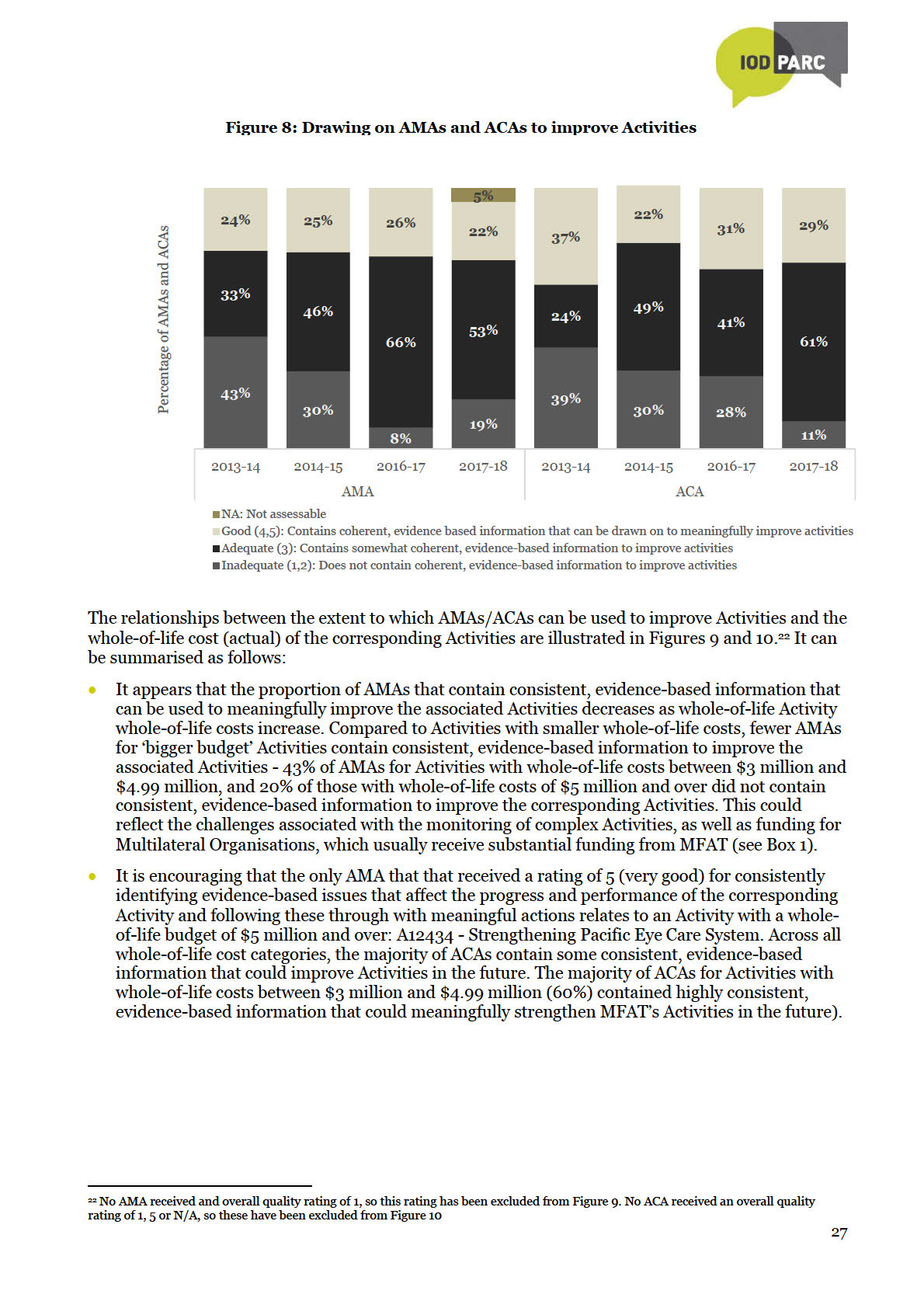

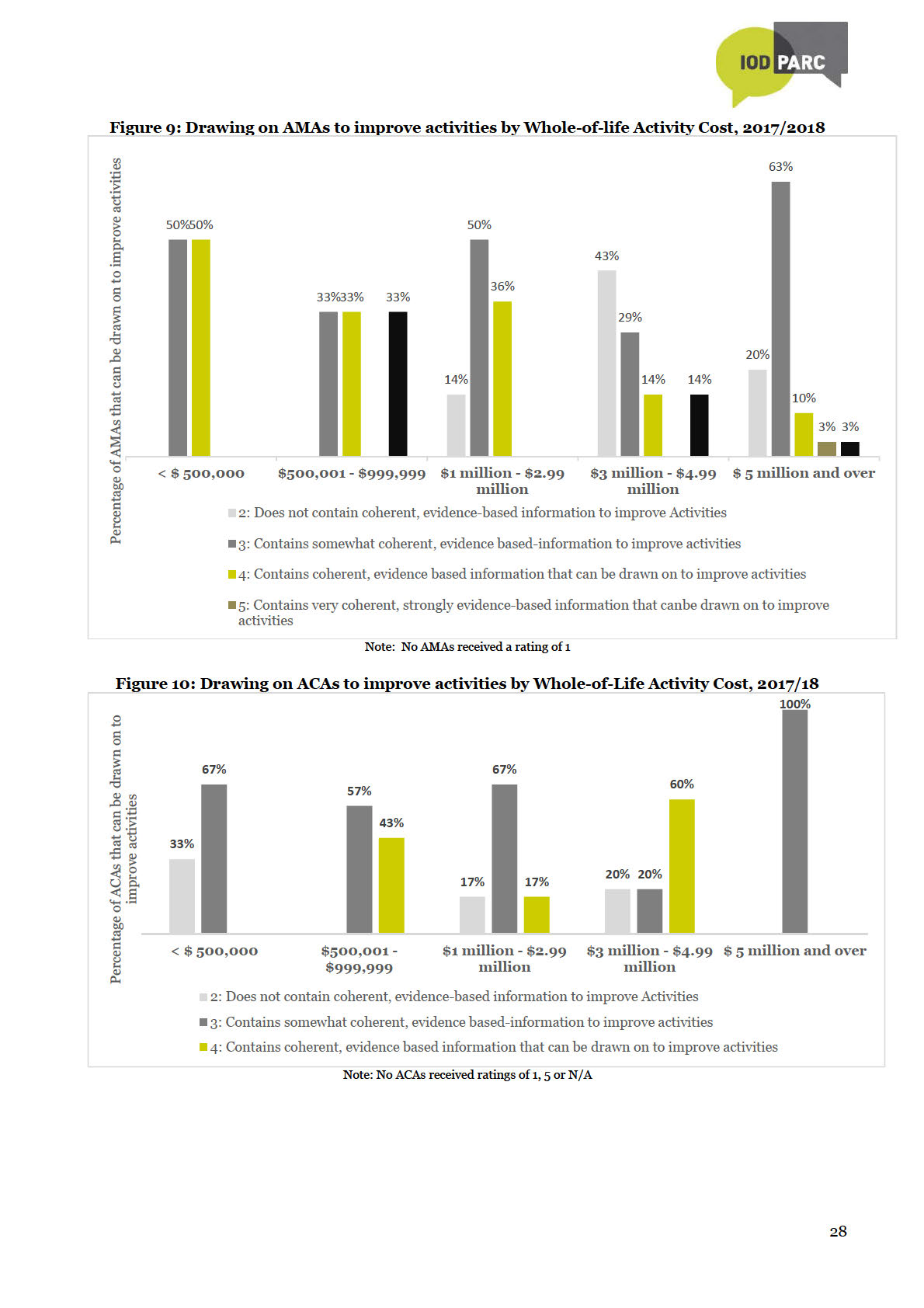

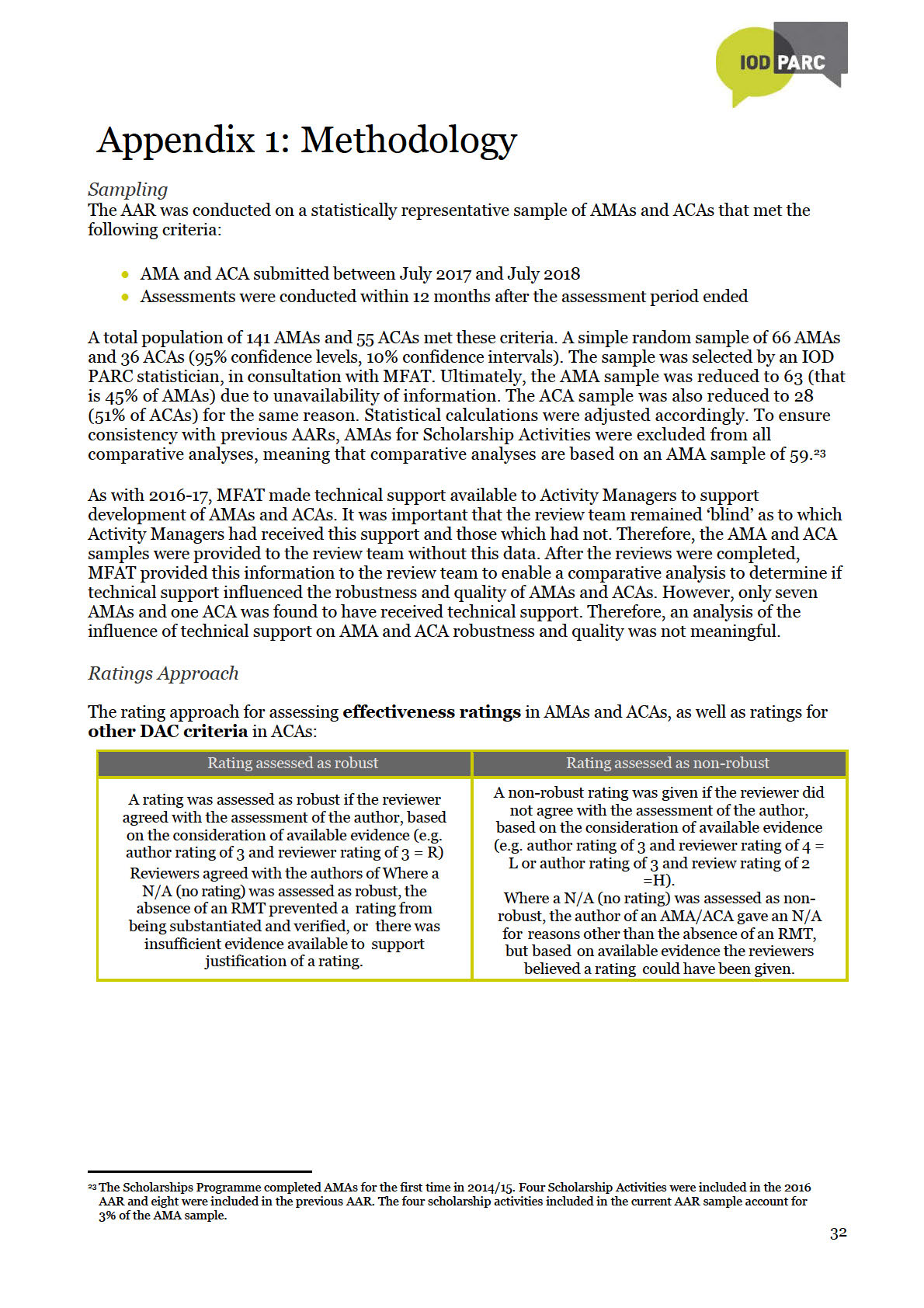

A relatively large proportion of AMAs (20%, or six AMAs) for Activities with large whole-of-

life costs ($5 million and more) did not provide helpful information to improve the

associated Activities. This can be ascribed partly to the fact that most complex Activities, as

well as funding of Multilateral Organisations, have large budgets but there is limited scope

for proposing ways within MFAT’s control to strengthen them.

Conclusions

1. Despite improvements in qualitative aspects of reporting, AMAs and ACAs still do not provide

sufficiently comprehensive or stand-alone records of Activities’ progress. As in previous AARs,

Activity Managers draw on evidence from a range of sources to assess the effectiveness of

Activities but tend not to document all this evidence in AMAs and ACAs. If the evidence is not

comprehensively documented, the loss of institutional knowledge leaves substantial gaps,

especially where staff turnover is high. When these gaps build up year-on-year, new Activity

Managers might find it challenging to complete insightful AMAs and ACAs, thereby jeopardising

the robustness of AMAs and ACAs in the longer term.

2. Despite remaining challenges around the robustness of effectiveness ratings, AMAs and ACAs

generally include helpful information to improve Activities. Providing helpful information to

improve complex Activities, which often have high whole-of-life costs, is challenging since ways

to improve these Activities may not be within MFAT’s full control.

3. So far, AARs have not revealed major statistically significant results related to AMA and ACA

improvement over time. However, due to contextual factors such as the capacity and capability of

Activity Managers, incentives, etc. in an environment of relatively high staff turnover, MFAT

does not expect to see statistically significant linear improvements over time.

Recommendations

1. AMAs and ACAs should remain as essential building blocks of the Aid Programme’s performance

management system. Activity Managers use AMAs and ACAs to reflect and assess the progress,

performance and challenges of Activities and they serve as important repositories of institutional

memory and continuity during Activity implementation. Increasingly insightful and usable

lessons and actions to address issues, if harnessed through a robust knowledge management

system, could also prove valuable in strengthening Activities.

2. Ongoing training and technical support would be important to ensure that gains made in the

robustness and usefulness of AMAs and ACAs are maintained and enhanced. Gradual

improvements are becoming evident, but it would be important to address known challenges and

strengthen capacity to maintain this positive momentum and to prevent the gains made from

being lost.

Continue to provide training and guidance for Activity Managers in Wellington and at Post

(including locally-engaged staff) to ensure that they understand why and how to document the

evidence base for AMAs and ACAs fully, yet concisely, to increase the proportion of AMAs and

ACAs that provide stand-alone records of Activities’ progress and performance. This would be

instrumental in lifting the robustness and usefulness of AMAs and ACAs (and therefore their value

as essential building blocks of the Aid Programme’s performance management system) to a

higher level.

Training and support in the following priority areas could be considered:

Documenting consolidated evidence from several sources to justify effectiveness and

DAC criteria ratings.

RMT quality and wider socialisation of RMTs as foundations of Activity design,

monitoring and reporting, as well as dynamic tools for Activity improvement.

MERL expert assistance to support regular reviewing and updating of RMTs to ensure

that they remain relevant and up-to-date.

Strengthening RMTs and monitoring of complicated and complex Activities, for example

multi-donor and multi-country Activities, as well as Activities funded through budget

support.

Improving consistency and coherence in AMAs and ACAs, including identifying issues

that affect the progress and performance of Activities, and following this through into

meaningful, evidence-based actions to improve on-going activities (in AMAs), or lessons

relevant to comparable types of Activities when they complete ACAs.

3. Given the size of MFAT’s funding to Multilateral Organisations and the unique arrangements

around their monitoring, it could be beneficial to tailor guidance for the AMAs of these Activities.

4. Provide support to Activity Managers to identify and perceptively address appropriate Activity

cross-cutting markers:

Where a cross-cutting marker is identified as relevant, it should be dealt with

consistently and perceptively throughout the design, monitoring and reporting of the

activity, including in AMAs and ACAs.

Avoid including cross-cutting markers that are not relevant to an Activity in its

AMA/ACA.

5. AARs should continue to be conducted on a periodic basis to monitor the effect of known

enablers and constraints to the robustness and usefulness of AMAs and ACAs, as well as to

identify emerging challenges and actions for their continuous improvement. A larger database

will also enable meaningful trend analyses of the robustness and usefulness of AMAs/ACAs

across different sectors, programmes and budget levels.

1. Introduction

Background

New Zealand’s Official Development Assistance (ODA) is delivered through Activities administered

by the Ministry of Foreign Affairs and Trade (MFAT). AMAs and ACAs are internal assessments of the

Activities’ performance and provide forward-looking actions and lessons to improve Activities. They

form the building blocks of the Aid Programme’s Performance System.

AMAs are completed annually for Activities expending over $250,000 per annum, or

smaller Activities with a high-risk profile. They rate and describe effectiveness of on-going

Activities, and provide a descriptive assessment of performance against relevance,

efficiency, sustainability, cross-cutting issues, risk, Activity management and actions to

address identified issues.

On completion of Activities with a total expenditure over $500,000, ACAs are completed,

ideally within one month of receiving the final Activity Completion Report from the

implementing partner. ACAs rate and provide a narrative assessment of an Activity’s

performance in relation to five Development Assistance Committee (DAC) criteria, namely

relevance, effectiveness, efficiency, impact and sustainability; they also comment and

provide analyses on cross-cutting issues, risk and Activity management, and identify lessons

to improve future Activities.

Completion rates for AMAs and ACAs have increased since 2013/14. The completion rate for 2014/15

AMAs and ACAs was 79%, which was an increase of almost 20% from 2013/14. The completion rate

for 2016/17 AMAs and ACAs was 76%. AMAs and ACAs from 2017/18 had the highest completion rate

ever, namely 88%.

During interviewing, eight (of 11) Activity Managers noted that revisions of AMA and ACA templates

over the last five years have made their completion easier, more useful and less time consuming.

Three Activity Managers stated that they had completed training and knew where to access support

if they required assistance with the completion of AMAs/ACAs. Two Activity Managers – one based

at Post and one in New Zealand – noted that some staff at Post were unaware of, or unable to access, the

same level of training/support that is offered to Wellington-based staff. Both stated that this could

be improved by increased promotion of available support at Post and including the information in

orientation training for locally-engaged staff, in particular.

Purpose of the AAR

For many Activities, AMAs and ACAs completed by Activity Managers are the only formal MFAT

assessment of their progress and performance. Aggregated ratings data from AMAs and ACAs provide

a snapshot of the performance of New Zealand’s ODA. It is important to have confidence in the

robustness of these ratings. AARs assess the robustness of Activity Managers’ ratings of Activities’

effectiveness. They also assess the overall usefulness of AMAs and ACAs, as well as the analysis of

cross-cutting issues, actions proposed to address issues (AMAs), and of lessons learnt (ACAs)

presented by Activity Managers.

The purpose of the AAR is to:

assess the level of confidence that MFAT can have in the robustness of AMAs and ACAs

inform the Insights, Monitoring & Evaluation team’s efforts to strengthen the Aid Programme’s

Performance System

provide input to the Aid Programme Strategic Results Framework.7

7 Level 3 of the New Zealand Aid Programme Strategic Results Framework incorporates the following indicators pertaining to the

quality of AMAs and ACAs:

2.3 : Percentage of AMAs and ACAs rated 3 or higher on a scale of 1-5 reviewed against quality standards

2.4 : Percentage of AMAs and ACAs rated 3 or higher on a scale of 1-5 reviewed against quality standards for Cross-cutting issues

This is the fourth Annual Assessment of Results (AAR) that provides an independent quality

assurance of AMAs and ACAs. It was conducted for AMAs and ACAs completed between July 2017

and July 2018.8 It describes findings from a sample of 66 AMAs and 36 ACAs, drawn from a total of

141 AMAs and 55 ACAs. Of those selected, 63 AMAs and 28 ACAs were eventually reviewed.9

Effectiveness ratings in 34 AMAs and 18 ACAs were found to be inconsistent with documented

evidence and 35 Activity Managers10 were identified for interviewing to clarify these ratings.

Interviews were conducted with 11 Activity Managers, covering eleven AMAs and six ACAs. This

constitutes an overall interview rate of 33%, which is much lower than in previous AARs, and

which is a major limitation of the current AAR.
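The interview figures in this paragraph can be cross-checked with a short calculation. The sketch below is illustrative only; the helper name `coverage` is hypothetical, and the reading that the 33% rate refers to assessments covered (rather than Activity Managers interviewed) is an assumption drawn from the numbers quoted above.

```python
def coverage(covered, flagged):
    """Share of flagged items that were followed up by interview."""
    return covered / flagged


# Figures quoted in the paragraph above.
flagged_assessments = 34 + 18   # AMAs + ACAs with inconsistent ratings
covered_assessments = 11 + 6    # AMAs + ACAs covered by interviews
flagged_managers = 35
interviewed_managers = 11

by_assessment = coverage(covered_assessments, flagged_assessments)
by_manager = coverage(interviewed_managers, flagged_managers)

print(f"Assessments covered: {by_assessment:.1%}")
print(f"Managers interviewed: {by_manager:.1%}")
```

Counting by assessment gives a share that rounds to the 33% stated in the report, while counting by Activity Manager gives a slightly lower share.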

Structure of the Report

The report starts with a brief overview of the AAR’s purpose (Section 1) and methodology, including

limitations (Section 2). Section 3.1 deals with the robustness of effectiveness ratings in AMAs and

ACAs, while Section 3.2 deals with the robustness of other rated criteria, namely relevance, efficiency,

sustainability and impact (ACAs only). Challenges to the robustness of effectiveness ratings are also

discussed, including the role of Results Measurement Tables (RMTs). Section 3.3 reflects on the

assessed quality of non-rated criteria, namely Actions to address Issues Identified (AMAs), Lessons

Learned (ACAs) and the overall usefulness of AMAs and ACAs. The analysis of Cross-Cutting Issues is

also discussed. The report ends with a summary of findings, conclusions and recommendations in Sections 4, 5 and 6

respectively.

2. Methodology

The methodology for the AAR was developed in consultation with MFAT and has been refined over

four successive AARs. A summary is provided here, with more detail in Appendix 1.

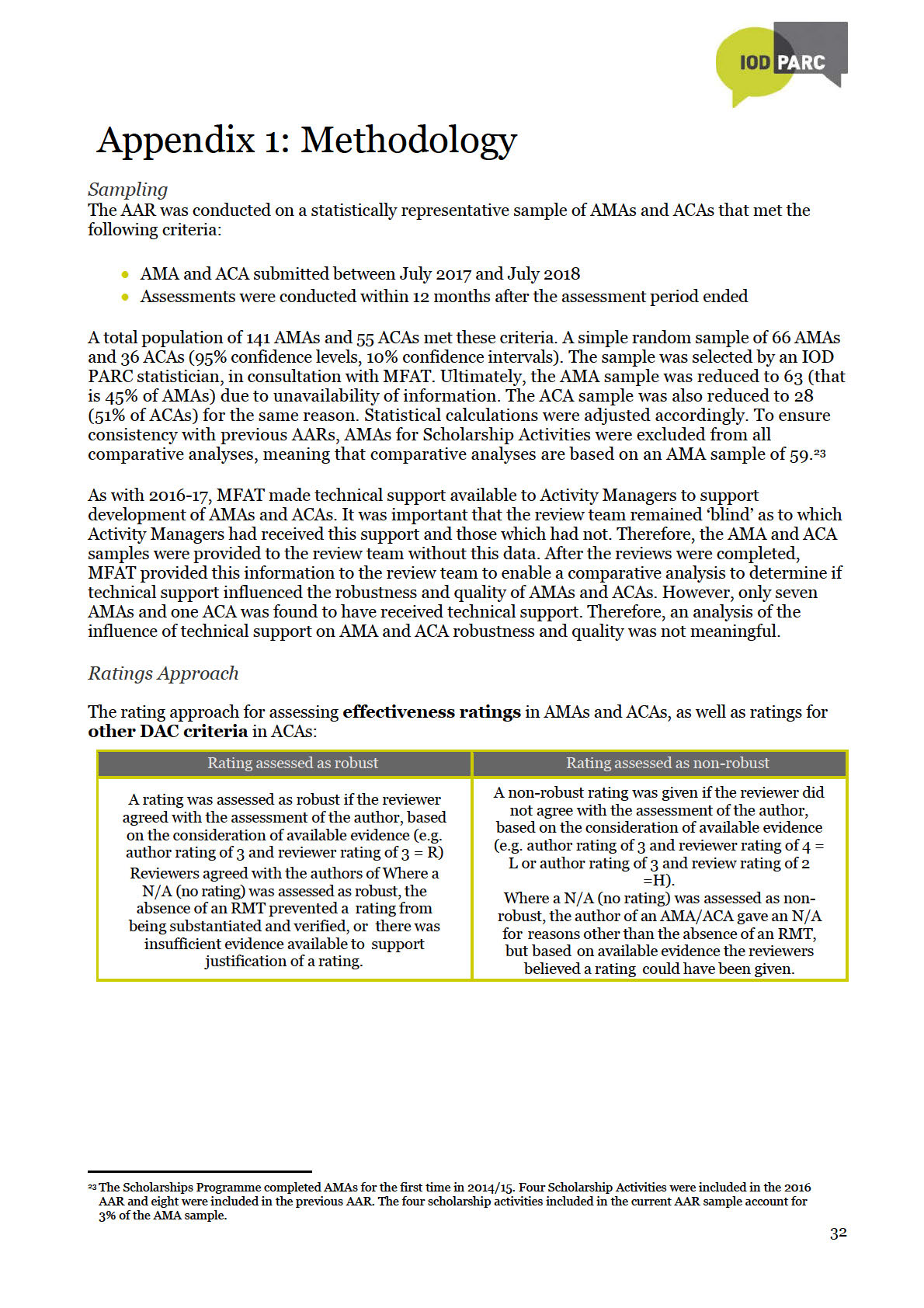

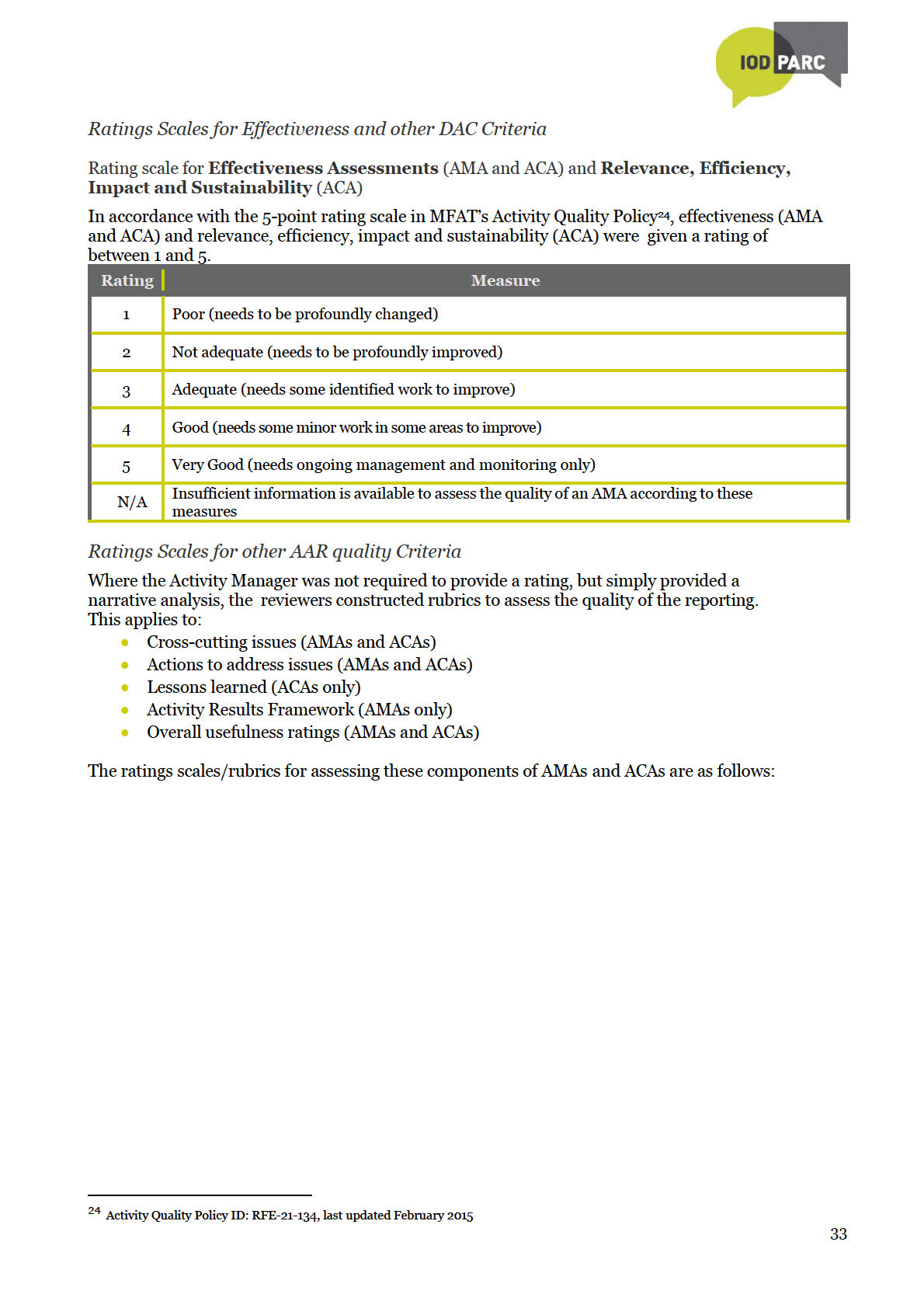

Sampling

The AAR is based on a statistically representative sample of AMAs and ACAs that met the following

criteria:

they were submitted between July 2017 and July 2018

they were completed within 12 months after the assessment period ended.

A total population of 141 AMAs and 55 ACAs met these criteria. A simple random sample of 66 AMAs

and 36 ACAs was drawn (95% confidence level, 10% confidence interval). Ultimately, the AMA sample was

reduced to 63 (that is 45% of AMAs) due to unavailability of information. To ensure consistency

with previous AARs, AMAs for Scholarship Activities were excluded from all comparative

analyses, meaning that comparative analyses are based on an AMA sample of 59.11 The ACA

sample was reduced to 28 (51% of ACAs) for the same reason. The distribution of the samples

according to sectors, programme categories and Whole-of-Life Budget Programme Approvals is

illustrated in Appendix 3.
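For readers who want to see how a sample size can follow from a confidence level and interval, the sketch below uses a common textbook approach (Cochran's formula with a finite population correction). The report does not state which formula was used, so this is an assumption, as are the function name `sample_size`, the reading of the 10% confidence interval as a margin of plus or minus 10 percentage points, and the conservative proportion of 0.5.

```python
import math
from statistics import NormalDist


def sample_size(population, confidence=0.95, margin=0.10, p=0.5):
    """Minimum simple random sample size for estimating a proportion.

    Assumes Cochran's formula with a finite population correction;
    p = 0.5 is the most conservative choice for the true proportion.
    """
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    # Sample size for an effectively infinite population.
    n0 = z**2 * p * (1 - p) / margin**2
    # Shrink the requirement for a small, finite population.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


for label, population in [("AMAs", 141), ("ACAs", 55)]:
    print(label, population, sample_size(population))
```

Under these assumptions the formula gives 36 for the population of 55 ACAs, which matches the ACA sample drawn. For the 141 AMAs it gives 58, fewer than the 66 drawn, so the AMA sample may reflect a different formula or a deliberate allowance for attrition.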

There were no major changes to the quality reporting system during the review period. The use of

revised AMA and ACA templates, which were introduced in June 2017, is well-established. Most

AMAs (91% of AMAs) and ACAs (75% of ACAs) were completed in the revised templates. Due to

the small number of AMAs and ACAs that were completed in the old templates, an analysis of the

effect of template revisions on the robustness of AMAs and ACAs would not be meaningful.12

8 The previous three AARs were the inaugural AAR, conducted in 2015 for AMAs and ACAs completed between July 2013 and July 2014;

the AAR conducted in 2016 for AMAs and ACAs completed between July 2014 and July 2015, and the AAR conducted in 2018 for AMAs

and ACAs completed between July 2016 and July 2017.

9 The main reason for the differences between selected and reviewed totals is that Activity-related documents could not be provided in time

to be included in the review.

10 Some Activity Managers were responsible for more than one AMA or ACA.

11 The Scholarships Programme completed AMAs for the first time in 2014/15. Four Scholarship Activities were included in the 2016

AAR and eight were included in the previous AAR. The four scholarship activities included in the current AAR sample account for

3% of the AMA sample.

12 Five AMAs and seven ACAs were completed using the old templates.

As in previous AARs, AMAs for Scholarship Activities are excluded from the main analysis because

the robustness of Scholarship AMAs has typically been significantly lower and has skewed overall

AMA robustness results. In previous AARs, AMAs were prepared for individual Scholarship

Activities. In the current AAR period, the approach to AMAs for the Scholarship Activities has been

revised to have a few integrated AMAs focused at a more programmatic level. Therefore, there was

an over-arching AMA prepared for the Scholarship programme, as well as integrated AMAs for all

Scholarship Activities at a country level. There was consistency of assessments and issues across the

four integrated Scholarship AMAs that were reviewed. Therefore, compared to previous AARs, the

influence of scholarship AMAs on the robustness of effectiveness ratings in the current AAR would

have been less evident, but for consistency Scholarship Activities are excluded.

Assessments

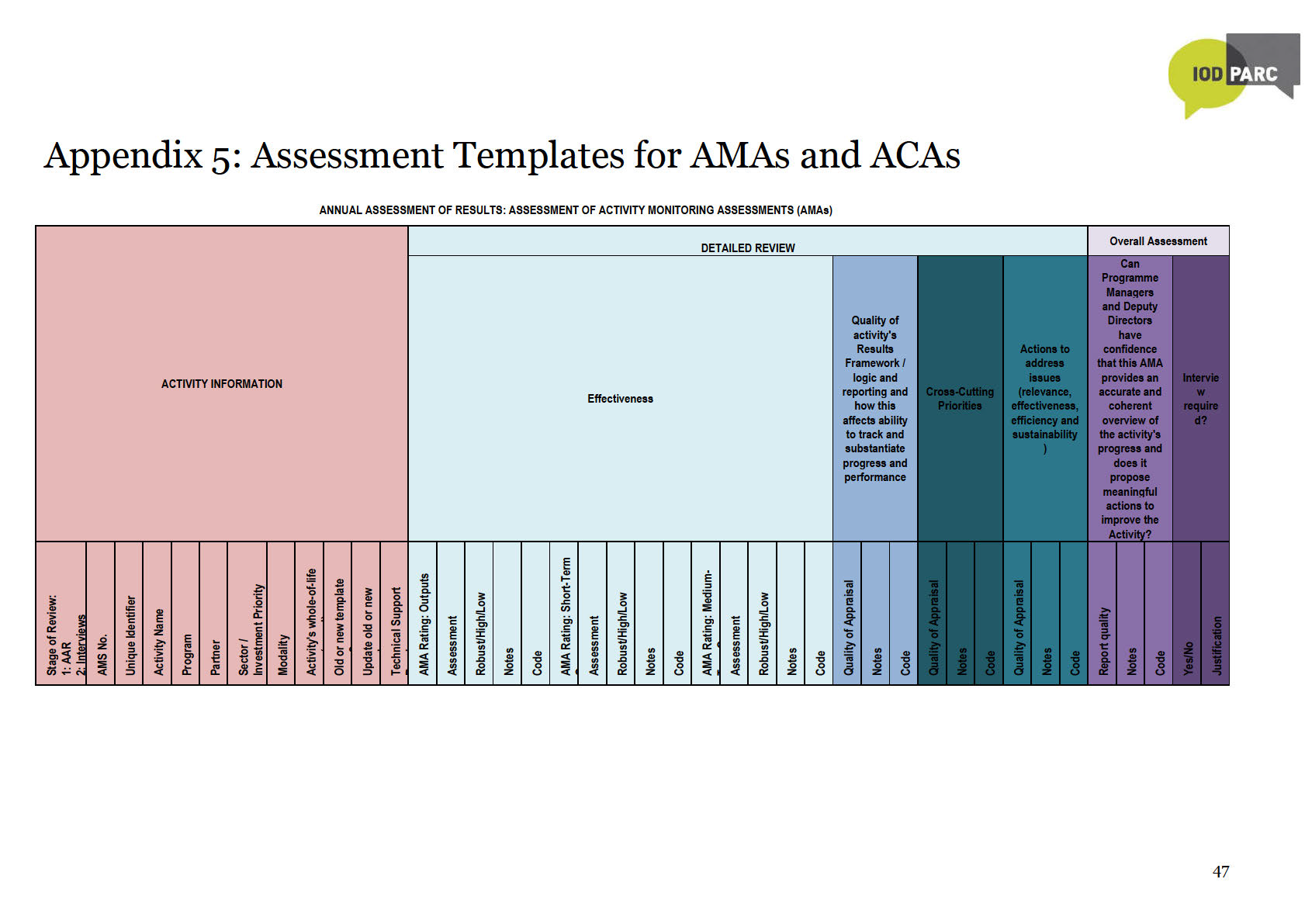

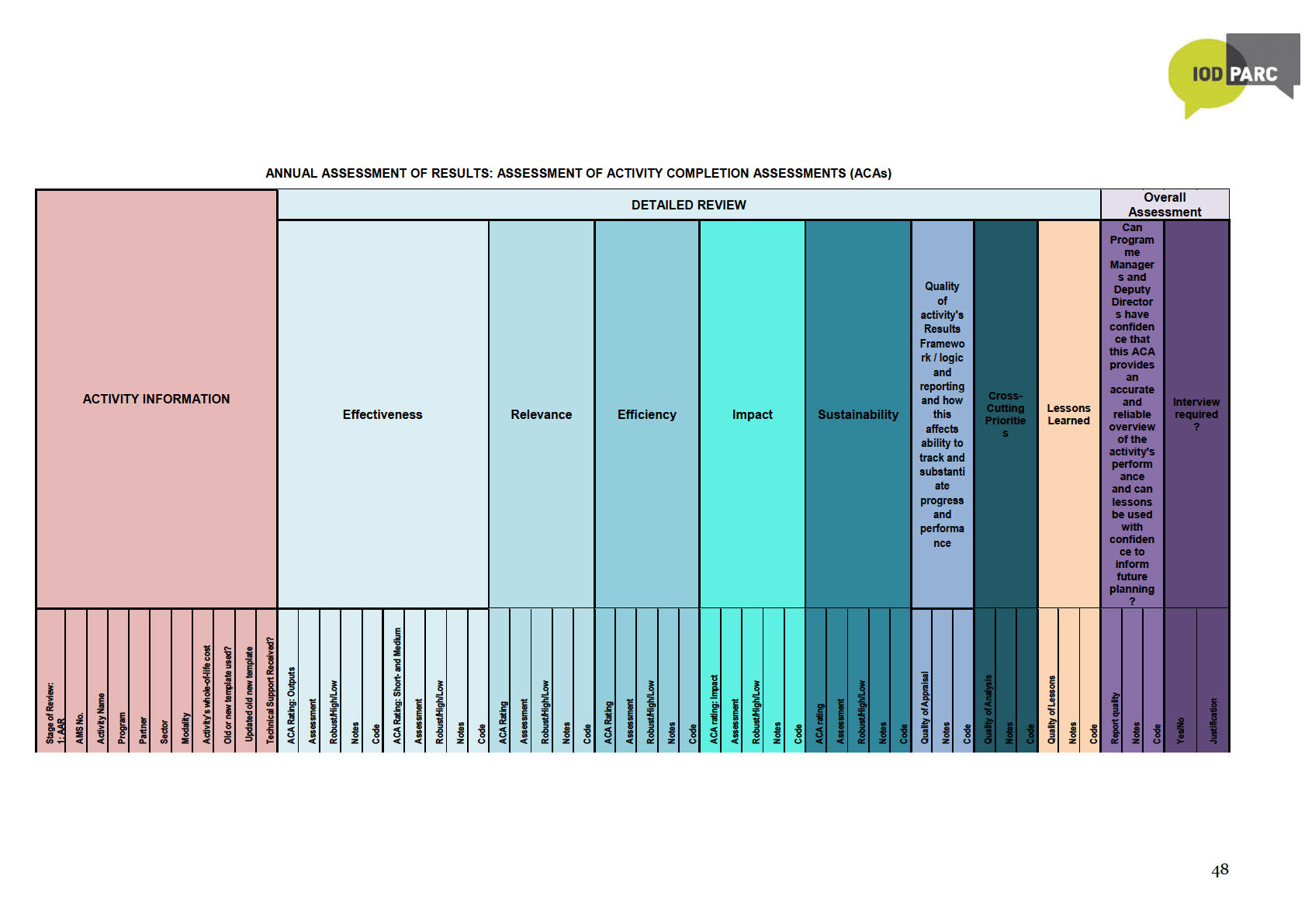

Standard assessment templates for AMAs and ACAs were developed for the inaugural AAR and

slightly amended over previous AARs without compromising comparability with the inaugural AAR.

For the current AAR, two revisions were made to templates:

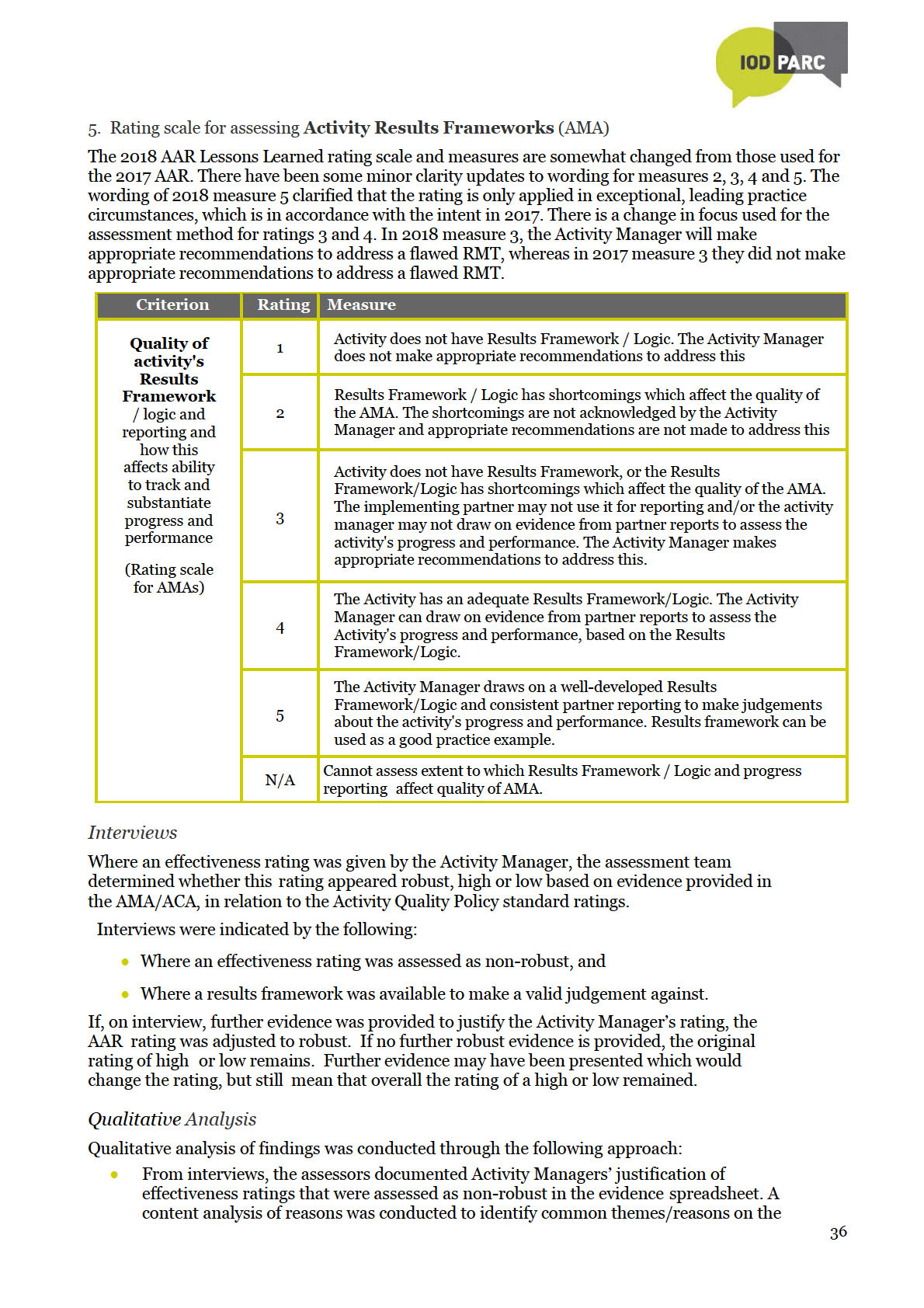

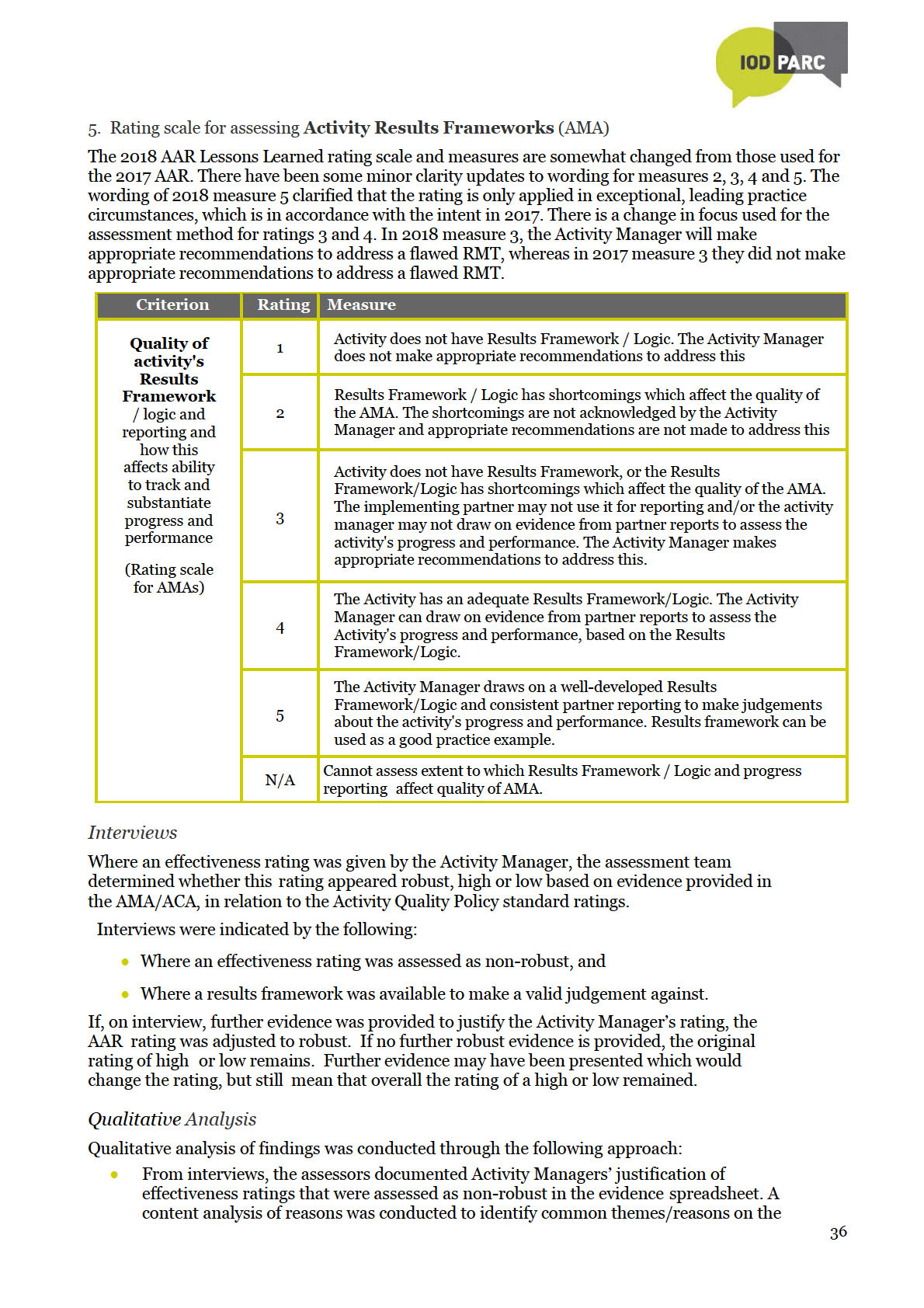

The rating scale for assessing RMTs in the AMA template was re-organised so that ratings

reflect a logical increase in quality from a rating of 1 to a rating of 5.

In previous AARs, an overall assessment of AMAs and ACAs focused on quality, based mainly

on the robustness of evidence that informed the completion of the AMA/ACA. In the current

AAR, the overall assessment considered the robustness of evidence, report coherence and the

extent to which this evidence was used to propose helpful actions or lessons to improve

Activities. It considers coherence between identified issues and proposed actions/lessons to

address these issues.

A desk review of the sampled AMAs and ACAs, as well as accompanying partner progress reports,

completion reports and the reports of independent evaluations, was conducted to assess the robustness

of effectiveness ratings and, in ACAs, also the ratings for other DAC criteria. The assessment of

robustness is based on the extent to which Activity Managers’ ratings were substantiated by the

evidence and analysis presented in the AMA/ACA, as well as in supporting documentation provided to

reviewers, in accordance with guidance in the Activity Quality Policy (AQP) and in the new AMA and

ACA templates. To have confidence in the robustness of Activity Managers’ effectiveness ratings

across all AMAs and ACAs, MFAT would expect at least 75% of the assessed ratings to be robust.

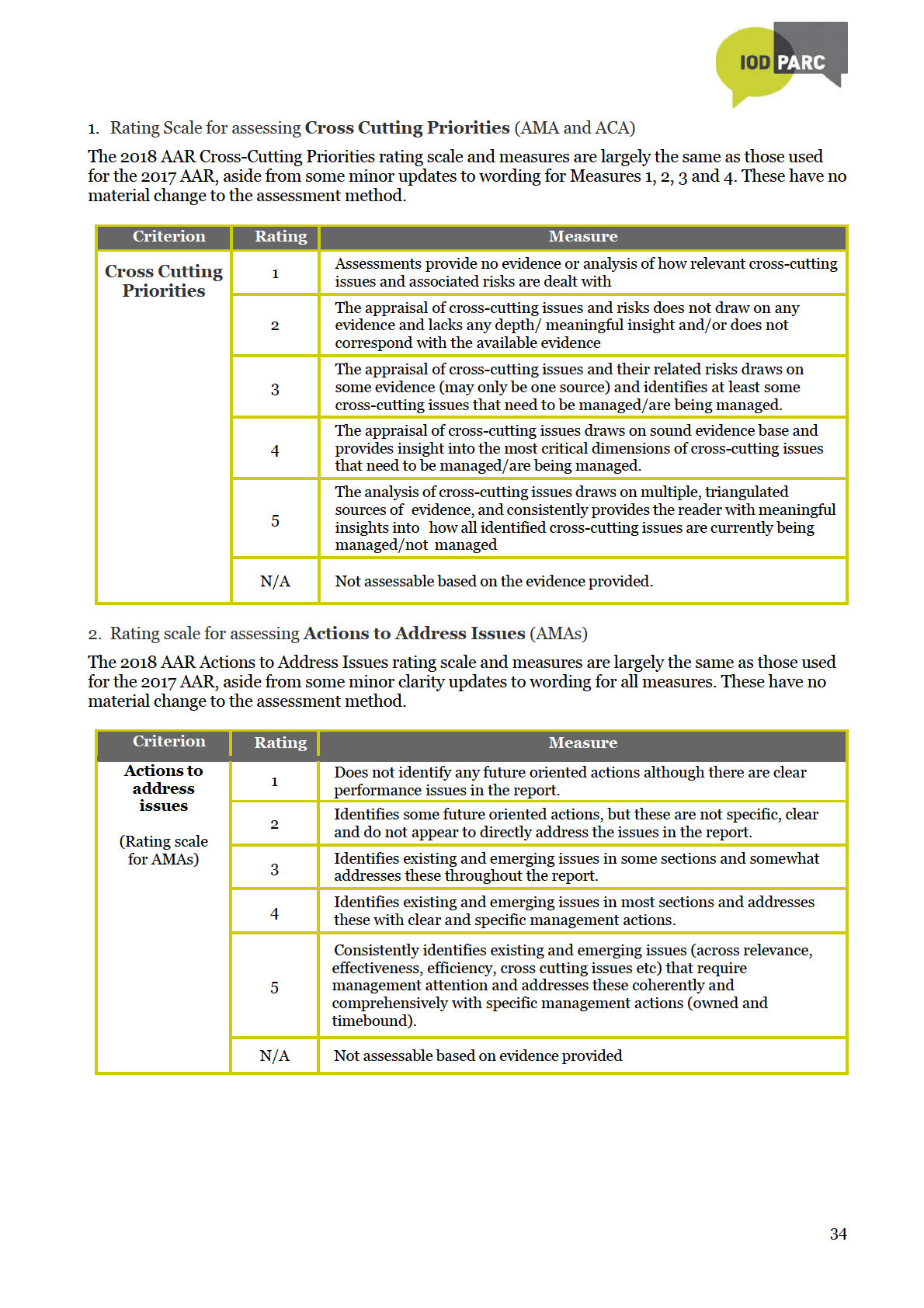

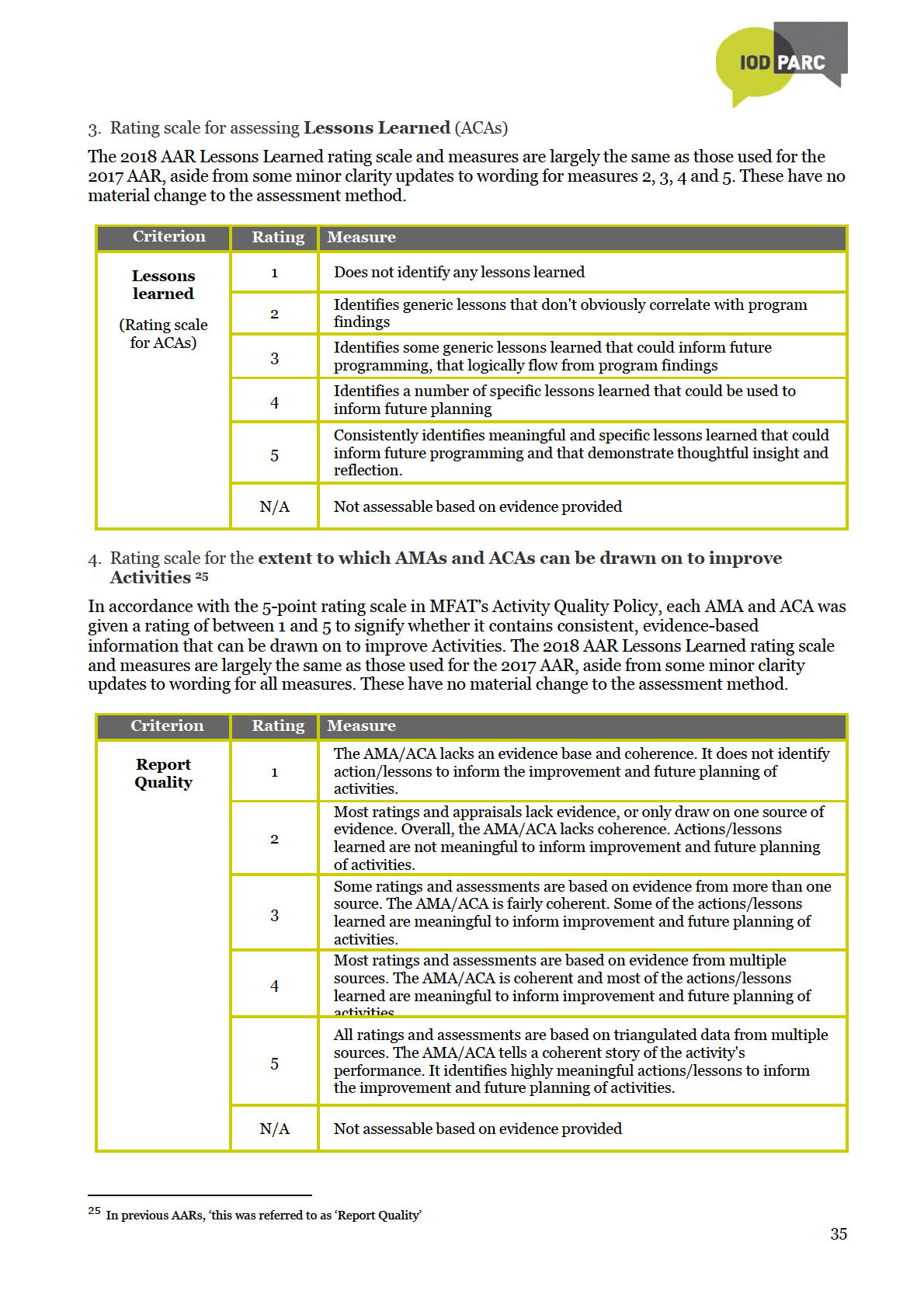

Non-rated elements of AMAs and ACAs were subject to more qualitative assessments, based on tailored rating scales. These assessments are not subject to the 75% confidence level.

Rating scales are in Appendix 1, while copies of the assessment templates are in Appendix 5.

Interviews

Effectiveness ratings in 34 AMAs (52%) and 18 ACAs (50%) were initially assessed as non-robust.

Ideally, Activity Managers responsible for all these AMAs and ACAs should have been interviewed

telephonically to clarify the evidence base for the effectiveness ratings. However, only eleven Activity

Managers, covering only one-third of the identified AMAs and ACAs, were interviewed.13 Following

interviews, a final assessment of the robustness of ratings was made.

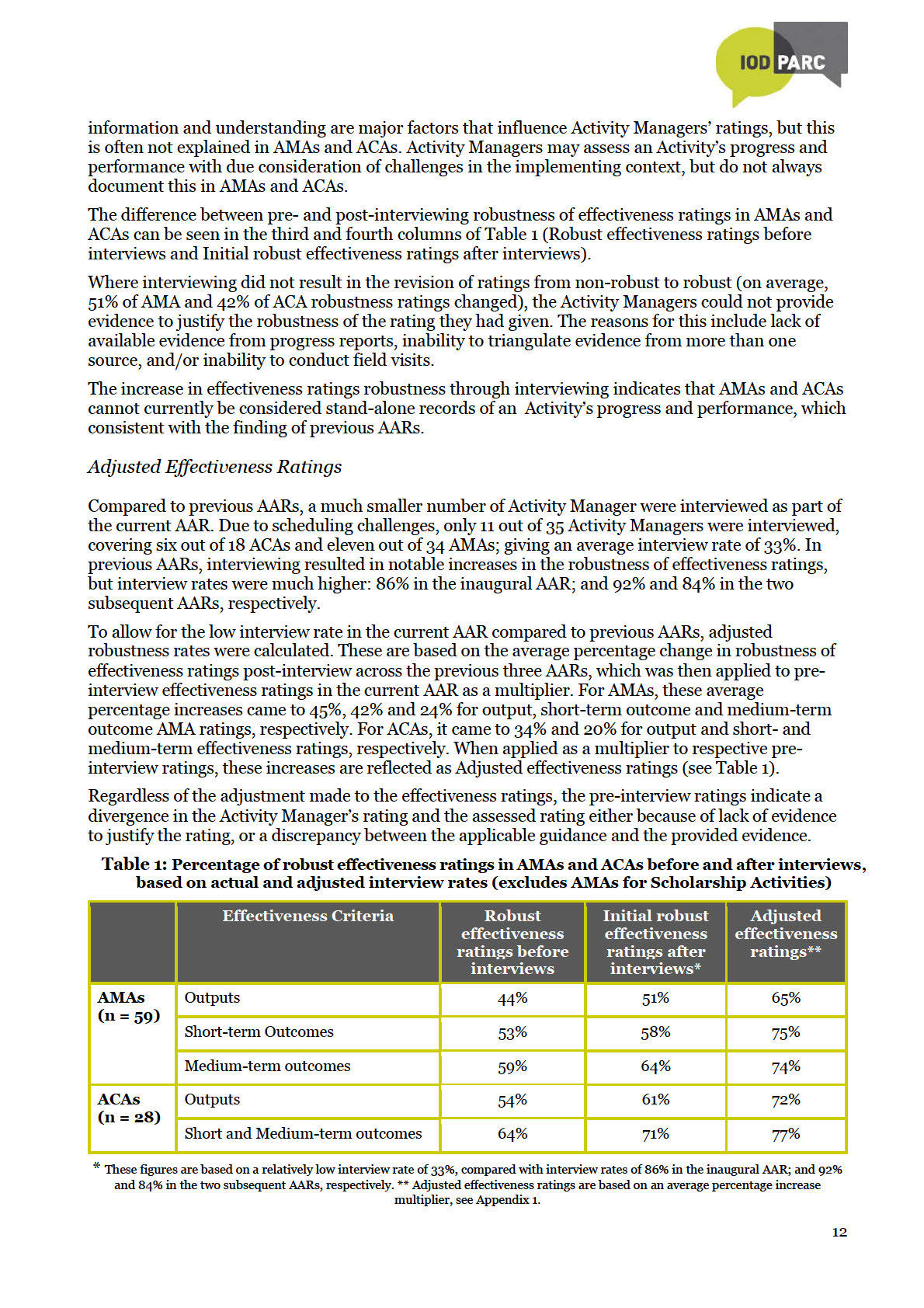

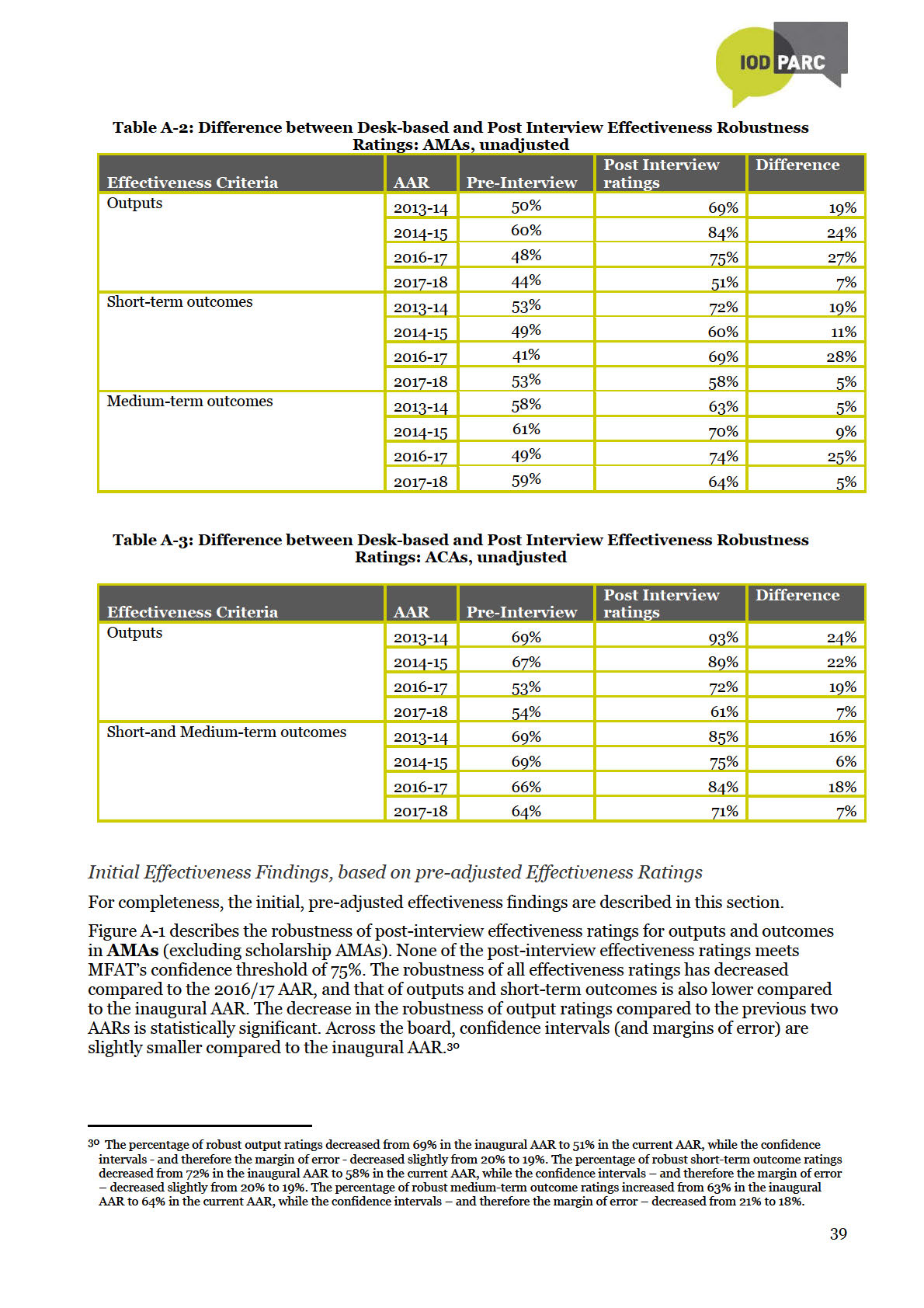

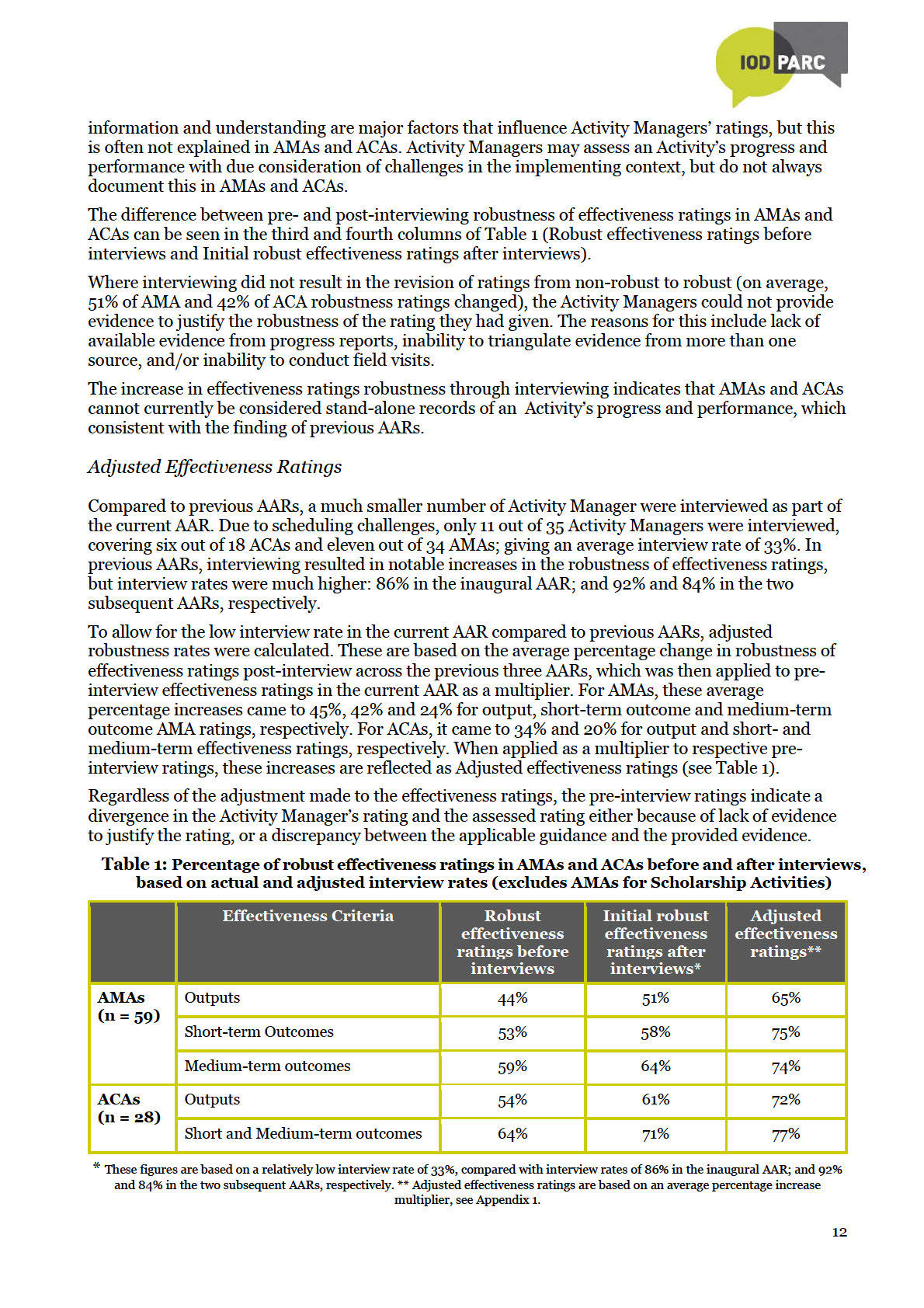

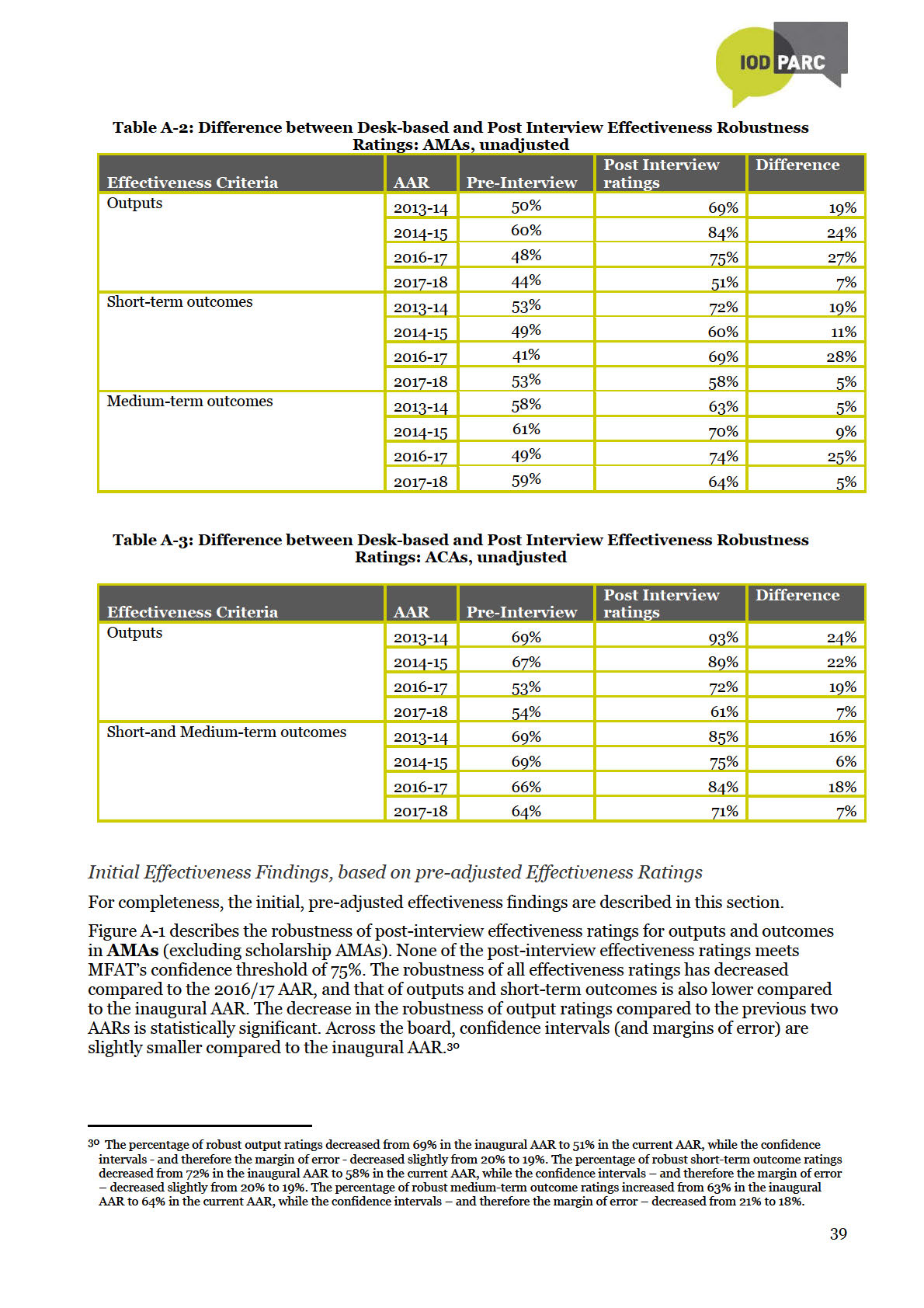

Interviewing resulted in an increase in the robustness of all effectiveness ratings for both AMAs and ACAs (see Table 1). Compared to before interviewing, the percentage of output ratings assessed as robust after interviewing increased by 7% for AMAs and 7% for ACAs. After interviewing, the robustness of both short-term and medium-term outcome ratings in AMAs increased by 5%, while the robustness of outcome ratings in ACAs increased by 7%.

Where non-robust ratings were revised to robust after interviewing, Activity Managers could provide

additional information or explanations to justify the ratings they had given. This evidence is not

systematically documented in AMAs or ACAs, for example judgements formed from project visits

and direct interaction with implementing partners and/or partner governments. Contextual

13 Of the 11 interviews that were conducted, four involved discussion of more than one AMA and/or ACA.

Limitations of the AAR

1. The AAR is based on selected information on Activity performance. This includes AMAs, ACAs,

the partner report(s) corresponding to the completion dates of AMAs/ACAs, as well as reports of

independent evaluations, where available. Interviews with Activity Managers supplement the

information base for some assessments. Other information that may affect ratings is not

reviewed.

2. Comparisons of actual post-interview effectiveness ratings between the current and previous

AARs should be made with caution.

Both the initial post-interview robustness ratings and the adjusted post-interview ratings have limitations. The initial post-interview ratings are not informed by a proportional number of interviews compared to previous years and therefore likely present an under-estimation of the robustness of effectiveness ratings. The adjusted post-interview ratings are indicative rather than definitive, but are likely to be closer to what the actual results may have been had a similar proportion of interviews been conducted as in previous AARs.

3. The assessment of DAC criteria (including effectiveness) was based on Quality Criteria

Considerations outlined in Appendix one of MFAT’s 2015 Aid Quality Policy. However, Activity

Managers likely based their ratings on the AMA/ACA Guidelines revised in 2017. This includes

simplified requirements for Effectiveness, Relevance, Impact, Sustainability and Efficiency, and

Cross-Cutting issues being addressed in the Effectiveness section rather than throughout all

DAC criteria. This could affect the comparability of assessed ratings in the current AAR with

those of previous AARs.

4. The sample size is a representative sample determined at a 95% confidence level and a confidence interval of 10. Choosing a narrower confidence interval would require a larger sample and would reduce the margin of error, enabling more precise estimates of AAR findings.14 However, for the 2017-18 AAR, confidence intervals have only been calculated for the initial post-interview ratings and not the adjusted post-interview ratings due to potential limitations in the approach.

5. Keeping interviews to 30 minutes each means that they focus on non-robust effectiveness

ratings, as well as four process-related questions. Other non-robust ratings (e.g. for other DAC

criteria in ACAs), or the analyses of CCIs, Lessons and Actions can generally not be discussed

within the available time.

6. The AAR was carried out by two assessors, and pairs of assessors have also changed in

subsequent AARs. Inter-assessor consistency is therefore a potential limitation. This was

mitigated through (1) In-depth orientation and induction of assessors by an experienced Team

Leader, who has been involved in all four AARs to date; (2) Assessor ‘calibration’ following the

assessment of two AMAs and one ACA; (3) Cross-checks and investigation of inconsistent

findings compared to previous AARs; and (4) Independent quality assurance of selected AMAs

and ACAs completed by both assessors.
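As an illustration of limitation 4, the link between the chosen confidence interval and the required sample size can be sketched in a few lines of Python. This is a minimal sketch and not the calculation used for the AAR: it assumes that "a confidence interval of 10" means a margin of error of plus or minus 10 percentage points, it uses the standard sample-size formula for a proportion with a finite population correction, and it uses a hypothetical population of 200 assessments. The function name `required_sample_size` and all input values are illustrative assumptions.

```python
import math


def required_sample_size(population, margin=0.10, z=1.96, p=0.5):
    """Sample size for estimating a proportion in a finite population.

    population: total number of assessments (hypothetical value in this sketch)
    margin:     margin of error as a fraction (0.10 = +/- 10 percentage points)
    z:          standard normal quantile (1.96 for 95% confidence)
    p:          assumed proportion; 0.5 gives the most conservative sample size
    """
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    # Finite population correction: a small population needs a smaller sample.
    n = n0 / (1 + (n0 - 1) / population)
    return math.ceil(n)


if __name__ == "__main__":
    # Hypothetical population of 200 AMAs; not a figure taken from the report.
    for margin in (0.10, 0.05):
        print(f"margin +/-{margin:.0%}: sample of {required_sample_size(200, margin)}")
```

Under these assumptions, halving the margin of error from 10 to 5 percentage points doubles the required sample (from 66 to 132 of the hypothetical 200 assessments), which is the trade-off that limitation 4 describes.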

14 Taking initial output ratings in Annex 1 as an example, this means that we can say with 95% certainty that between 41% and 60% of AMAs will have robust output ratings, no matter how many different samples of the same size we draw from the total number of AMAs. Another way of saying it is that 51% of AMAs have robust output ratings, with a confidence interval 19 percentage points wide (a margin of error of roughly plus or minus 9.5 percentage points). If the sample size is increased, the confidence interval (and margin of error) will become smaller.

3. Key findings

3.1 Robustness of effectiveness ratings

For AMAs, Activity Managers rate effectiveness of Activities separately for outputs, short-term

outcomes and medium-term outcomes. For ACAs, the effectiveness of Activities is rated for outputs,

while a combined rating is given for short- and medium-term outcomes.

The current AAR included an integrated AMA for multiple scholarship Activities, comprising

scholarships for New Zealand based tertiary, Commonwealth, Regional Development

Scholarships and In-Country English Language Training. It incorporated some, but not all the

AMAs in the other three selected scholarship Activities, namely scholarships for Commonwealth,

Regional Development, Pacific Development and Short-Term Training in Niue, Timor-Leste and

Tuvalu, respectively. Compared to the previous AAR, the influence of scholarship AMAs on the

robustness of effectiveness ratings in the current AAR has been similar, although less prominent.

When AMAs for scholarship Activities were excluded from analyses, the robustness of output and

short-term outcome ratings increased, while that of medium-term outcomes decreased, but not as

markedly as in the previous AAR.

The quantitative analysis of results is based on the final, adjusted, post-interview assessment of the

robustness of ratings in AMAs and ACAs, excluding AMAs for Scholarship Activities. To be

confident in the robustness of effectiveness ratings, MFAT would expect at least 75% of these

ratings to be robust.

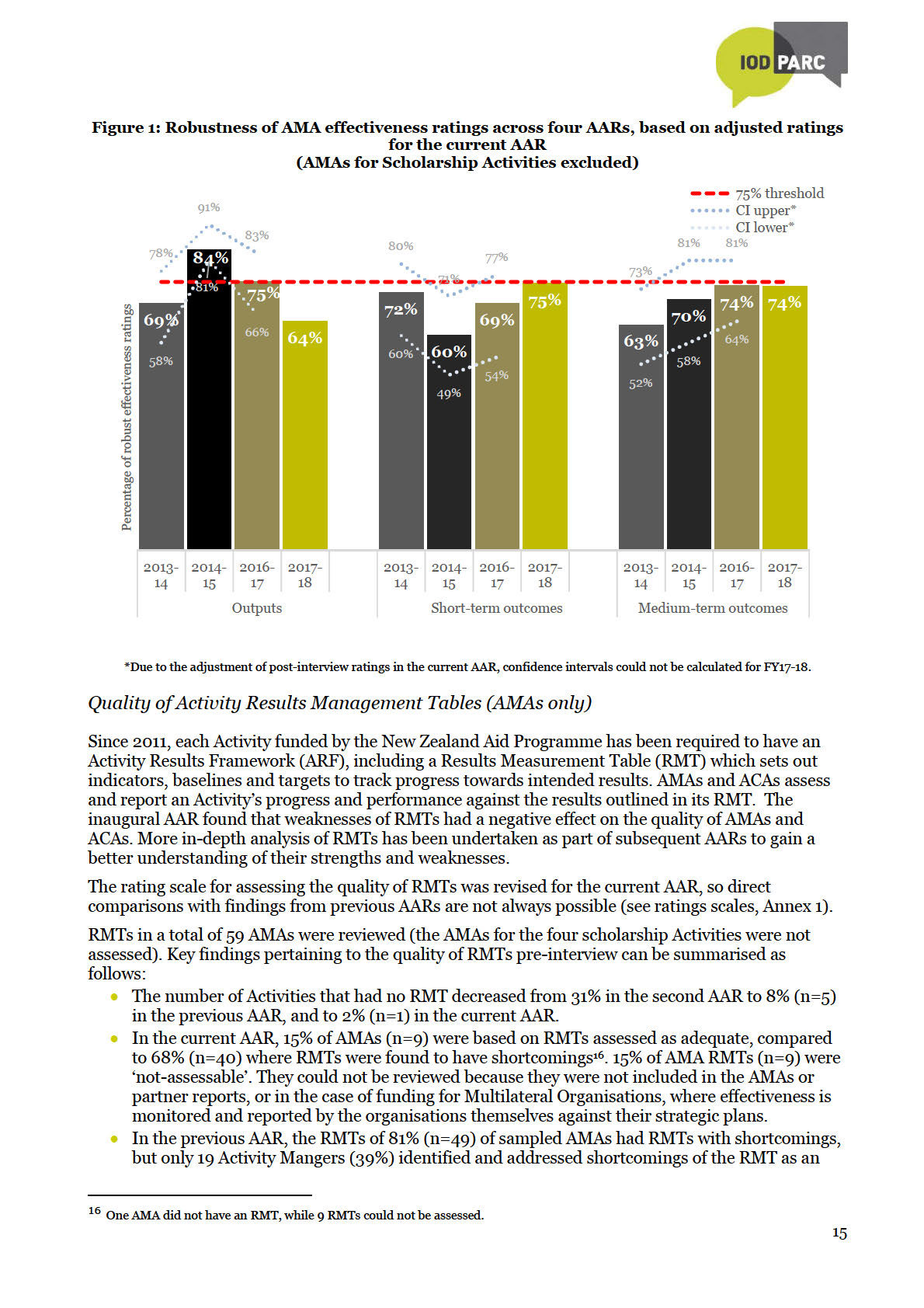

Robustness of effectiveness ratings: AMAs

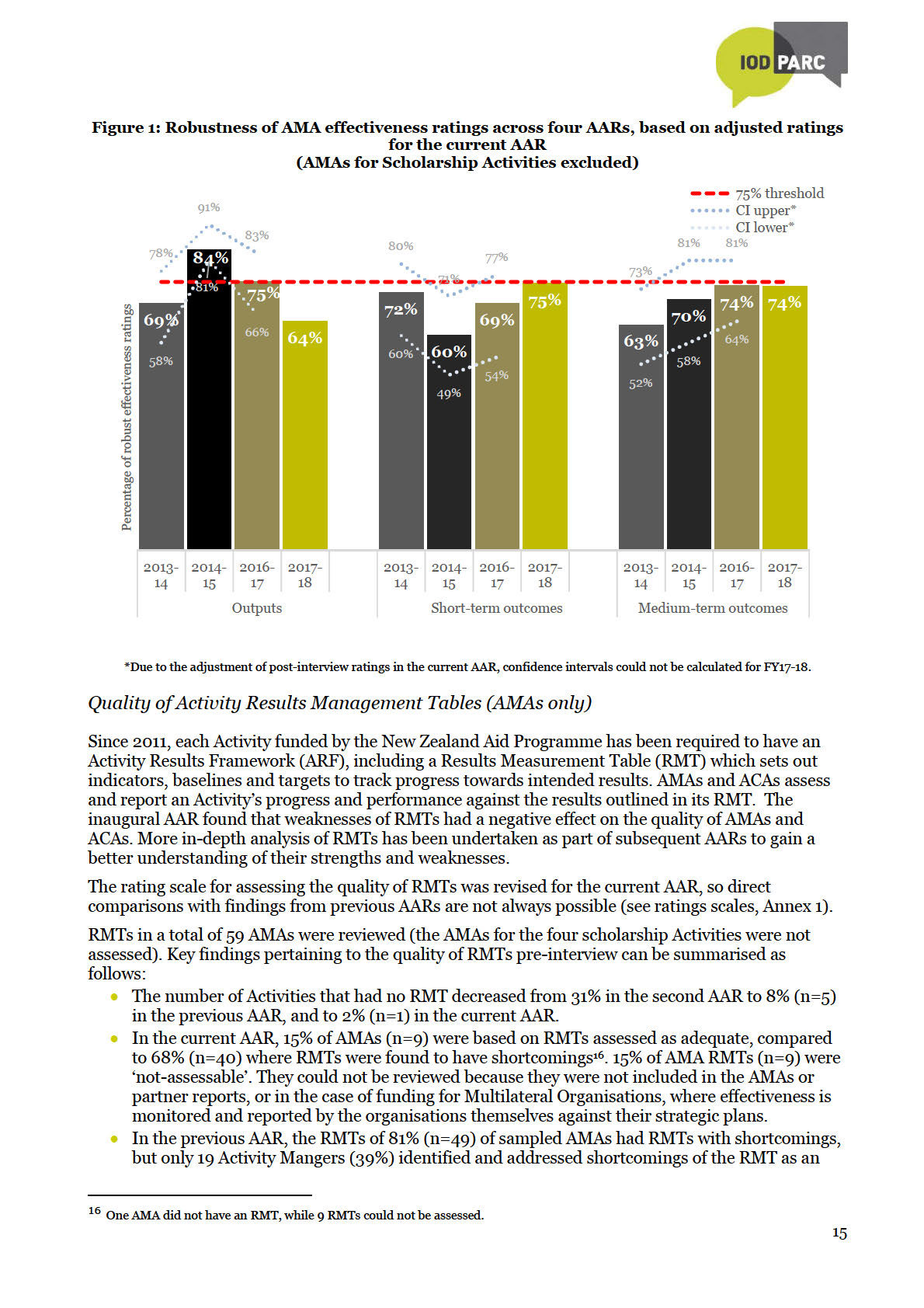

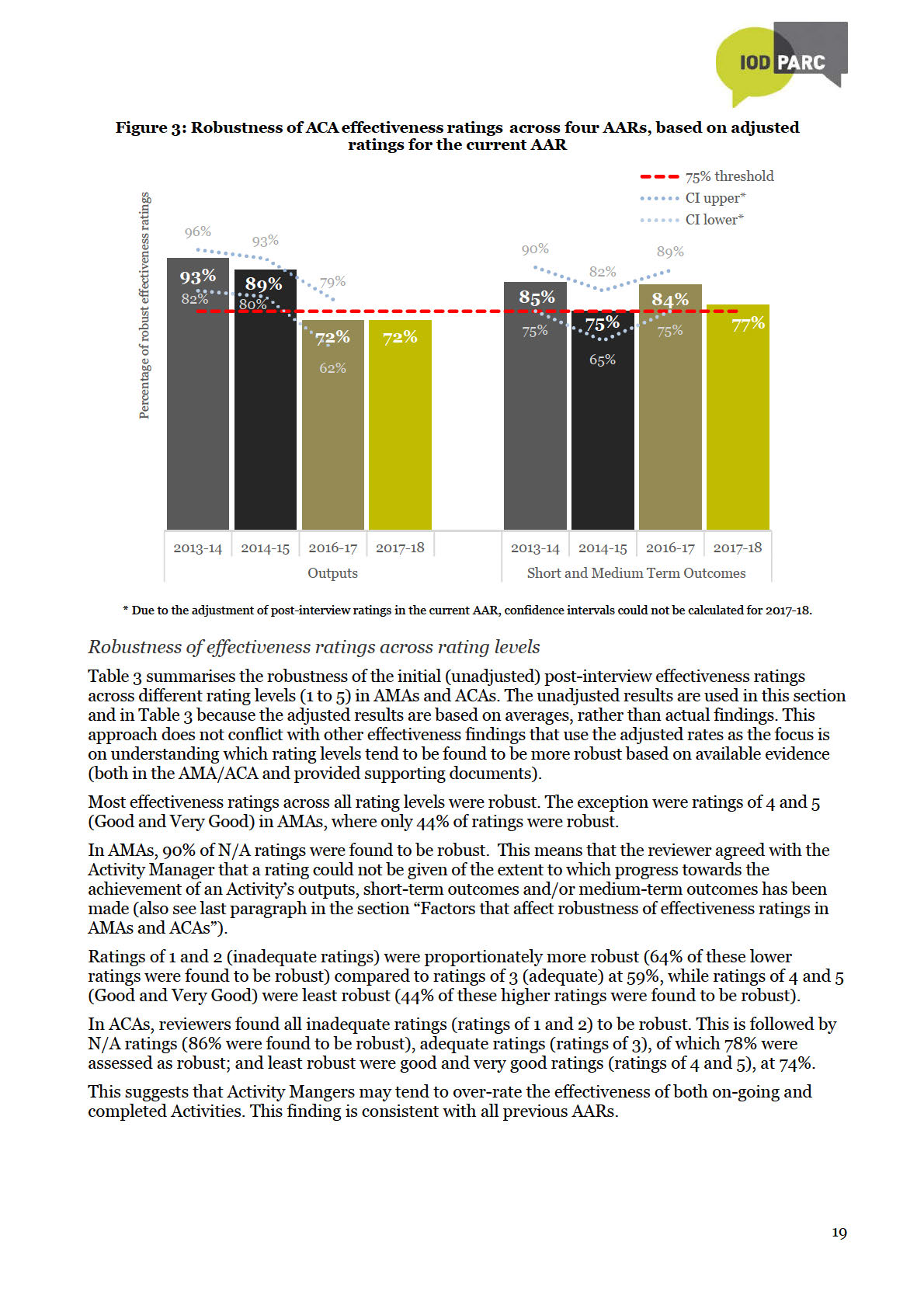

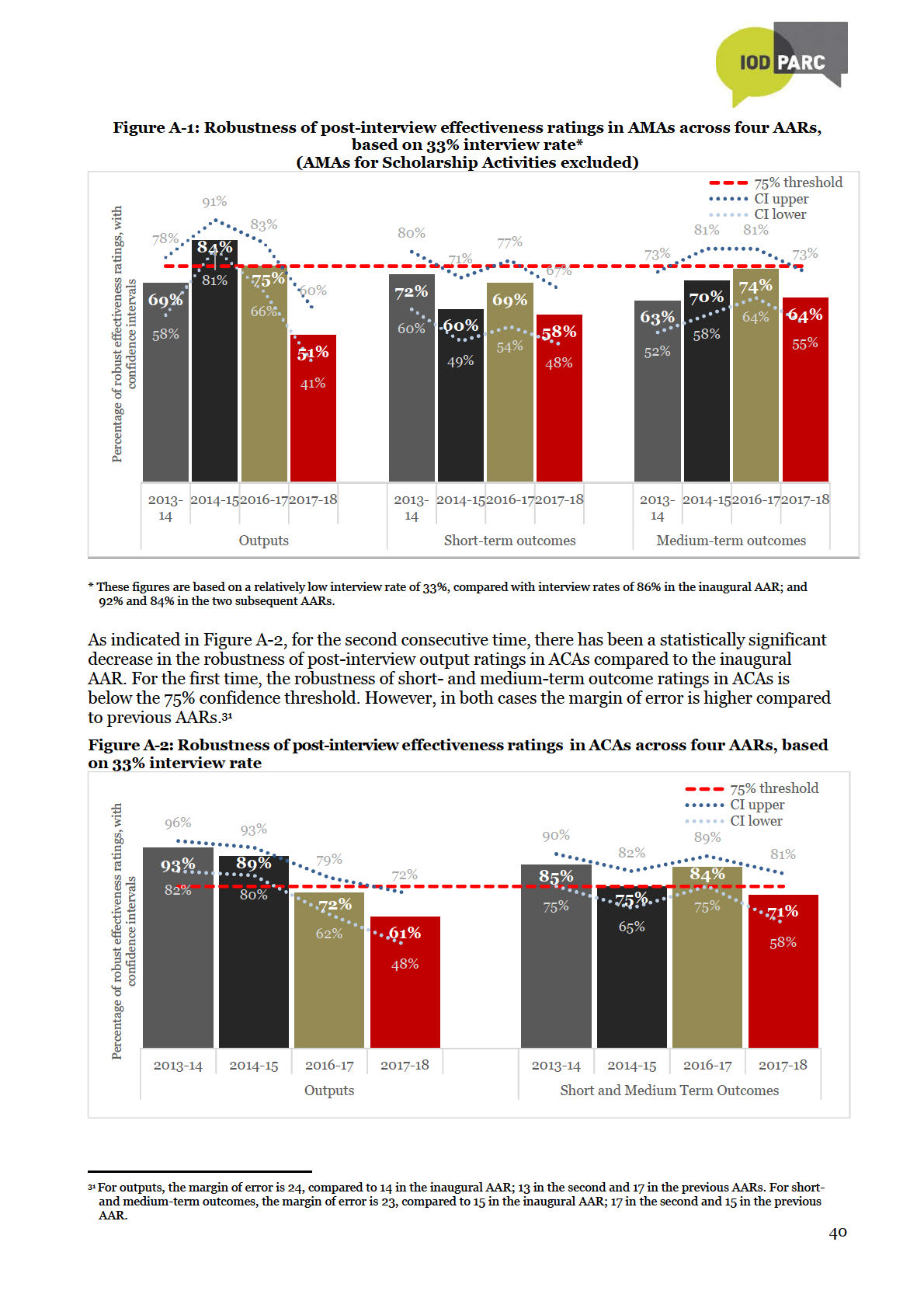

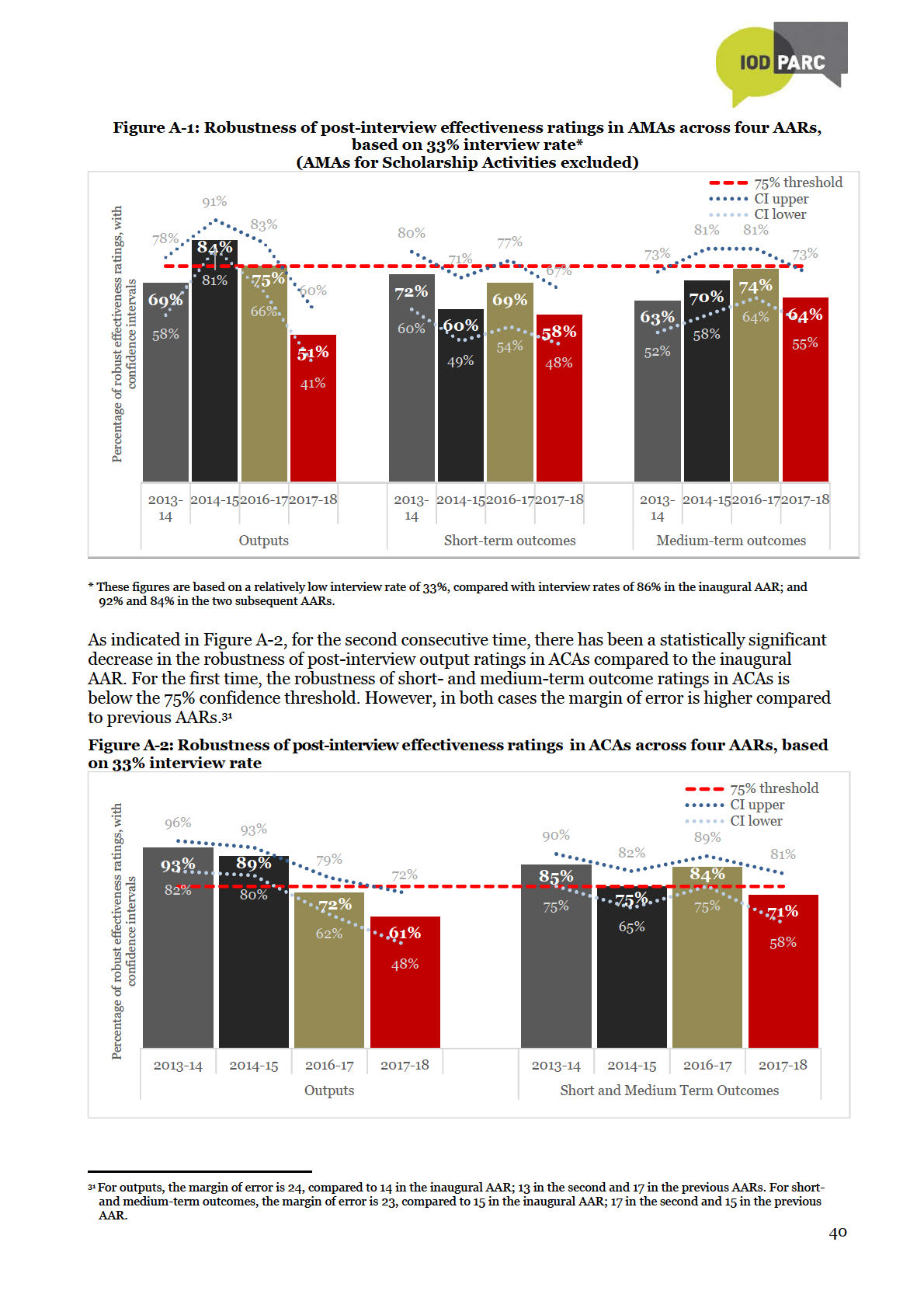

The robustness of output ratings in the current AAR remains below the 75% confidence threshold and

is also lower compared to previous AARs (Figure 1). The robustness of medium-term outcome ratings

would be just below the 75% threshold – higher than the baseline and similar to the previous AAR.

The robustness of short-term outcome ratings would be higher compared to the previous three AARs

and, for the first time, it would meet the 75% threshold.

Assessment of likely statistical significance between the adjusted 2017-18 results and both the inaugural AAR and the 2016-17 results indicated no statistically significant changes.15

This suggests that MFAT can be reasonably confident in the robustness of AMA effectiveness ratings.

15 Assessment of statistical significance is limited by availability of detailed data from the inaugural AAR and the adjustment of ratings

applied in 2017-18.

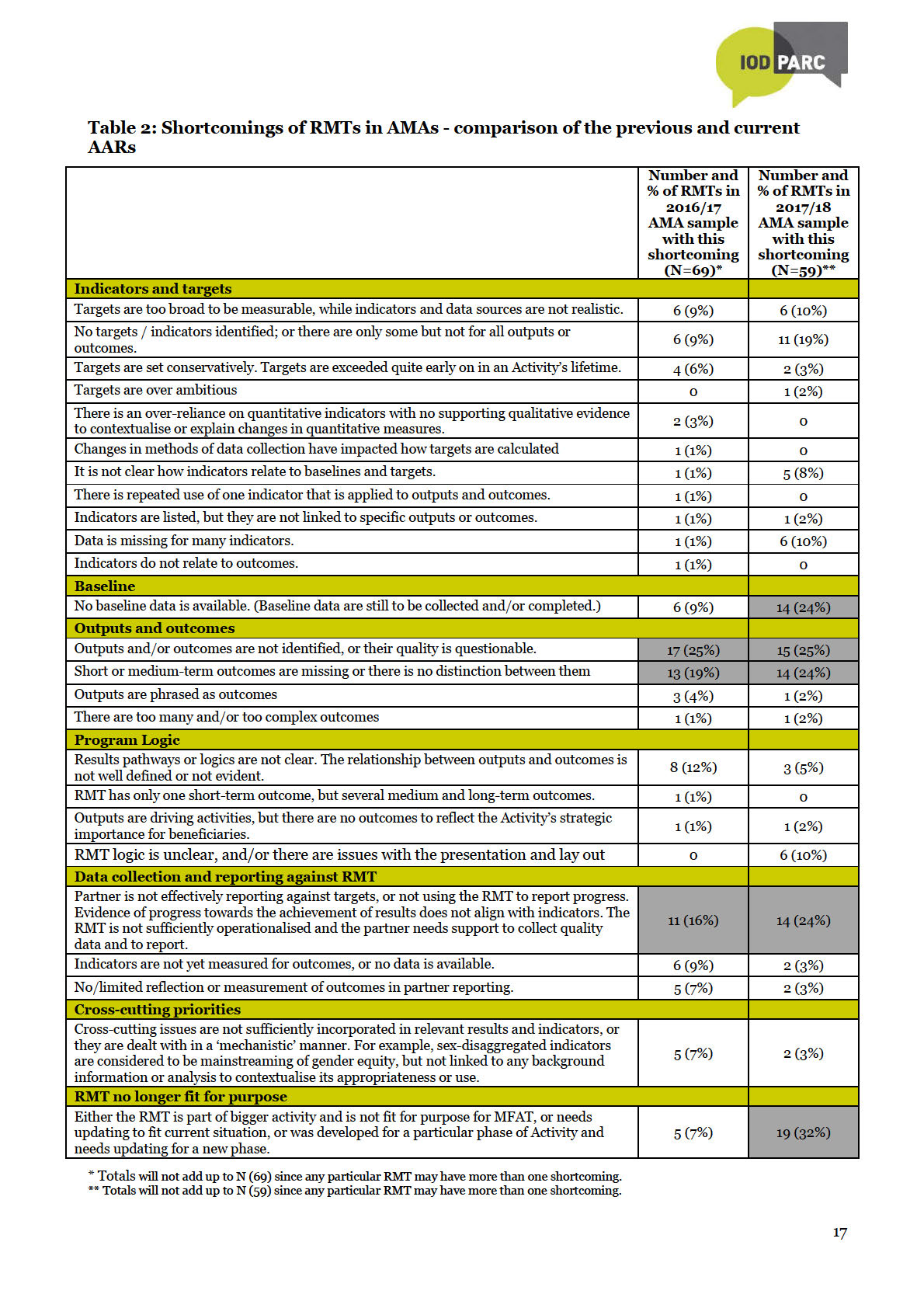

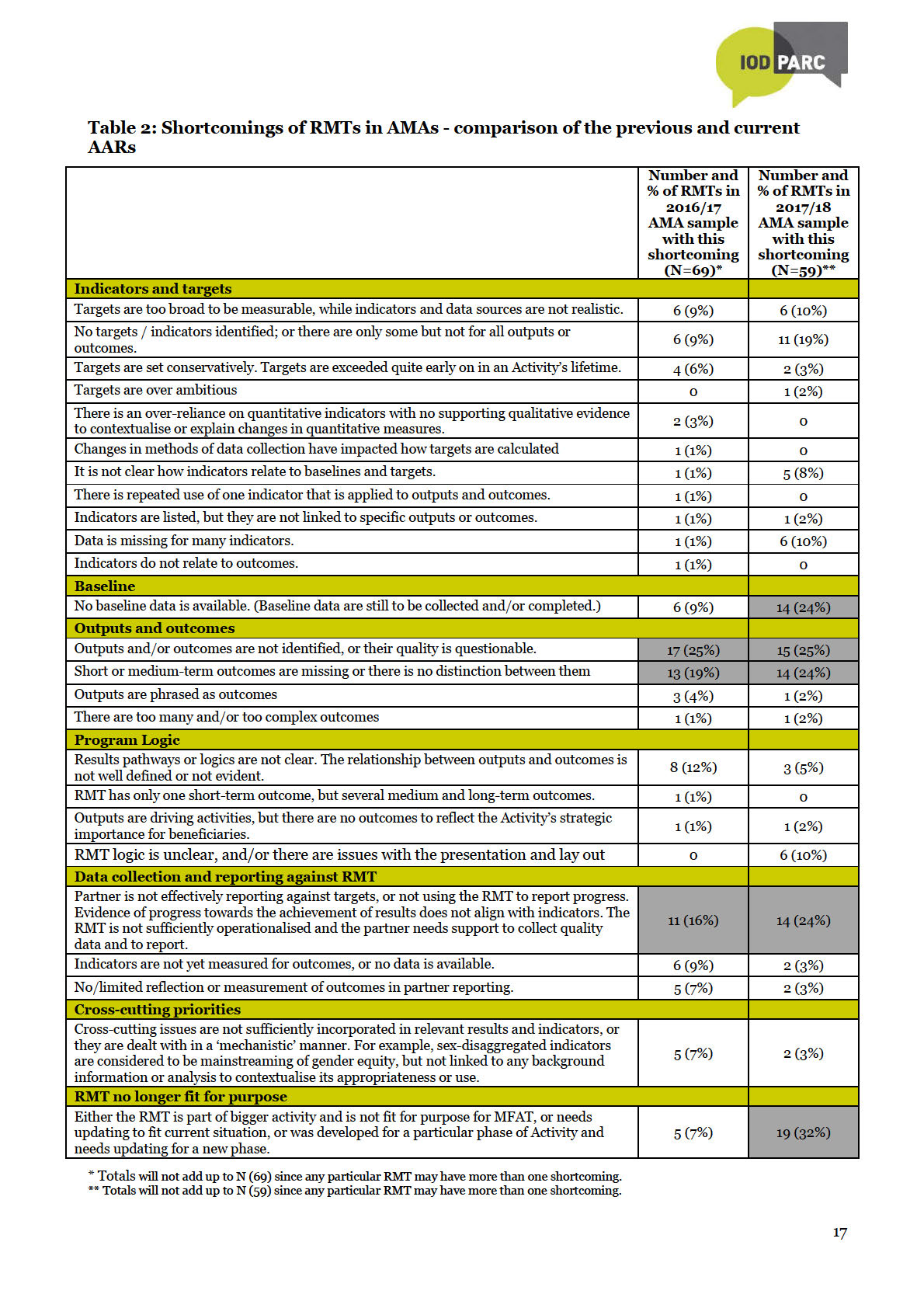

There are some differences in the shortcomings of RMTs found in the current AAR compared to the

previous AAR. In the previous AAR, shortcomings of RMTs were mainly related to Outputs and

Outcomes, e.g. outputs and outcomes were not clearly identified or distinguished; there were too

many, or they were too complex (34 out of 69 RMTs, that is almost half, had such shortcomings).

This was followed by challenges around Indicators and Targets (24 out of 69 RMTs, that is 35%),

with challenges around data collection and reporting against RMT also being common (22 out of 69

RMTs, that is 32%). In the current AAR, most shortcomings were also related to Indicators and

Targets (32 out of 59, that is 54%) and Outputs and Outcomes (31 out of 59, that is 53%). However,

compared to the previous AAR, substantially more challenges were associated with out-of-date

RMTs and RMTs that were no longer fit-for-purpose, as well as shortcomings related to data

collection and reporting. There has also been an increase in the number of RMTs where baseline

data were absent.

In the current AAR, the most common specific shortcomings relate to outdated RMTs, or RMTs that were no longer fit-for-purpose (19 out of 59, that is 33%), followed by poorly identified outputs and/or outcomes (15 out of 59 RMTs, that is 25%, had shortcomings in this regard). In the previous AAR, the most common specific RMT shortcomings were poorly identified outputs and outcomes, and poorly distinguished outputs and outcomes (17 out of 69, or 25%, and 13 out of 69, or 19%, respectively). Additional shortcomings noted in the current AAR that were not found in the previous AAR relate to targets being over-ambitious, lack of overall clarity and inadequate presentation/layout of the RMT, as well as inadequate accuracy of data/monitoring.

It appears that developing and updating RMTs to ensure their relevance and use as dynamic frameworks for monitoring and strengthening Activities on an on-going basis is becoming increasingly challenging. Partner reporting against RMT indicators and targets is also falling short by a substantial margin. However, it is encouraging that an increasing number of Activity Managers are proposing appropriate actions to address the identified shortcomings of RMTs: of the 40 AMAs that had RMTs with identified shortcomings, 67% included appropriate recommendations to revise and/or strengthen the Activity’s RMT. This could indicate that more Activity Managers are realising the value of robust RMTs and are keen to ensure they are fit-for-purpose for monitoring Activities. More robust RMTs would be more useful as Activity monitoring tools and would likely encourage more coherent partner reporting against expected results.

Training and technical assistance could be instrumental in enhancing the robustness of RMTs.

Special attention could be given to the development and socialisation of RMTs as foundations of

Activity design, as well as dynamic tools for Activity monitoring, reporting and improvement.

Progress reporting for more complex Activities, or for Activities that do not require RMTs per MFAT

policy might require special attention.

Robustness of effectiveness ratings: ACAs

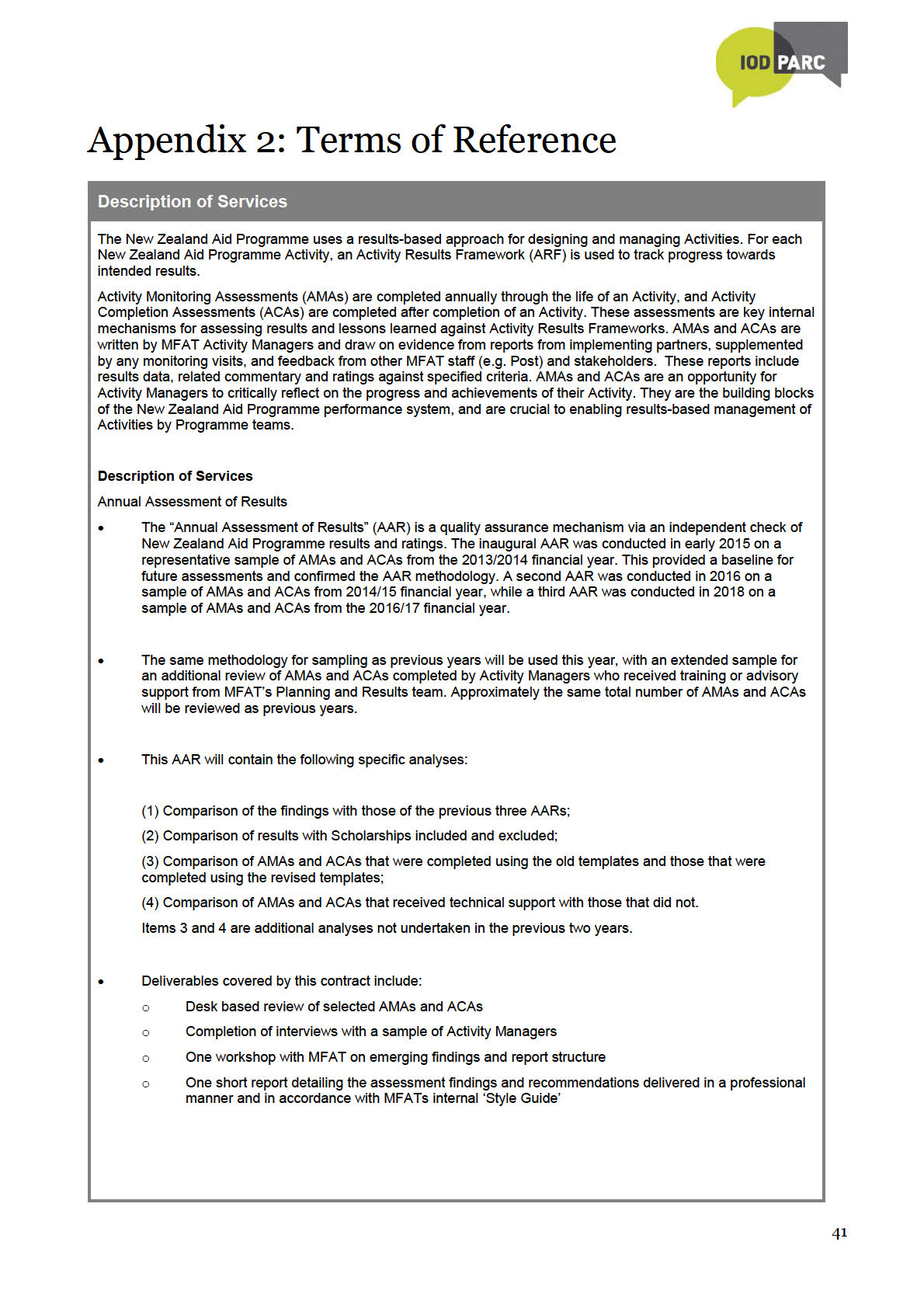

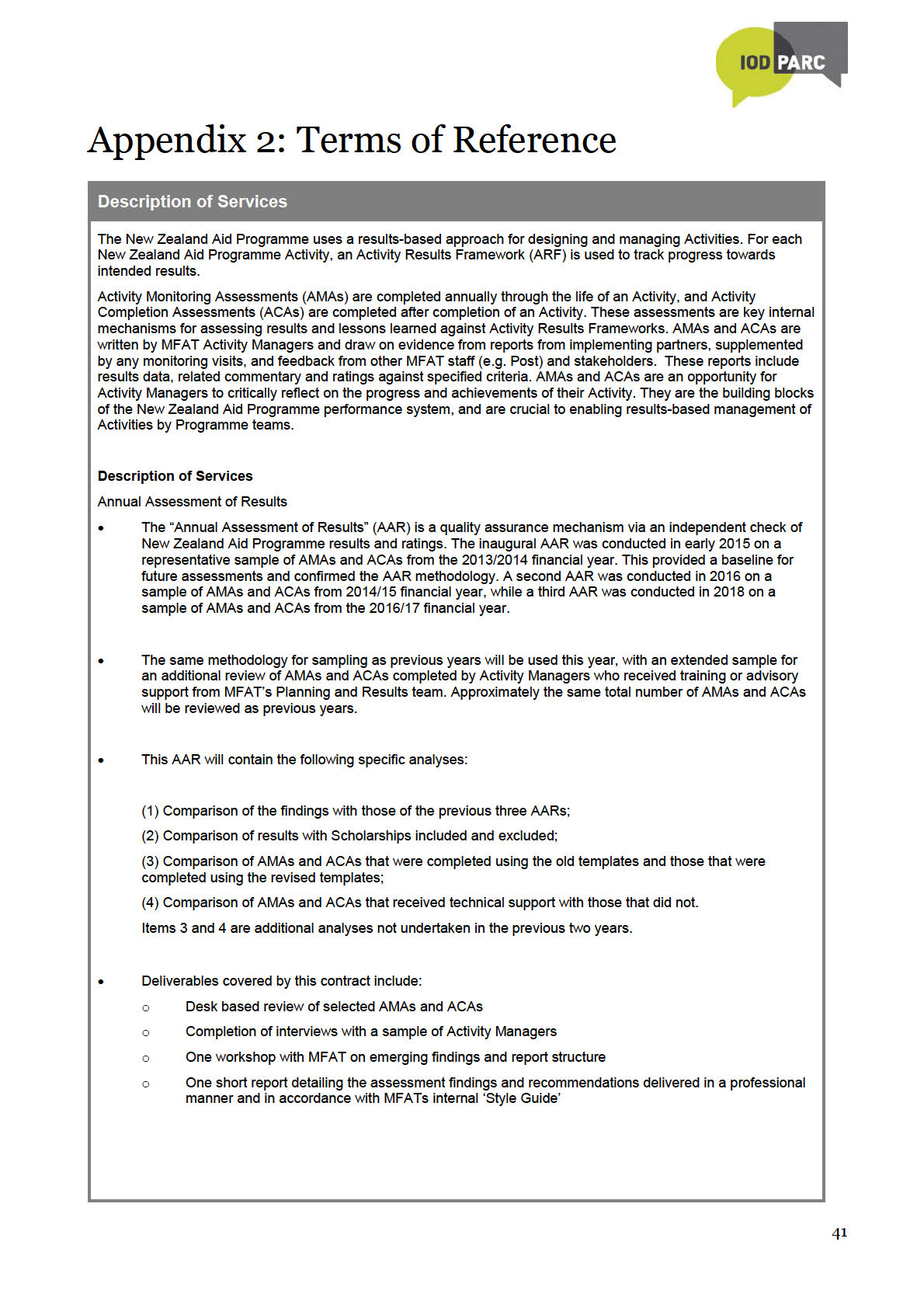

Based on adjusted post-interview effectiveness ratings, the robustness of output ratings is 72% and

that of short- and medium-term outcomes is 77% (see Figure 3). This brings the robustness of output

ratings in line with the 2016-17 AAR (yet still lower compared to the baseline), while the robustness of

short- and medium-term results now exceeds the 75% confidence threshold (but is also still lower

compared to the baseline).

Assessment of likely statistical significance between the adjusted 2017-18 results and both the inaugural AAR and the 2016-17 results indicates a potentially statistically significant decrease in the robustness of output ratings between the inaugural AAR and the current AAR.17

When an Activity ends, it is especially important to know whether it has achieved its results or not. It

is therefore encouraging that MFAT can be reasonably confident about the robustness of short- and

medium-term outcome ratings in ACAs.

17 Assessment of statistical significance is limited by availability of detailed data from the inaugural AAR and the adjustment approach

applied in 2017-18.

have a robust RMT, which enabled ratings to be substantiated by reporting against clearly

identified results and indicators. The same applies to nine out of eleven ACAs (82%).

Compared to previous AARs, robust ratings in the current AAR appear to demonstrate greater

confidence in evidence on the part of Activity Managers. Ratings were often substantiated by

references to movements in indicators, or progress in relation to baselines and targets.

In 24 AMAs (excluding scholarship AMAs) and five ACAs, N/A effectiveness ratings were

assessed as robust – this means that the reviewers agreed that an Activity’s effectiveness could

not be rated. Reasons for this included:

Shortcomings of RMTs. In 17 AMAs (including AMAs for Activities involving Multilateral

Organisations and those funded through Budget Support, as well as multi-country and multi-

donor Activities) and two ACAs (including one Humanitarian Response Activity), the absence

or shortcomings of RMTs made it impossible to assess effectiveness.

In five of 24 AMAs (21%), it was too early to assess progress towards outcomes.

In seven of 24 AMAs (29%), there was no data/evidence available to assess effectiveness,

including three AMAs where baseline data were not yet available. Effectiveness in four ACAs

could not be assessed due to lack of data/evidence.

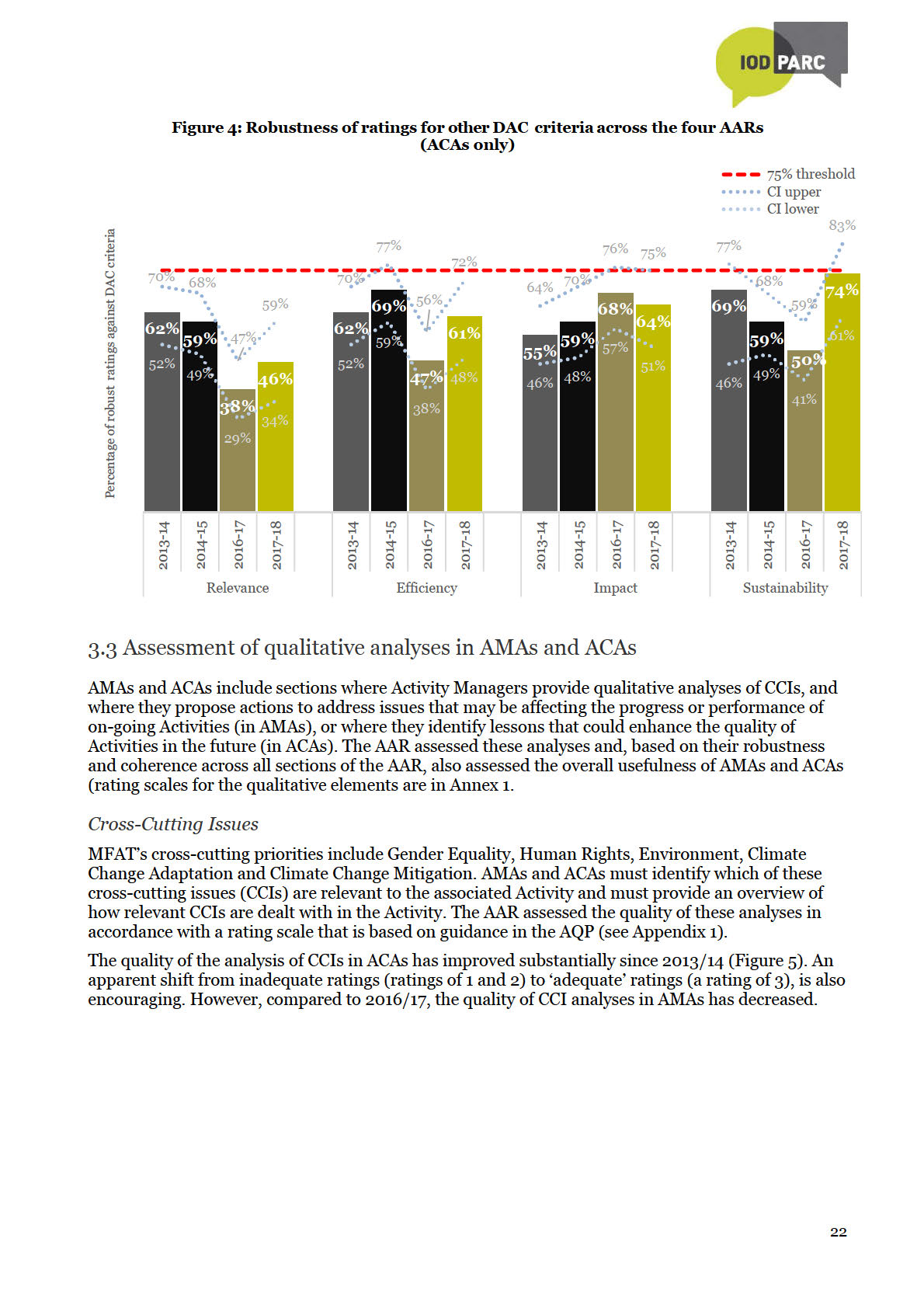

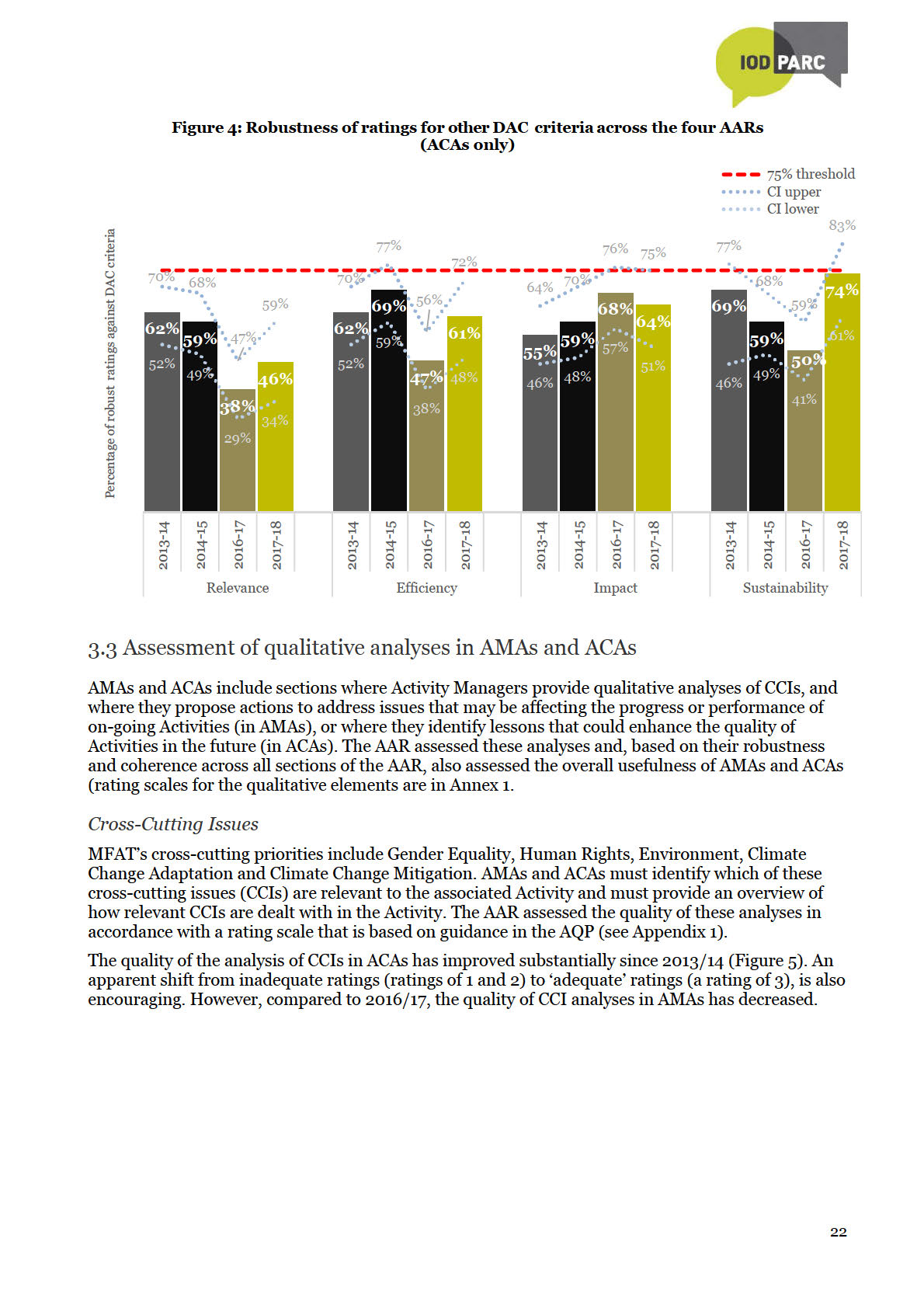

3.2 Robustness of other DAC criteria ratings (ACAs only)

The assessed robustness ratings for the DAC criteria of relevance, efficiency, impact and sustainability in ACAs are based on desk reviews, as interviews focused on effectiveness ratings. Had the DAC ratings been discussed further at interview, there may have been some increase in their robustness in line with increases seen in previous AARs, where the ratings of these criteria were discussed during interviews.

The comparative robustness of ratings for the DAC criteria across the four AARs is shown in Figure 4. Compared to the baseline (inaugural AAR), the robustness of sustainability and impact ratings has increased. While that of efficiency has decreased by only one per cent, the robustness of relevance ratings has decreased by 16%. No rating has yet reached the MFAT confidence level of 75%. Bearing in mind that ratings of these criteria were not discussed during interviews, and despite encouraging increases in the robustness of sustainability and impact ratings, MFAT cannot use the ratings of other DAC criteria in ACAs with confidence to inform decision-making.

The main reason for non-robust relevance ratings is that they do not address all considerations outlined in the 2015 AQP, against which they were assessed. However, MFAT staff may have, appropriately, based their response on the AMA and ACA guideline ‘Activity Quality Ratings for Completion Assessment’ revised in 2017, which is not consistent with the 2015 AQP. Key changes include: simplified requirements for Relevance, Impact, Sustainability and Efficiency, and Cross-Cutting issues being addressed in the Effectiveness section rather than throughout all DAC criteria. Therefore, aspects such as the relevance of modality and the mainstreaming of cross-cutting issues across all criteria are of lesser importance.

A likely result of this would be an increase in the number (and proportion) of robust relevance, efficiency, impact and sustainability ratings – and the robustness of sustainability ratings would likely exceed the 75% confidence threshold. Reviewers based their assessment of the robustness of these ratings on a number of considerations that are no longer included in the revised guidance. Had assessments been based on the revised guidance, more ratings may have been assessed as robust.

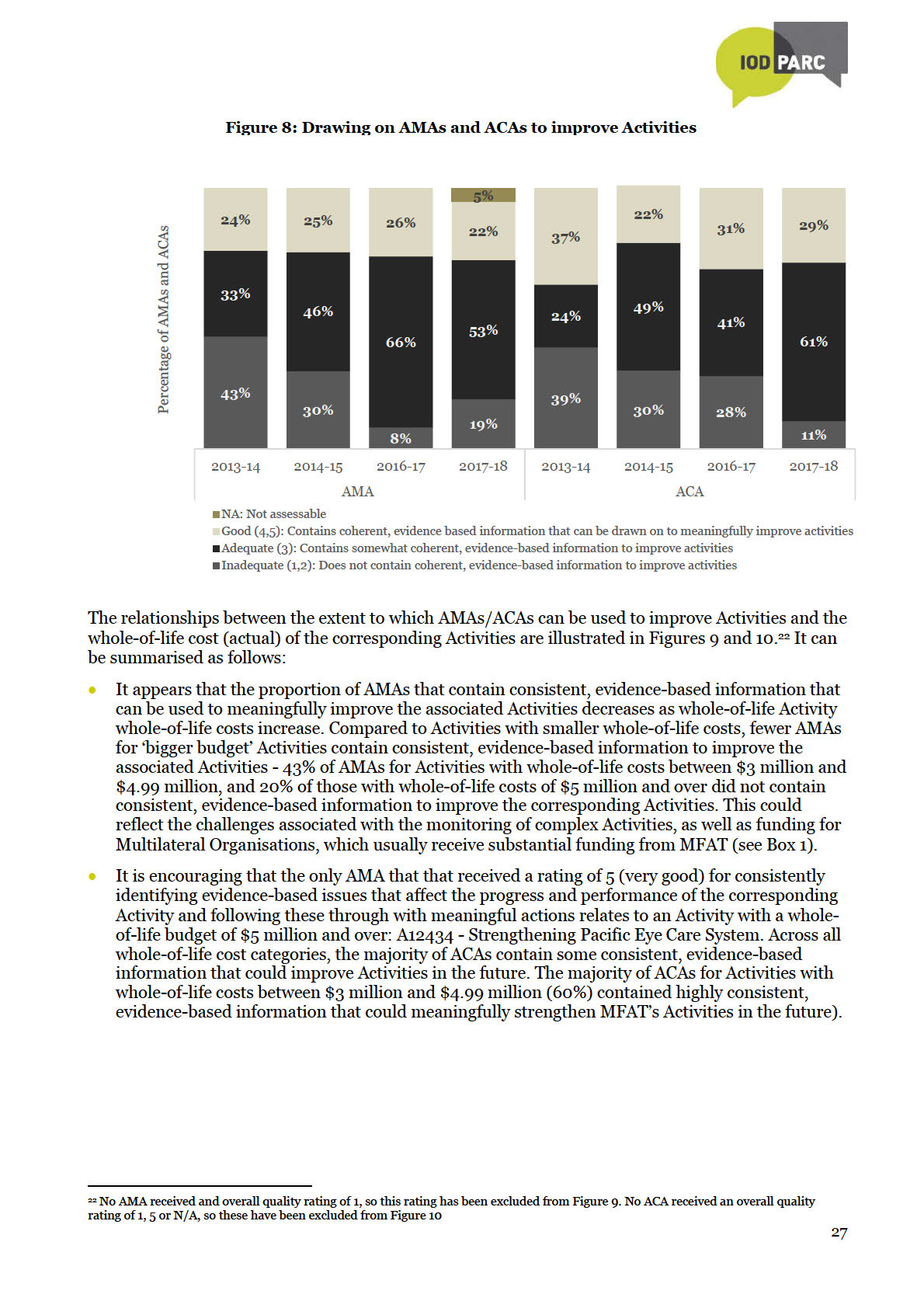

Drawing on AMAs and ACAs to improve Activities

The extent to which AMAs and ACAs can be drawn on to improve Activities depends on whether they are based on evidence from more than one source (regardless of the robustness of effectiveness ratings); whether they tell a coherent, evidence-based story of progress; and whether they consistently identify meaningful actions (AMAs) or lessons (ACAs) to inform the improvement and future planning of Activities (rating scales are presented in Appendix 1).

Figure 8 suggests that there could be reason to be more confident in drawing on AMAs and ACAs to improve Activities:

The percentage of AMAs that received an overall rating of 1 or 2 (meaning they do not contain consistent, evidence-based information to improve the associated Activity) decreased from 43% in the inaugural AAR to 19% in the current AAR; that is a decrease of 24%.

The percentage of AMAs that received overall ratings of 4 and 5 (meaning that they contain highly consistent, evidence-based information to improve the associated Activity) shows small decreases across successive AARs. In the current AAR, 22% of AMAs received a rating of 4 or 5, which is a decrease of 2% compared to the inaugural AAR. This coincided with an increase of 20% in the proportion of AMAs that contain fairly consistent, evidence-based information to improve the associated Activities, up from 33% in the inaugural AAR to 53% in the current AAR. This indicates that the proportion of AMAs that cannot be drawn on to improve Activities is decreasing in favour of those that can be drawn on to improve Activities, although there is room for improvement.

Over time, the proportion of ACAs that do not contain consistent, evidence-based information to improve Activities has reduced substantially (from 39% in the inaugural AAR to 11% in the current AAR, a decrease of 28%), but the proportion that provides highly consistent, evidence-based information to improve Activities has also decreased somewhat (from 37% to 29%, a decrease of 8%). The proportion that provides fairly consistent, evidence-based information to improve Activities has increased by 37%, up from 24% in the inaugural AAR to 61% in the current AAR. This indicates that the vast majority of ACAs contain some information that could meaningfully be used to improve Activities in the future.

Box 2

2017/18 AMAs on strengthening Scholarship Activities

When assessed separately, all four scholarship AMAs in the current AAR refer to actions that could strengthen monitoring, reporting and AMAs for scholarship Activities in the future. This includes the development of a revised RMT for scholarship Activities, which was being undertaken as part of a strategic evaluation of the scholarship programme. The evaluation was underway at the time the AMAs were being drafted. The three country scholarship AMAs also refer to a tracer study that was underway at the time the AMAs were being drafted, as well as the alumni strategy and a new Scholarships and Alumni Management System (SAM), which are expected to strengthen monitoring and reporting against medium-term outcomes. It would be important to monitor the effect of these initiatives on the robustness and quality of scholarship AMAs in the future.

4. Summary of Findings

1. Anecdotally, interviews with Activity Managers conducted as part of the current AAR revealed

that they have a positive attitude about AMAs and ACAs, as well as a good understanding and

appreciation of their value. Fewer Activity Managers regard the completion of AMAs and ACAs as

a compliance requirement and there was a clear sense that more Activity Managers view the

completion of AMAs and ACAs as important opportunities to reflect analytically on the progress,

performance and challenges of Activities and their on-going improvement. This is substantiated

by relatively high AMA and ACA completion rates.

2. Using the adjusted post-interview robustness ratings, in AMAs, the robustness of output and

medium-term outcomes ratings is lower compared to previous AARs, but the robustness of short-

term outcome ratings is higher, reaching the 75% confidence threshold. In ACAs, the robustness

of short- and medium-term outcomes is just above the 75% confidence threshold.

3. References to RMTs as sources of evidence for completing AMAs and ACAs are increasing,

indicating that Activity Managers are increasingly relying on RMTs to keep track of Activities’

progress. The quality of RMTs appears to be improving. However, shortcomings of RMTs

continue to hamper monitoring and reporting of results. Many RMTs are not updated and are no

longer adequate to monitor progress of evolving Activities. Other major shortcomings of RMTs

include the absence of baselines, targets and data to monitor and report progress.

AMAs of funding for Multilateral Organisations appear to be especially challenging because the results frameworks and progress reporting of these Organisations do not always correspond to MFAT’s requirements. It appears that developing AMAs and ACAs for complex Activities, for example multi-country and multi-donor Activities, as well as Activities funded through Budget Support, could also be challenging because RMTs for these Activities are not straightforward.

It is encouraging that an increasing number of Activity Managers are proposing appropriate

actions to address the identified shortcomings of RMTs.

4. The identification and analysis of Cross-Cutting Issues remain a challenge for Activity Managers. Although the quality of analysis of CCIs in both AMAs and ACAs is showing improvement, the proportion of these analyses that received ‘good’ or ‘very good’ ratings remains small. There was also an increase in the proportion of AMAs and ACAs where CCI analyses received N/A ratings, suggesting that either no cross-cutting priorities were identified in the AMA or ACA (i.e. CCI is classified as ‘not measured’ or ‘not targeted’), or there was very limited or no further elaboration on CCIs in the document.

5. Observed improvements in the quality of more analytical aspects of AMAs and ACAs are

encouraging. Compared to the baseline, the proportion of ACAs that identified well-

substantiated, useful lessons increased by 19%, while proportionately more AMAs are shifting

from ‘inadequate’ to ‘adequate’ quality as far as the identification of actions to strengthen

Activities is concerned.

6. There is reason to be cautiously confident in the overall usefulness of AMAs and ACAs. The majority of AMAs and ACAs are of adequate usefulness. The proportions of AMAs and ACAs that are of inadequate usefulness have decreased substantially compared to the baseline: by 24% in the case of AMAs and by 20% in the case of ACAs. These appear to have shifted mainly from ‘inadequate’ to ‘adequate’ usefulness. However, a small proportion of AMAs and ACAs appear to be slipping back from ‘good’ to ‘adequate’ usefulness.

5. Conclusions

1. Despite improvements in qualitative aspects of reporting, AMAs and ACAs still do not provide sufficiently accurate, complete or stand-alone records of the Activity. As in previous AARs, Activity Managers draw on evidence from a range of sources to assess the effectiveness of Activities but tend not to document all this evidence in AMAs and ACAs. If evidence is not comprehensively documented, the loss of institutional knowledge leaves substantial gaps, especially where staff turnover is high. When these gaps build up year-on-year, new Activity Managers might find it challenging to complete insightful AMAs and ACAs, thereby jeopardising the robustness of AMAs and ACAs in the longer term.

2. Despite remaining challenges around the robustness of effectiveness ratings, AMAs and ACAs generally include some consistent, evidence-based information that could be drawn on to improve Activities. Providing useful information to improve complex Activities, which often have high whole-of-life costs, is challenging since ways that could improve these Activities may not be within MFAT’s full control.

3. So far, AARs have not revealed major statistically significant results related to AMA and ACA

improvement over time. Some trends are beginning to emerge, while valuable lessons have

contributed to a much-refined methodology. While MFAT does not expect linear improvement

due to contextual factors such as organisational capacity and incentives, over a longer time period

statistically significant changes may become evident.

6. Recommendations

1. AMAs and ACAs should remain as essential building blocks of the Aid Programme’s performance

management system. Activity Managers use AMAs and ACAs to reflect and assess the progress,

performance and challenges of Activities and they serve as important repositories of institutional

memory and continuity during Activity implementation. Increasingly insightful and usable

lessons and actions to address issues, if harnessed through a robust knowledge management

system, could also prove valuable in strengthening Activities.

2. Ongoing training and technical support would be important to ensure that gains made in the

robustness and usefulness of AMAs and ACAs are maintained and enhanced. Gradual

improvements are becoming evident, but it would be important to address known challenges and

strengthen capacity to maintain this positive momentum and to prevent the gains made from

being lost.

Continue to provide training and guidance for Activity Managers in Wellington and at Post (including locally-engaged staff) to ensure that they understand why and how to document the evidence base for AMAs and ACAs fully, yet concisely, to increase the proportion of AMAs and ACAs that provide stand-alone records of Activities’ progress and performance. This would be instrumental to lift the robustness and usefulness of AMAs and ACAs (and therefore their value as essential building blocks of the Aid Programme’s performance management system) to a higher level.

Training and support in the following priority areas could be considered:

Documenting consolidated evidence from several sources to justify effectiveness and

DAC criteria ratings.

RMT quality and wider socialisation of RMTs as foundations of Activity design,

monitoring and reporting, as well as dynamic tools for Activity improvement.

MERL expert assistance to support regular reviewing and updating of RMTs to ensure

that they remain relevant and up-to-date.

Strengthening RMTs and monitoring of complicated and complex Activities, for example

multi-donor and multi-country Activities, as well as Activities funded through budget

support.

Improving consistency and coherence in AMAs and ACAs, including identifying issues that affect the progress and performance of Activities, and following this through into meaningful, evidence-based actions to improve on-going Activities (in AMAs), or lessons relevant to comparable types of Activities (in ACAs).

3. Given the size of MFAT’s funding to Multilateral Organisations and the unique arrangements

around their monitoring, it could be beneficial to tailor guidance for the AMAs of these Activities.

4. Provide support to Activity Managers to identify and perceptively address appropriate Activity

cross-cutting markers:

Where a cross-cutting marker is identified as relevant, it should be dealt with

consistently and perceptively throughout the design, monitoring and reporting of the

activity, including in AMAs and ACAs.

Avoid including cross-cutting markers that are not relevant to an Activity in its

AMA/ACA.

5. AARs should continue to be conducted on a periodic basis to monitor the effect of known

enablers and constraints to the robustness and usefulness of AMAs and ACAs, as well as to

identify emerging challenges and actions for their continuous improvement. A larger database

will also enable meaningful trend analyses of the robustness and usefulness of AMAs/ACAs

across different sectors, programmes and budget levels.

basis of which the final rating was decided. Each reason was then coded to a common

theme/reason and a frequency analysis of themes/reasons was conducted.

Information on Activity Managers’ process when completing AMAs/ACAs, their

opinion of the AMA/ACA templates and guidance, their view of the robustness of

evidence available to inform the completion of AMAs and ACAs, as well as which

aspects of AMAs/ACAs they found especially challenging (and why) was documented. A

content analysis of the documented information was conducted to identify

commonalities and emerging themes.

Sample Revision for AAR Quantitative Analysis

Quantitative Analysis

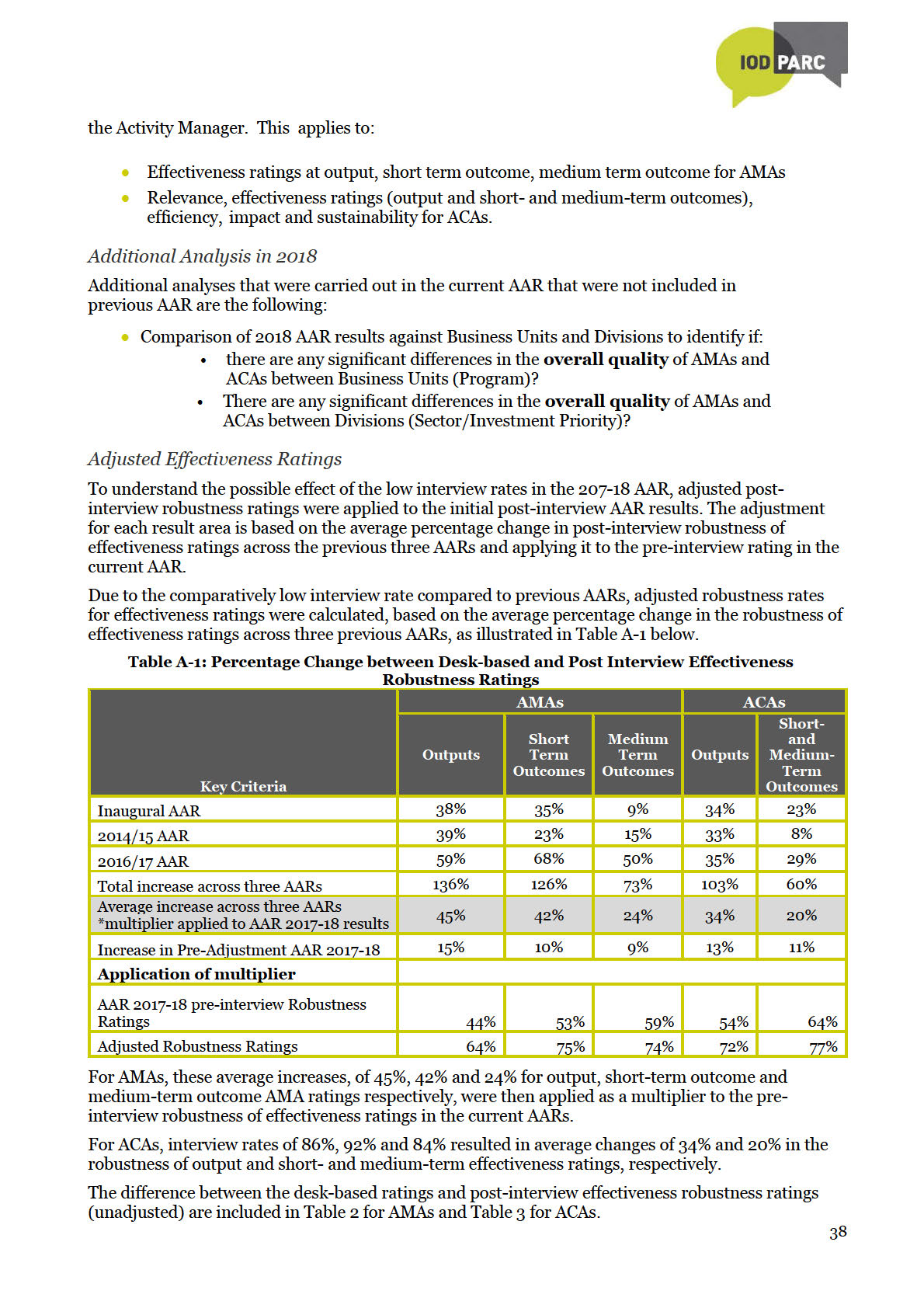

Comparative assessments of results between the four AARs was completed. Robustness of

effectiveness ratings at output, short term outcome, medium term outcome for AMAs and ACAs

as well as relevance, efficiency, impact and sustainability for ACAs was compared and presented

in charts. For the remaining criteria (Cross-cutting issues (AMAs and ACAs) , Overall quality

ratings (AMAs and ACAs), Actions to address issues (AMAs only), Activity Results Framework

(AMAs only), and Lessons learned (ACAs only)), the assessed ratings were compared by

percentage and presented in charts for inadequate (rating of 1 or 2), adequate (rating of 3) and

good (rating of 4 or 5).
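
The banding described above can be sketched as follows. This is a minimal illustration, not the assessors' actual tooling: the function names and the example ratings are hypothetical, and only the cut-offs (1 or 2, 3, 4 or 5) come from the text.

```python
from collections import Counter

BANDS = ("inadequate", "adequate", "good")


def band(rating):
    """Map a 1-5 rating to the three bands named in the text."""
    if rating in (1, 2):
        return "inadequate"
    if rating == 3:
        return "adequate"
    if rating in (4, 5):
        return "good"
    raise ValueError(f"rating must be an integer from 1 to 5, got {rating!r}")


def band_percentages(ratings):
    """Return the percentage of ratings falling in each band."""
    if not ratings:
        raise ValueError("at least one rating is required")
    counts = Counter(band(r) for r in ratings)
    total = len(ratings)
    return {name: 100.0 * counts[name] / total for name in BANDS}


# Illustrative ratings only; these are not figures from the AAR.
example_ratings = [1, 3, 3, 4, 5, 2, 4, 4]
print(band_percentages(example_ratings))
```

Keeping the cut-offs in one small function means the same banding is applied to every criterion before the percentages are charted.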

N/A Ratings

N/A ratings were removed from the observed proportion to enable the calculation of robustness.
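
As a hedged sketch of what removing N/A ratings means for the calculation, the snippet below drops N/A entries before computing the sample size and the observed proportion. The labels "robust", "non-robust" and "N/A" and the function name are assumptions made for illustration.

```python
def observed_proportion(assessments):
    """Return (n, p) after excluding N/A entries.

    assessments: list of strings, each "robust", "non-robust" or "N/A"
    (hypothetical labels). n is the number of rated items and p is the
    share of those rated items that were assessed as robust.
    """
    rated = [a for a in assessments if a != "N/A"]
    if not rated:
        raise ValueError("no rated items remain after removing N/A")
    n = len(rated)
    p = sum(1 for a in rated if a == "robust") / n
    return n, p


# Illustrative data only: two of the five entries are N/A and are ignored.
n, p = observed_proportion(["robust", "N/A", "non-robust", "robust", "N/A"])
print(n, p)
```

Note that excluding N/A entries reduces n, which in turn widens any confidence interval built on the remaining ratings.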

Scholarships Programme

under

Scholarships Activities were introduced to the AAR process in 2016 (AMAs for 2014-15) and have a

notable influence on the overall robustness of AMA effectiveness ratings. For purposes of

comparability across all four AARs, assessments of the robustness of AMAs in this report

consistently exclude Scholarship Activities unless noted otherwise.

Statistical Analysis of results

The Wilson score method was used to construct initial confidence intervals26:
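
For reference, the standard form of the Wilson score interval given by Newcombe (1998) is shown here, written with the symbols n, p, q and z defined in the following paragraph. It is assumed, not confirmed, that this is the form used in the AAR calculations:

$$\text{lower, upper} = \frac{2np + z^{2} \pm z\sqrt{z^{2} + 4npq}}{2\,(n + z^{2})}$$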

In this calculation, n is the sample size, p is the observed proportion, q = 1 − p and z is the quantile of the

standard normal distribution which depends on the desired level of confidence. For 95% confidence

intervals, as is the case in this instance, z is 1.96. Using the same formulas as in previous AARs27,

these were then adjusted using the ‘finite population correction’ method referenced by Burstein28 to

construct confidence intervals with more accurate coverage probability given the small population

sizes. This approach enables the nominal coverage of 95% to be maintained while narrowing the

confidence intervals.
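
The two steps described above can be sketched in code. The Wilson interval below follows the standard formula from Newcombe (1998). The finite population correction shown is an assumption: it uses the common factor sqrt((N − n) / (N − 1)) to shrink each half of the interval towards the observed proportion, and may differ from the exact Burstein-based formulas used in the AARs. The function names and the example proportion are hypothetical.

```python
import math


def wilson_interval(p, n, z=1.96):
    """Wilson score interval for an observed proportion p from a sample of size n."""
    if n <= 0 or not 0.0 <= p <= 1.0:
        raise ValueError("need n > 0 and 0 <= p <= 1")
    q = 1.0 - p
    centre = 2 * n * p + z * z
    spread = z * math.sqrt(z * z + 4 * n * p * q)
    denom = 2 * (n + z * z)
    return (centre - spread) / denom, (centre + spread) / denom


def fpc_adjusted_interval(p, n, population, z=1.96):
    """Wilson interval narrowed by a finite population correction.

    Assumption: each half-width is scaled by sqrt((N - n) / (N - 1)),
    where N is the population size. This is a common simple correction,
    not necessarily the report's exact method.
    """
    if population < 2 or not 0 < n <= population:
        raise ValueError("need population >= 2 and 0 < n <= population")
    lower, upper = wilson_interval(p, n, z)
    factor = math.sqrt((population - n) / (population - 1))
    return p - factor * (p - lower), p + factor * (upper - p)


# Sample and population sizes are those quoted in the interviewing script
# (102 reports drawn from 196); the proportion 0.8 is illustrative only.
print(wilson_interval(0.8, 102))
print(fpc_adjusted_interval(0.8, 102, 196))
```

With a sample that covers a large share of the population, the correction factor is well below 1, so the adjusted interval is visibly narrower than the unadjusted Wilson interval.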

To determine the statistical significance of differences in findings between the inaugural and

2017-18 AARs, and between the 2016-17 and 2017-18 AARs, the Z test based on normal approximation

in two finite populations was used29. It was possible to conduct statistical analyses when a rating was applied by

26 Newcombe, R.G. (1998) Two-sided confidence intervals for the single proportion: comparison of seven methods. Stat. Med. 17, 857-872.

27 Wilson’s method alone was used for the inaugural 2015 AAR report (2013-14 results). The 2016 AAR report (2014-15 results) used the current combination of Wilson overlaid with the FPC method referenced in Burstein; the 2013-14 results were revised accordingly for comparison. See 2016 AAR Report for further detail.

28 Burstein, H. (1975) Finite Population Correction for Binomial Confidence Limits. Journal of the American Statistical Association, Vol. 70, No. 349 (Mar., 1975), pp. 67-69; Rosenblum, M. and van der Laan, M.J. (2009) Confidence Intervals for the Population Mean Tailored to Small Sample Sizes, with Applications to Survey Sampling. Int. J. Biostat. 5(1):4.

29 Krishnamoorthy, K. and Thomson, J. (2002) Hypothesis Testing About Proportions of Two Finite Populations. The American Statistician, Vol. 56, No. 3 (August 2002), pp. 215-222.

Appendix 3: Distribution of sample

The following figures profile some of the key descriptors for the AMA and ACA samples:

Number of AMAs by Sector

Agriculture/Forestry/Fishing: 15
Government & Civil Society: 7
Education: 5
Economic Governance: 5
Agriculture: 5
Transport & Storage: 4
Health: 3
Water Supply & Sanitation: 2
Unspecified / Multi: 2
Reconstruction relief and rehabilitation: 2
Other multisector: 2
Law and Justice: 2
Humanitarian Response: 2
Trade / Tourism: 1
Resilience: 1
Population Policies/Programmes &…: 1
Emergency Assistance: 1
Communications: 1
Business & Other Services: 1
Banking & Financial Services: 1

Number of ACAs by Sector

Health: 4
Resilience: 3
Agriculture/Forestry/Fisheries: 1
Agriculture: 4
Other Multisectoral: 2
Governance: 2
Education: 2
Banking & Financial Services: 2
Water Supply & Sanitation: 1
Trade and Labour Mobility: 1
Renewable Energy: 1
Humanitarian Response: 1
Environment: 1
Economic Governance: 1
Business & Other Services: 1

Number of AMAs by Programme

Solomon Islands: 6
ASEAN Regional: 6
Multilateral Agencies: 5
Kiribati: 5
Scholarships: 4
Vanuatu: 3
Partnerships and Funds: 3
Pacific Human Development: 3
Pacific Economic: 3
Niue: 3
Indonesia: 3
Fiji: 3
Papua New Guinea: 2
Pacific Regional Agencies: 2
Latin America and the Caribbean: 2
Humanitarian & Disaster Management: 2
Tonga: 1
Tokelau: 1
Timor Leste: 1
Pacific Transformation Fund: 1
Pacific Energy & Infrastructure: 1
Myanmar: 1
Economic Development - ED: 1
Africa: 1

Number of ACAs by Programme

Partnerships and Funds (contestable): 6
Latin America & the Caribbean: 3
Papua New Guinea: 2
Other Asia: 2
Niue: 2
Multilateral Agencies: 2
Fiji: 2
Tonga: 1
Vanuatu: 1
Solomon Islands: 1
Samoa: 1
Pacific Human Development: 1
Pacific Economic Development: 1
Humanitarian & Disaster Management: 1
Economic Development - ED: 1
Africa Regional: 1

Number of AMAs and ACAs according to Whole-of-Life Budget Programme Approval*

$5 million and over: 31 AMAs, 6 ACAs
$3 million - $4.99 million: 8 AMAs, 5 ACAs
$1 million - $2.99 million: 16 AMAs, 6 ACAs
$500,001 - $999,999: 3 AMAs, 7 ACAs
< $500,000: 3 AMAs, 3 ACAs

*The Whole-of-Life Budget Programme Approvals for three AMAs and one ACA were not available.

Appendix 6: Interviewing script

INTRODUCTORY SCRIPT - MFAT Annual Assessment of Results (AAR) 2018

Thank you for agreeing to speak with me today.

As you will have been advised by the Development Strategy and Effectiveness team, I have been

involved in a review of a sample of AMAs and ACAs to determine the robustness of self-assessed

performance ratings in these reports. This year my colleague and I are reviewing 66 AMAs and 36

ACAs (102 in total) that were randomly selected from a total of 196 AMAs and ACAs submitted

between July 2017 and July 2018.

We would specifically like to discuss the ratings given against the effectiveness criteria – these are

the ratings provided for achievement of outputs, short-term outcomes and medium-term outcomes.

We will aim to keep the interview to 30 minutes (longer if more than one AMA or ACA will be

discussed). Time allowing, we may also briefly discuss assessments and ratings provided for other

performance criteria, as well as your experience of the tools, guidance and support available to

complete AMAs and ACAs.

The primary documents reviewed for our analysis are the AMAs/ACAs themselves, as well as the

corresponding partner report. We assessed whether or not the effectiveness ratings given appear

‘robust’ on the basis of the evidence and analysis presented in the AMA/ACA and the partner report.

Where further information and clarification are required, we have the option to speak with Report

Authors (Activity Managers) and/or Deputy Directors in order to gain further insight into the

evidence and considerations underlying the given ratings.

I asked to speak with you today because I need a deeper understanding of how you arrived at some

of the ratings in the following report/s (list here)

Before we begin the discussion, I would like to assure you that the discussion will be

strictly confidential. Individual AMA/ACA reports will not be identifiable in the Annual

Assessments of Results (AAR) report. All findings will be presented as percentages of the

sample, and aggregated for smaller programs. Any comments from interviewees that are

included in the report will be anonymous. Assessment and interview records will be stored

securely by the Development Strategy and Effectiveness team and will not be used for any

purpose other than to inform the AAR.

The AAR process will not result in a change to the original ratings in the MFAT system. The

assessors are not tasked to recommend any changes to the ratings in individual AMAs/ACAs.

Our main aim is to understand the process and quality of evidence that informed the ratings.

At the end of the interview, feedback on the independent assessment can be provided to Program

Managers/Activity Managers by the interviewers, if required/requested. This feedback will be

provided in general terms, that is, indicating the strengths/weaknesses of the AMA/ACA against a

suite of agreed criteria against which it was assessed.

Now, to confirm, we are discussing (Activity number and name – if more than one, take them one at

a time)

Questions on criteria:

To be inserted by interviewer based on desk assessment.

Focus on effectiveness ratings that were assessed as non-robust. Time allowing, then discuss

ratings for other criteria that were also assessed as non-robust.

Clarify evidence and interpretation of evidence to understand whether original rating is

robust or not.

In a separate row directly below the original assessment in the assessment template, indicate

what the assessed rating is after the interview and explain why, if it has changed from the

rating given at the time of the desk assessment (e.g. “The Activity Manager conducted a site

visit and provided information from this visit to justify the original rating. This information

was not documented in the AMA/ACA. Based on this information, the reviewer agrees with

the Activity Manager’s original rating – it is robust”).

If the Activity Manager cannot justify his/her original rating based on all available

information, then you may want to ask about his/her interpretation of the evidence which led

you to assess the original rating as non-robust. This is not about defending your assessment.

It is about allowing the Activity Manager to contextualise and explain that information,